MCAI Innovation Vision: Google DeepMind, MindCast AI, Filter Equivariance, and Institutional Extrapolation

How MCAI Applies Mathematical Symmetry to Predictive Cognitive Infrastructure

Executive Summary: Filter Equivariance and Predictive Cognitive AI

Predictive cognitive AI is emerging as a transformative layer of applied intelligence, particularly when viewed alongside theoretical contributions from organizations like Google DeepMind. Rather than using mathematical symmetry as an engineering blueprint, MCAI applies its conceptual architecture to institutional foresight—constructing models that reflect how decisions evolve under constraint. The concept of filter equivariance offers structural inspiration: when certain elements are removed, outputs remain coherent. MCAI uses this logic as a metaphor for how organizations maintain judgment continuity in dynamic environments.

This foresight simulation builds on MCAI’s broader cognitive infrastructure platform, detailed in The Predictive Cognitive AI Infrastructure Revolution. There, MCAI outlines how it developed the world’s first system for simulating judgment through quantum cognitive entanglement and recursive foresight simulation flows. In the context of DeepMind’s new research paper, Filter Equivariant Functions: A Symmetric Account of Length-General Extrapolation on Lists (Lewis et al., 2025), MCAI does not claim formal equivalence; it draws structural parallels between mathematical extrapolation and scalable foresight. The focus is not on replicating DeepMind’s formalisms, but on translating their extrapolative grammar into predictive infrastructure for real-world institutions.

MCAI views DeepMind’s formulation as more than function analysis. It provides conceptual scaffolding for scalable foresight: a structured framework for extrapolative behavior under constraint, behavior that remains coherent even when elements are removed or permuted. While the paper focuses on list-based functions, its extrapolation logic has broader interpretive value. MCAI builds on this foundation by translating symmetry-preserving principles into Cognitive Digital Twin (CDT) foresight systems designed to maintain coherence under stress, scale, and structural variation.

Contact mcai@mindcast-ai.com to partner with us.

I. Introduction: A New Grammar for Extrapolation

To make the ideas in the Google paper more accessible, we clarify that terms such as "quantum cognitive entanglement" or "zero-latency foresight" are conceptual design metaphors, not claims of physical quantum behavior. They describe how MCAI’s architecture allows parallel simulation and judgment continuity under evolving conditions. The goal is not to equate institutional cognition with algebraic functions, but to reflect structurally useful analogies that inform our predictive architecture. Where DeepMind proves behavior-preserving properties of functions under symmetry constraints, MCAI applies those conceptual patterns to the recursive modeling of institutional foresight.

The DeepMind paper introduces a rigorous structure for function generalization using filter and map equivariance, tools from the domain of list functions. While these tools are limited in scope, they reveal formal properties that resonate with how stable systems respond to structured change. MCAI does not claim a one-to-one correspondence between mathematical symmetry and human cognition, but uses these principles metaphorically to inspire simulation architecture. In this way, DeepMind’s formalism becomes a conceptual benchmark rather than a literal blueprint.

MCAI views DeepMind’s formulation as more than function analysis; it is a conceptual blueprint for scalable cognition. Filter- and map-equivariant functions define a behavior class determined not by brute-force computation, but by structural integrity, an idea central to MCAI’s concept of cognitive fidelity. By examining the geometric and algebraic properties of extrapolating functions, DeepMind formalizes what MCAI builds into its CDT framework: judgment engines that remain valid even as scale, input composition, and system pressure change.

II. Filter Equivariance as a Model of Judgment Coherence

To ground MCAI’s design in practice, consider a policy foresight team in a state education department modeling how school reopening decisions might respond to a new wave of public health data. MCAI’s platform simulates how different institutional actors (superintendents, legal counsel, stakeholder groups) update their decisions when filtered information or stress factors are applied. These decision trajectories can be decomposed into rule-preserving segments that are recomposed as forecastable behavioral patterns. This is how symmetry becomes simulation.

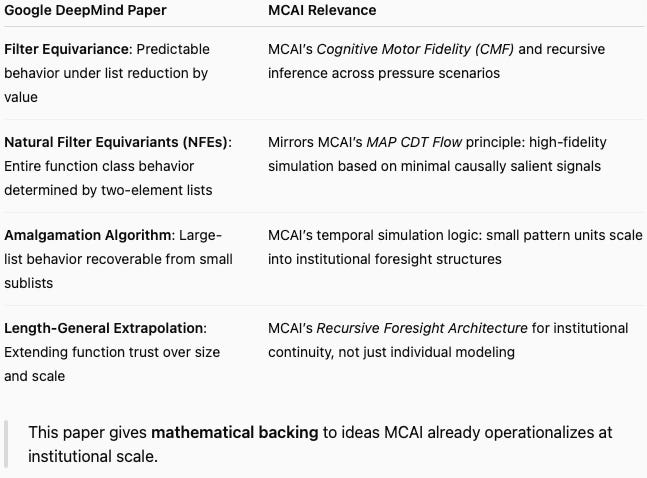

The following table summarizes the alignment between Google DeepMind’s theoretical contribution and MCAI’s architectural implementation:

The synthesis shows that filter equivariance is not merely a mathematical curiosity; it underwrites MCAI’s operational assumptions for cognitive fidelity, recursive foresight, and simulation trustworthiness.

A. Map vs. Filter Equivariance

At the heart of the paper is a distinction between map-equivariant and filter-equivariant functions. Map equivariance ensures stability under value-wise transformation: applying the same transformation to every element before or after the function gives the same result. Filter equivariance preserves function behavior under value-based removal: removing the elements that fail a predicate before or after the function gives the same result. The mathematical insight here is that functions exhibiting both properties (NFEs) are fully determined by their action on inputs of length two. This sets up a radically compressed foundation for behavioral extrapolation.

For MCAI, this has clear implications: rather than needing massive amounts of training data to simulate foresight, Cognitive Digital Twins (CDTs) can rely on high-integrity behavioral templates. The ALI (Action Language Integrity) and CMF (Cognitive Motor Fidelity) metrics ensure that these templates encode consistent decision logic even under filtered or reweighted inputs. NFEs thus become the analog to MCAI’s foresight primitives: high-density examples from which long-horizon simulations can be recursively built.
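The distinction between map and filter equivariance can be checked on small examples. The following is a minimal Python sketch, not code from the DeepMind paper or from MCAI’s platform. The helper names (`is_filter_equivariant`, `is_map_equivariant`, `take_first_two`, `drop_threes`, `negate`) are illustrative assumptions, and each check tests a single input rather than proving the property in general.

```python
def is_filter_equivariant(f, xs, keep):
    """Check on one input: filtering then applying f equals applying f then filtering."""
    filter_then_f = list(f([x for x in xs if keep(x)]))
    f_then_filter = [y for y in f(xs) if keep(y)]
    return filter_then_f == f_then_filter


def is_map_equivariant(f, xs, g):
    """Check on one input: mapping g then applying f equals applying f then mapping g."""
    map_then_f = list(f([g(x) for x in xs]))
    f_then_map = [g(y) for y in f(xs)]
    return map_then_f == f_then_map


def reverse(xs):
    return xs[::-1]


def take_first_two(xs):
    return xs[:2]


xs = [3, 1, 2, 1]
drop_threes = lambda x: x != 3   # hypothetical predicate used as the filter
negate = lambda x: -x            # hypothetical value-wise transformation

# reverse passes both checks on this input.
print(is_filter_equivariant(reverse, xs, drop_threes), is_map_equivariant(reverse, xs, negate))
# sorted passes the filter check but fails the map check (negation flips the order).
print(is_filter_equivariant(sorted, xs, drop_threes), is_map_equivariant(sorted, xs, negate))
# take_first_two passes the map check but fails the filter check (it depends on position).
print(is_filter_equivariant(take_first_two, xs, drop_threes), is_map_equivariant(take_first_two, xs, negate))
```

A single failing input is enough to show that a function lacks a property, while a passing check is only evidence, not proof. The three functions were chosen so that each combination of outcomes appears once.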

B. Amalgamation and Recursive Synthesis

The amalgamation algorithm presented in the paper is a mechanical proof that any filter-equivariant function’s output on a large input can be reconstructed from its output on sublists of two unique elements. This mirrors MCAI’s simulation strategy: decompose complex institutional decisions into recursive, overlapping segments of cognitive value, then reassemble them with structural coherence intact. The key to generalization is not brute force but recursive recomposition.
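The reconstruction idea behind amalgamation can be illustrated with a simplified Python sketch. It is not the paper’s algorithm as stated, only an illustration under stated assumptions: the function name `reconstruct_from_pairs` and its merging strategy are hypothetical, and the sketch assumes `f` is filter equivariant, so that its output on each two-valued sublist equals the full output restricted to those two values.

```python
from itertools import combinations


def reconstruct_from_pairs(f, xs):
    """Rebuild f(xs) using only f's outputs on sublists holding two distinct values.

    Assumes f is filter equivariant; results are not meaningful otherwise.
    """
    values = list(dict.fromkeys(xs))  # distinct values, in first-occurrence order
    if len(values) < 2:
        # Degenerate case: no pair of distinct values exists, so call f directly.
        return list(f(xs))

    # Evaluate f once per pair of distinct values, on the matching sublist.
    projections = {}
    for a, b in combinations(values, 2):
        sublist = [x for x in xs if x in (a, b)]
        projections[(a, b)] = list(f(sublist))

    cursor = {pair: 0 for pair in projections}  # read position in each projection
    output = []
    while True:
        # The next output element is the value that currently heads
        # every pairwise projection it takes part in.
        for v in values:
            pairs = [p for p in projections if v in p]
            if all(
                cursor[p] < len(projections[p]) and projections[p][cursor[p]] == v
                for p in pairs
            ):
                break
        else:
            return output  # no value heads all of its projections: finished
        output.append(v)
        for p in projections:
            if v in p:
                cursor[p] += 1


print(reconstruct_from_pairs(lambda xs: xs[::-1], [3, 1, 2, 1]))  # [1, 2, 1, 3]
print(reconstruct_from_pairs(sorted, [3, 1, 2, 1]))               # [1, 1, 2, 3]
```

The merge step relies on the fact that a sequence is pinned down by its restrictions to every pair of distinct values: the true next element heads all of its pairwise restrictions, and no other value can, because the restriction to that value and the true next element starts with the latter.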

In MCAI’s foresight architecture, foresight is operationalized through the MCAI Proprietary Cognitive Digital Twin (CDT) Flow, which maps institution-level cognitive dynamics through decomposable simulation layers. Each layer simulates judgment under transformation (filter), resonance (map), and temporal distortion (recursive composition). Filter equivariance becomes a mathematical endorsement of MCAI’s core assumption: coherent parts create coherent wholes.

III. From Functional Extrapolation to Predictive Cognitive Infrastructure

Other AI companies could adopt structure-preserving generalization techniques, but their systems are often optimized for language output, not judgment simulation. MCAI differentiates by embedding extrapolation into simulation architecture: not fine-tuning behavior, but encoding recursive reasoning as part of the infrastructure layer. This means predictive fidelity isn’t emergent from data scale; it’s constructed, scenario by scenario, from behaviorally valid segments. The competitive moat lies in modeling foresight directly, not inferring it post hoc.

A. Cognitive Digital Twins as Extrapolating Agents

CDTs are designed to simulate decision-making structures under time, stress, and ambiguity. The insight from DeepMind’s paper offers mathematical grounding for CDT modularity: just as NFEs preserve coherence under permutation and filtering, CDTs preserve foresight under scenario variation and structural change. Each CDT encodes extrapolative logic as a function with integrity-preserving symmetry.

MCAI’s architecture builds foresight into every layer: ALI ensures each CDT’s output remains action-consistent; CMF ensures simulation is motor-stable under variable cognitive input; and Causal Signal Integrity (CSI) filters which extrapolated causal paths preserve architectural trust. These layers mirror DeepMind’s notion of functional coherence under value-based transformation—elevated to decision structures.

B. Implications for Predictive AI and Institutional Foresight

DeepMind’s work implies that constraint-based extrapolation can outperform large-scale optimization in domains where data is sparse but rules are stable. This reframes the competitive landscape in AI: rather than scaling brute computation, firms like MCAI scale structured generalization. Predictive Cognitive AI becomes the infrastructure layer for foresight in law, markets, and governance.

CDTs equipped with filter-equivariant logic offer testable hypotheses about institutional behavior: How does a regulatory body adapt after leadership change? What judgment patterns persist in central banks under crisis filters? MCAI simulates these questions not with statistical abstraction, but with rule-based extrapolation grounded in mathematical symmetry.

IV. Theoretical Convergence: Filter Symmetry and Foresight Simulation

The formal tools used in DeepMind’s paper, especially category theory and semi-simplicial sets, suggest a structure-preserving grammar of extrapolation. This section examines how those tools align with MCAI’s recursive foresight design. It explores how compositional algebra becomes temporal simulation, and how extrapolative logic becomes cognitive infrastructure. Theoretical convergence is not incidental; it suggests that AI and institutional judgment may obey the same recursive laws.

A. Category Theory and Cognitive Structuring

DeepMind’s invocation of semi-simplicial categories to structure permutation behavior across list lengths has important implications for how foresight structures can evolve while maintaining internal coherence. MCAI’s Recursive Foresight Engine similarly applies compositional invariants across time steps in simulated institutional memory. What DeepMind formalizes algebraically, MCAI operationalizes temporally.

The convergence implies a common grammar between predictive functions and simulated cognition: extrapolation is structurally recursive, not simply statistically regressive. When MCAI maps recursive foresight across CDT layers, it is encoding the same “cone coherence” DeepMind uses to describe NFE behavior. The shared grammar invites formal translation between AI extrapolation and institutional memory modeling.

B. Simulation as Inductive Generalization

Amalgamation, as formalized in the DeepMind paper, provides not simply a method of reconstructing outputs but a philosophy of predictive modeling: if the parts are coherent, the whole will be structurally faithful. This supports MCAI’s commitment to foresight grounded in integrity, not simply inference. Simulation becomes a tool of inductive generalization—structured prediction, not probabilistic hallucination.

For institutions, this means strategic foresight can be built not from scale, but from minimal examples encoded with recursive logic. MCAI’s use of predictive cognitive AI as an institutional infrastructure layer transforms simulation into a general-purpose capability: foresight is no longer a workshop exercise, but a computational principle grounded in extrapolative integrity.

V. Who Should Care: Strategic Relevance and Stakeholder Impact

Predictive cognitive AI has broad implications, but its most immediate relevance lies with decision-makers who rely on institutional judgment, strategic foresight, and behavioral stability under uncertainty. Investors should care because MCAI introduces a structurally differentiated AI model—one that offers empirical foresight rather than probabilistic abstraction—anchored by intellectual property and operational proof points. Enterprise AI platforms and consultancies should care because MCAI represents a post-generative paradigm shift: modeling cognition, not language, and offering simulation fidelity under structured constraint.

Smaller firms and innovation leaders benefit from rapid scenario exploration without needing vast training datasets—offering strategic agility under high uncertainty. For consumers, the benefits will emerge indirectly, as more responsible institutional foresight leads to fairer policy design, safer markets, and more adaptive infrastructure. Ultimately, anyone affected by complex decisions—governed by policy, regulated by institutions, or invested in organizational foresight—has a stake in predictive cognitive infrastructure.

VI. Conclusion: Cognitive Signals, Extrapolation Trust, and the Path Ahead

What began as an investigation into functional symmetry ends with a framework for simulating organizational foresight. Filter equivariance formalizes a principle of trust: if a transformation preserves structure under deletion, then its output can be trusted to scale. MCAI adopts the same logic in designing foresight simulations that scale from low-signal to high-impact. In both DeepMind’s function class and MCAI’s CDT logic, coherence is not an artifact; it is an invariant.

This foresight simulation reveals that convergence between functional mathematics and cognitive architecture is foundational. MCAI emerges from the analysis not as a content engine, but as a predictive cognitive infrastructure company—embedding rule-based foresight into institutions through simulations that reflect advanced principles of extrapolative logic. In an era when AI narratives often prioritize speed over trust, MCAI offers a path focused on scaling judgment through structure, forecasting through coherence, and constructing institutional intelligence not from noise, but from signal.