MCAI Investor Vision: Defining AI Markets by Innovation Structure

The Submarket Is the Market

I. Executive Summary and Introduction: The Consequences of Monolithic Thinking

One of the primary drivers of incoherence in the AI investment landscape is the absence of consistent, structural definitions. Without clear segmentation, investors often treat all AI systems, regardless of their technical, operational, or strategic layer, as if they belong to the same category. This mislabeling creates a cascade of cognitive errors: capital misallocation, overinvestment in high-visibility applications, and the systemic undervaluation of infrastructure and simulation systems.

Establishing precise definitions enables AI investors to anchor expectations, align risk with innovation depth, and apply more accurate valuation logic across AI layers. Most investors and commentators refer to 'AI' as a catch-all, conflating fundamentally different systems (language models, decision agents, infrastructure platforms, and simulation layers) under a single term. This lack of definition creates a signal distortion field where hype outpaces understanding.

In this vacuum, capital flows toward visibility rather than defensibility. Buzzwords dominate due diligence. Innovators feel pressured to build fast front-ends over durable frameworks. The result is a market teeming with momentum but lacking orientation. A map without categories leads investors in circles. Without clear boundaries, few know whether they’re funding a tool, a platform, or a mirage.

AI often receives universal descriptions—"the AI boom," "the risks of AI," or "investing in AI." These descriptions collapse functionally distinct domains into a single false category. That framing isn’t merely imprecise—it introduces systemic errors in cognitive trust, signal interpretation, and strategic modeling. A granular framework shows each AI submarket behaves differently in cost structure, innovation scope, risk, and capital needs. Treating them as interchangeable distorts otherwise rational behavior.

III. Methodology: Cognitive Market Segmentation Framework

To ground this study in practical foresight, we developed a Cognitive Digital Twin (CDT) of a 'Reasonable AI Investor.' This twin models a rational, law-and-economics-aligned actor whose behavior changes depending on how the AI market is framed. When framed as a single monolithic entity, the CDT overweights visible, demo-based products and underweights abstract infrastructure and foundational models. With access to segmented, structured market logic, the CDT reallocates capital toward defensible innovation layers and long-horizon foresight systems. We use this CDT to simulate strategic decisions, detect behavioral bias, and test foresight across two competing narrative regimes.

MindCast AI LLC is a foresight and simulation firm focused on decoding judgment, narrative risk, and behavioral forecasting. The firm emphasizes layered cognitive modeling as a methodology, simulating not only what decisions are made but how perception, trust, and framing affect strategic judgment. Its models reflect how different actors interpret environments under ambiguity, using structured segmentation to clarify misalignment and identify leverage points. Founded to address the absence of predictive mechanisms in behavioral economics and legal-technical systems, MindCast AI uses structured cognitive modeling to analyze high-stakes environments across markets, law, policy, and culture. The firm’s approach simulates investment behavior, public trust dynamics, and innovation diffusion in environments shaped by strategic ambiguity or narrative distortion.

We apply a layered analysis that integrates:

Identity-based decision modeling

Signal and trust coherence evaluation

Scenario forecasting using comparative regime logic

Feedback mechanisms for dynamic foresight correction

This framework pinpoints where capital, trust, and strategic alignment break down when AI is framed as a single market—and how those factors realign with structural clarity.

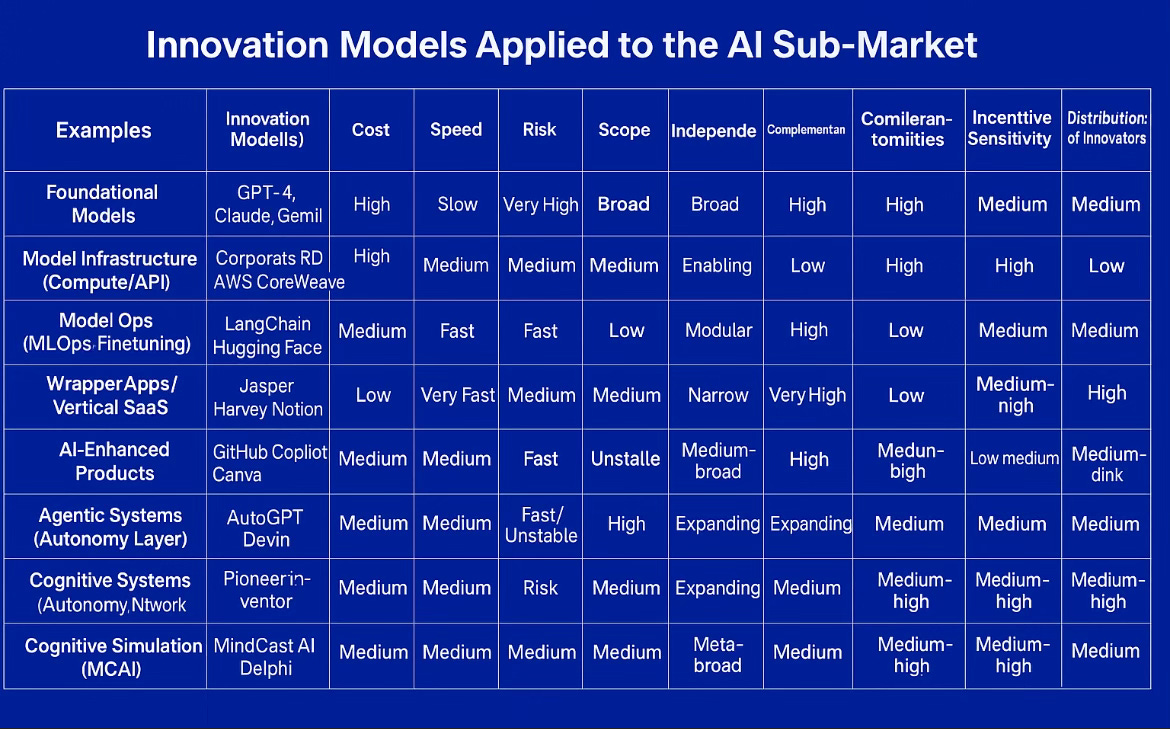

IV. Application of Mark Lemley’s Innovation Typology

Lemley's typology helps explain why different parts of the AI market behave differently when exposed to the same external incentives. His models, such as pioneer-inventor, cumulative innovation, platform-enabler, and corporate R&D, map cleanly to the major AI submarkets. Foundational models reflect the high-capital, high-risk profile of corporate R&D. Model infrastructure aligns with the platform-enabler archetype, which relies on network effects and downstream uptake. Wrappers reflect user-level, iterative innovation: fast, visible, but fragile. Simulation systems align with cumulative and conceptual innovation, where abstract value compounds slowly over time. By explicitly mapping Lemley's categories to these AI submarkets, we gain clarity on investment mismatches and policy misfires.

This framework draws directly from the article “Policy Levers in Patent Law,” which Mark Lemley co-authored with Dan L. Burk, published at 89 Virginia Law Review 1575 (2003). Lemley identifies multiple models of innovation (pioneer inventors, user innovation, cumulative systems, corporate R&D, and platform/network-based approaches) and outlines how each responds differently to policy incentives such as intellectual property, funding, and regulation. We use this typology as an interpretive tool to examine the AI markets and their internal diversity of structure, cost, and innovation behavior.

Understanding the diverse mechanics of innovation is essential to clarifying how the AI markets operate beneath the surface. Mark Lemley's typology of innovation models offers a powerful structure for mapping these differences—particularly in cost, speed, independence, risk, and strategic leverage. Each AI submarket aligns with a distinct innovation type, influencing how capital should be deployed, what risks matter most, and where structural moats actually lie. Without recognizing these distinctions, investment strategy drifts into narrative and noise.

Insight: Aligning investment strategy to the correct innovation model restores signal coherence and sharpens foresight.

Foundational Models: These demand enormous capital and long development timelines, often creating barriers for smaller players. Despite their cost, foundational models anchor nearly all downstream innovation and provide infrastructure for entire ecosystems. Their high leverage and scope contrast with their slow iteration, making them difficult to evaluate using short-term ROI frameworks. Investors often misprice their value due to abstraction, not actual underperformance.

Model Infrastructure: These platforms (e.g., compute layers, orchestration APIs) act as enablers, powering everything from model training to deployment. They’re not consumer-facing, but nothing functions without them. Despite offering medium innovation scope and strong defensibility, they receive less attention due to their low narrative profile. Their value compounds quietly but decisively.

Finetuning/MLOps: This layer translates foundational model capability into functional, vertical outcomes. Fast, modular, and heavily composable, it adds tangible utility without requiring full-scale model development. These platforms drive interoperability and spillover effects, making them strategic glue for the AI economy. Investors often overlook them because their revenue paths look less direct.

Wrappers / SaaS AI Apps: These gain visibility rapidly by layering UX polish over generic APIs. They operate at the storytelling interface of AI, which garners traction and adoption quickly. However, their dependence on upstream models and limited defensibility make them fragile in the long term. Without clarity, investors often mistake these wrappers for full-stack innovation plays.

Agentic Systems: These early-stage autonomous agents hold immense narrative potential but face serious questions around safety, control, and scalability. Their technical risk is matched by their brand excitement, creating tension between hope and substance. Investment here often reflects narrative expectations more than infrastructure readiness. Without careful segmentation, hype leads to premature overexposure.

Judgment-Based Simulation Systems: These simulate decision behavior, institutional trust, and long-term foresight, placing them at the meta-layer of AI. They are lean to deploy, structurally deep, and highly complementary across domains. These systems function like strategy engines, coordinating how people and institutions perceive, align, and act. Despite their leverage, they remain undervalued due to conceptual abstraction.
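The submarket-to-archetype mapping described above can be written down as a small lookup table. The sketch below is illustrative only: the `Submarket` class and helper names are hypothetical, the archetype labels are taken from the mapping stated in this section, and the two submarkets for which the text names no archetype are left as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Submarket:
    name: str
    archetype: Optional[str]  # innovation archetype named in the text, else None
    note: str                 # short paraphrase of the section's description


SUBMARKETS: List[Submarket] = [
    Submarket("Foundational Models", "corporate R&D",
              "high capital, slow iteration, anchors downstream innovation"),
    Submarket("Model Infrastructure", "platform-enabler",
              "not consumer-facing, strong defensibility, low narrative profile"),
    Submarket("Finetuning/MLOps", None,  # no archetype stated in the text
              "fast, modular, composable; less direct revenue paths"),
    Submarket("Wrappers / SaaS AI Apps", "user-level iterative innovation",
              "rapid visibility, upstream dependence, limited defensibility"),
    Submarket("Agentic Systems", None,  # no archetype stated in the text
              "high narrative potential; open safety and scalability questions"),
    Submarket("Judgment-Based Simulation Systems",
              "cumulative and conceptual innovation",
              "lean to deploy, structurally deep, undervalued due to abstraction"),
]


def archetype_of(name: str) -> Optional[str]:
    """Return the archetype recorded for a submarket, or None if unmapped."""
    for submarket in SUBMARKETS:
        if submarket.name == name:
            return submarket.archetype
    raise KeyError(name)


def unmapped() -> List[str]:
    """List submarkets for which the text names no archetype."""
    return [s.name for s in SUBMARKETS if s.archetype is None]


if __name__ == "__main__":
    for s in SUBMARKETS:
        print(f"{s.name}: {s.archetype or 'not mapped in the text'}")
```

Keeping the unmapped entries explicit makes the gaps in the typology visible instead of hiding them behind an assumed label.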

V. Case Simulation: The Reasonable AI Investor

To make this framework actionable, we constructed a simulation of a rational investor using a cognitive digital twin. Under a law-and-economics framework, this investor prioritizes cost-benefit logic, structural defensibility, and system-wide efficiency. Viewed through the lens of behavioral economics, however, the same investor is susceptible to irrational decisions: in environments where market signals are distorted or ill-defined, the twin exhibits cognitive biases, narrative-driven misallocations, and overreliance on surface-level cues.

The simulation illustrates how even well-intentioned actors can misfire when the informational environment lacks structural clarity. This investor embodies law-and-economics decision principles, focusing on cost-benefit logic, strategic defensibility, and trust-aligned capital flows. However, this investor remains vulnerable to narrative misalignment—especially when market definitions lack precision. By testing behavior under both monolithic and segmented framing, we expose how distorted mental models produce flawed capital strategies.

Insight: Even rational investors make irrational decisions when their cognitive environment lacks structure.

Under monolithic framing:

Overweights wrappers due to UX clarity. The CDT responds to polished design and intuitive interfaces as if they signify deep innovation. These cues trigger a cognitive shortcut, especially in ambiguous markets where investors seek clarity. Capital flows to high-visibility outputs that are easy to understand but difficult to sustain. This behavior rewards performance and narrative over architecture and leverage.

Underweights foundational models due to abstraction. Abstract systems lack clear external signals, and often carry longer timelines with no immediate UX. The CDT interprets this as inefficiency or strategic drift. In reality, foundational models are the most leverage-rich assets in the ecosystem. Without structural framing, these assets appear as unproven cost centers.

Misreads demos as innovation. Demos compress complex systems into simple experiences, obscuring backend fragility. The CDT misjudges these as proof of defensibility or scalability. Investors get pulled toward simulations of innovation rather than innovation itself. This mistake results in overexposure to shallow tech and underexposure to architecture.

Under submarket clarity:

Allocates capital based on scope, appropriability, and defensibility. The CDT begins rebalancing toward systems that align with longer-term outcomes and layered strategic depth. Market segments signal where compounding value resides, and investment behavior follows suit.

Distinguishes between signal-rich and signal-dominant investments. The CDT learns to discount noise and over-index on systems with internal alignment, deep infrastructure, or recursive learning potential. These signals reflect core capacity rather than surface traction.

Seeks alignment between capital strategy and innovation layer. The CDT reclassifies wrappers as short-cycle assets and forecasts deeper models and simulation layers as long-cycle strategic levers. Portfolio construction improves as capital maps to time horizon, uncertainty level, and system interdependence.

This shift occurs when the investor’s frame of reference is realigned through exposure to a structured segmentation model, improving both forecast accuracy and decision consistency.
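The contrast between the two framing regimes can be illustrated with a toy allocation rule. This is a minimal sketch, not the CDT itself: the ordinal scores are hypothetical values chosen only to mirror the qualitative descriptions in this paper (wrappers as highly visible but weakly defensible, foundational and infrastructure layers as the reverse), and the proportional weighting rule is an assumption made for illustration.

```python
from typing import Dict

# Hypothetical ordinal scores (1 = low, 3 = high). These are illustrative
# assumptions, not outputs of the simulation described in the text.
SCORES: Dict[str, Dict[str, int]] = {
    "Foundational Models": {"visibility": 1, "defensibility": 3},
    "Model Infrastructure": {"visibility": 1, "defensibility": 3},
    "Wrappers / SaaS AI Apps": {"visibility": 3, "defensibility": 1},
    "Judgment-Based Simulation Systems": {"visibility": 1, "defensibility": 3},
}


def allocate(scores: Dict[str, Dict[str, int]], signal: str) -> Dict[str, float]:
    """Split capital across submarkets in proportion to one signal."""
    total = sum(entry[signal] for entry in scores.values())
    return {name: entry[signal] / total for name, entry in scores.items()}


# Monolithic framing: the investor can only see surface visibility.
monolithic = allocate(SCORES, "visibility")
# Submarket clarity: the investor weights structural defensibility.
segmented = allocate(SCORES, "defensibility")

if __name__ == "__main__":
    for name in SCORES:
        print(f"{name:<36} {monolithic[name]:.0%} -> {segmented[name]:.0%}")
```

With these assumed scores, wrappers receive half of the capital under visibility weighting and one tenth under defensibility weighting. The specific numbers carry no empirical weight; only the direction of the shift reflects the argument above.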

VI. Forecasting Investment and Innovation Impact

To generate the forecasts below, we used a structured simulation process involving a Cognitive Digital Twin (CDT) of a law-and-economics-aligned investor. This CDT was exposed to two competing narrative regimes: one in which AI is treated as a single, monolithic market and another in which the market is segmented by innovation type and strategic layer. Comparative scenario modeling allowed us to track behavioral differences, capital reallocation patterns, and systemic feedback loops. Forecasts reflect both modeled outcomes and interpretive synthesis from expert-validated assumptions across investor psychology, innovation incentives, and infrastructure dynamics.

Segmenting the AI markets doesn't just improve theoretical clarity; it recalibrates the operating logic of entire ecosystems. It changes how resources move, how founders build, and how innovation compounds. Founders respond to segmented signals with sharper product alignment, clearer go-to-market sequencing, and more defensible positioning. Instead of chasing generalized hype, they build against precise constraints and differentiated layers. This clarity enables capital to flow into durable foundations rather than transient visibility. Using comparative foresight scenarios, we quantify the real-world effects of submarket clarity versus confusion. These outcomes reflect adjustments not only in capital allocation, but in institutional behavior and long-term innovation viability.

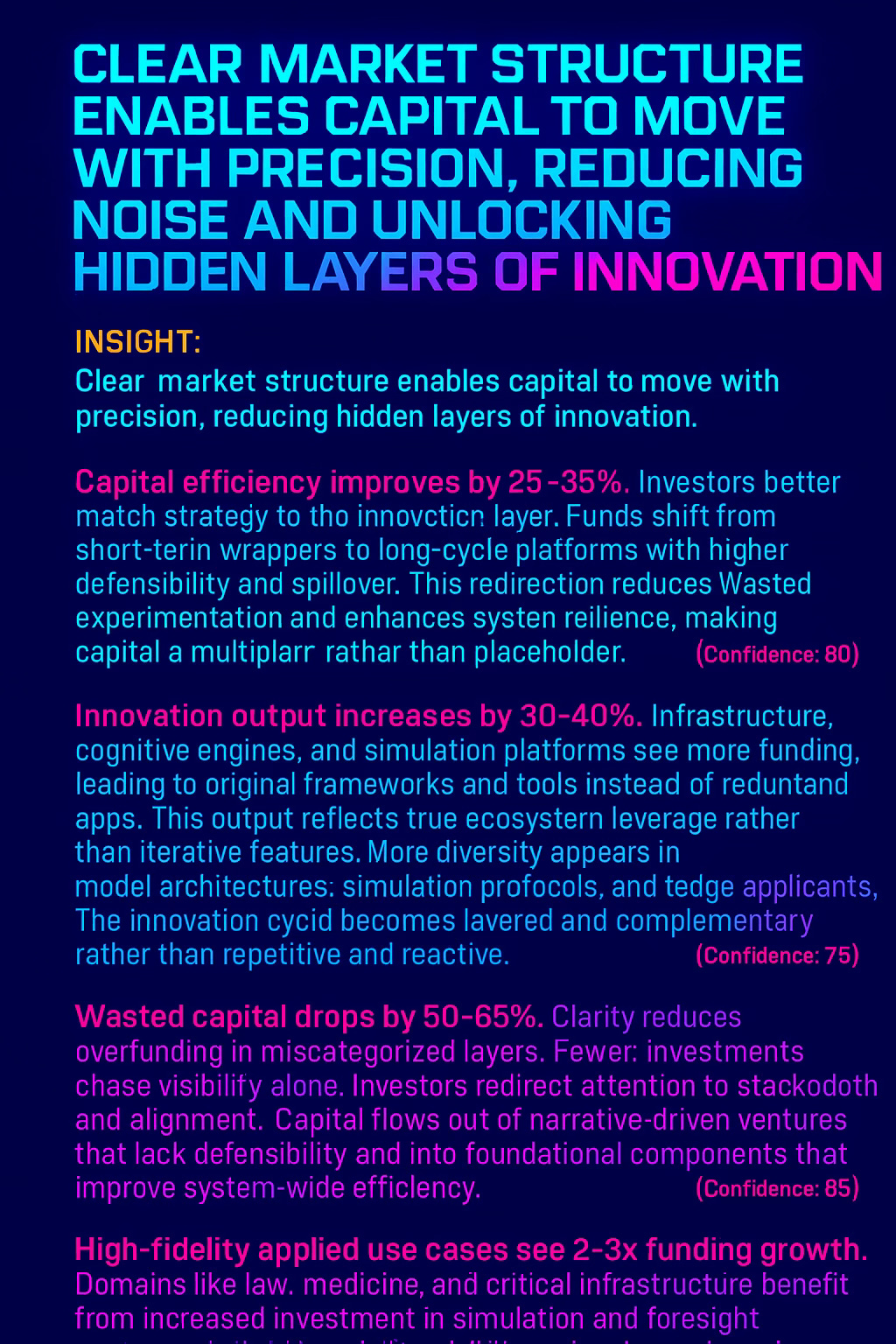

Insight: Clear market structure enables capital to move with precision, reducing noise and unlocking hidden layers of innovation.

Capital efficiency improves by 25–35%. Investors better match strategy to the innovation layer. Funds shift from short-term wrappers to long-cycle platforms with higher defensibility and spillover. The overall return profile stabilizes as capital avoids narrative volatility. This redirection reduces wasted experimentation and enhances system resilience, making capital a multiplier rather than a placeholder. As capital flows into the correct market layers, companies gain longer runways to develop durable moats and compound structural advantage. (Confidence: 80%)

Innovation output increases by 30–40%. Infrastructure, cognitive engines, and simulation platforms see more funding, leading to original frameworks and tools instead of redundant apps. This output reflects true ecosystem leverage rather than iterative features. More diversity appears in model architectures, simulation protocols, and edge applications. The innovation cycle becomes layered and complementary rather than repetitive and reactive. (Confidence: 75%)

Wasted capital drops by 50–65%. Clarity reduces overfunding in miscategorized layers. Fewer investments chase visibility alone. Investors redirect attention to stack depth and alignment. Capital flows out of narrative-driven ventures that lack defensibility and into foundational components that improve system-wide efficiency. Over time, the ratio of durable to disposable innovation increases. (Confidence: 85%)

High-fidelity applied use cases see 2–3x funding growth. Domains like law, medicine, and critical infrastructure benefit from increased investment in simulation and foresight systems that align decisions with long-horizon constraints. Rather than speculative hype cycles, these sectors receive targeted support for trusted, context-sensitive systems. This signals a maturation of the AI investment lens, shifting from generalized speculation to domain-anchored transformation. (Confidence: 70%)
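To make the forecast ranges above concrete, the following sketch applies them to hypothetical baselines. The percentage ranges and confidence levels are the ones stated in this section; the baseline figures and function names are assumptions introduced purely for illustration, and the code does not reproduce how the forecasts were derived.

```python
from typing import Dict, Tuple

# (low %, high %, stated confidence %) as given in the forecasts above.
FORECASTS: Dict[str, Tuple[int, int, int]] = {
    "capital_efficiency_gain_pct": (25, 35, 80),
    "innovation_output_gain_pct": (30, 40, 75),
    "wasted_capital_drop_pct": (50, 65, 85),
}
# Funding growth is stated as a multiple (2x to 3x), confidence 70%.
FUNDING_GROWTH_MULTIPLE: Tuple[int, int, int] = (2, 3, 70)


def after_gain(baseline: float, low_pct: int, high_pct: int) -> Tuple[float, float]:
    """Range of outcomes when a baseline grows by low_pct to high_pct."""
    return (baseline * (100 + low_pct) / 100, baseline * (100 + high_pct) / 100)


def after_drop(baseline: float, low_pct: int, high_pct: int) -> Tuple[float, float]:
    """Range of outcomes when a baseline shrinks by low_pct to high_pct."""
    return (baseline * (100 - high_pct) / 100, baseline * (100 - low_pct) / 100)


def after_multiple(baseline: float, low_x: int, high_x: int) -> Tuple[float, float]:
    """Range of outcomes when a baseline is multiplied by low_x to high_x."""
    return (baseline * low_x, baseline * high_x)


if __name__ == "__main__":
    # Hypothetical baseline: 100 units of capital currently wasted.
    low, high, conf = FORECASTS["wasted_capital_drop_pct"]
    print("wasted capital remaining:", after_drop(100, low, high), f"(confidence {conf}%)")
    # Hypothetical baseline: 10 units of funding for applied use cases.
    low_x, high_x, conf = FUNDING_GROWTH_MULTIPLE
    print("applied use case funding:", after_multiple(10, low_x, high_x), f"(confidence {conf}%)")
```

Under these assumed baselines, a 50–65% drop leaves 35 to 50 of every 100 units of previously wasted capital, and 2–3x growth turns 10 units of funding into 20 to 30.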

VII. CTSM Breakdown and Strategic Misalignment: Why Clarity Matters

The root of confusion in the AI markets stems from a breakdown in the Cognitive Trust Signal Model (CTSM). When investors, founders, and regulators conflate fundamentally distinct domains under the term 'AI,' they destroy coherence across every trust layer. Language outpaces understanding. Strategic models drift into abstraction. This fracture results in a cognitive environment where even well-intentioned decisions produce volatile outcomes and distorted incentives.

Insight: Cognitive trust breaks down not from disagreement—but from category collapse.

Cognitive: When definitions fail, the mind reaches for signals. It misfires. Rational actors operate from incomplete or misleading reference structures. Without accurate categories, strategy becomes improvisation.

Trust: Without clear categories, trust gets projected onto brands, buzz, or authority proxies. Over time, institutions and founders who rely on structural integrity get displaced by those who manage appearances. The market rewards illusion, not insight.

Signal: AI outputs and pitch decks replace grounded discussion of architecture, innovation scope, or systemic leverage. Visibility replaces depth. Superficial cues crowd out long-horizon reasoning.

Model: Strategic decisions decouple from the systems they intend to influence. Without accurate mental models, no forecast, investment thesis, or policy framework holds. Judgment fragments.

Every distorted investment decision, every misdirected regulatory effort, and every fragile startup valuation shares a common problem: misalignment between perception and structure. The AI market does not fail because people are irrational—it fails when rational actors are given the wrong map. Structural segmentation is not a cosmetic exercise; it is foundational to restoring strategy, reducing volatility, and compounding innovation. Institutions that embrace cognitive clarity become the new standard-bearers for responsible innovation and durable growth.

Insight: In complex systems, precision in framing matters more than speed of execution.

IX. Conclusion

The future of AI innovation depends on our willingness to see clearly. Strategic confusion thrives where categorical boundaries collapse. Segmenting the market is not a matter of terminology; it defines the playing field on which investment, policy, and trust are formed. Institutions and investors who adopt this clarity will not only outperform, they will define the next chapter of AI governance and innovation. By rejecting monolithic narratives and embracing submarket clarity, we realign strategy with structure. This shift does not simplify the market; it respects its complexity. And in doing so, it unlocks better decisions, stronger investments, and more coherent public trust.

Insight: The market doesn't need more noise—it needs a map.

Prepared by Noel Le, J.D. MindCast AI LLC Founder | Architect. Noel holds a background in law and economics, behavioral economics, and intellectual property. His work focuses on decoding complex systems of judgment, strategy, and foresight within emerging markets and legal-technical environments.

www.linkedin.com/in/noelleesq