MCAI Innovation Vision: NVIDIA’s SLM Thesis and Apple’s Cognitive AI Future

Extending NVIDIA’s 2025 Study into Platform Competition and Apple’s Predictive Operating System

The vision statement itself is a demonstration of MindCast AI’s predictive cognitive foresight capabilities. Using Cognitive Digital Twins and foresight simulation, it extends NVIDIA’s 2025 study beyond technical benchmarks to explore how Apple, Google, Microsoft, and OpenAI may adapt in practice. By weaving cost curves, hardware architecture, trust narratives, and adoption dynamics into coherent pathways, the document shows how predictive cognitive AI can anticipate competitive futures.

Executive Summary

Imagine your phone knowing what you need before you ask. Picture devices that learn your patterns, anticipate your tasks, and seamlessly coordinate your digital life. The inevitable next phase of computing is arriving faster than most realize.

NVIDIA's research paper, Small Language Models are the Future of Agentic AI (June 2025), charts the path forward. It argues that Small Language Models have reached a tipping point: on many scoped tasks they now match much larger cloud-based systems while running efficiently on devices in your pocket. While the research centers on agentic AI systems, its efficiency and deployment arguments apply broadly across language model applications.

Four technology giants will determine how this unfolds: Apple commands integrated hardware and trusted memory systems. Google controls Android distribution with advancing Tensor capabilities. Microsoft dominates enterprise through Windows and Office integration. OpenAI leads research but lacks device platforms.

Apple stands at the center of this transformation. While competitors scramble to build new infrastructures, Apple already possesses the integrated platform needed to deliver persistent, predictive intelligence. The company that redefined phones, tablets, and watches is poised to redefine computing itself.

Contact mcai@mindcast-ai.com to partner with us on predictive cognitive AI.

I. The Great AI Shift

The era of massive, cloud-dependent AI is ending. NVIDIA's research makes the case for what many suspected: bigger isn't always better.

Small Language Models now rival giants while consuming a fraction of the resources. According to the NVIDIA study, Microsoft's Phi-2, with just 2.7 billion parameters, matches models roughly ten times larger on commonsense reasoning and code generation while running about 15× faster. Distilled DeepSeek-R1 variants outperform GPT-4o on reasoning tasks. Salesforce's specialized models beat frontier systems at practical applications such as tool calling.

The economics are stunning. By the study's estimate, serving a small model costs 10–30× less than serving a massive one. Development teams can fine-tune specialized variants overnight with a few GPU-hours rather than weeks of cluster time. Workloads that once required million-dollar data centers can now run on consumer devices. NVIDIA's Dynamo inference framework supports low-latency serving in both cloud and edge deployments. Parameter-efficient techniques like LoRA make model customization accessible to any development team.
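A rough sense of why LoRA-style customization is cheap comes from counting parameters. LoRA freezes a weight matrix of shape d × k and trains two low-rank factors of shapes d × r and r × k, so the trainable count drops from d·k to r·(d + k). The sketch below works through that arithmetic. The layer size and rank are illustrative assumptions, not figures from the NVIDIA study or from any particular model.

```python
def full_params(d: int, k: int) -> int:
    """Parameters in one dense d x k weight matrix."""
    return d * k


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters LoRA adds for that matrix at rank r.

    LoRA trains B (d x r) and A (r x k) while the original matrix stays frozen.
    """
    return r * (d + k)


# Assumed, illustrative dimensions: a 4096 x 4096 projection and rank 8.
d, k, r = 4096, 4096, 8
full = full_params(d, k)
lora = lora_trainable_params(d, k, r)
fraction = lora / full

print(f"full fine-tune: {full:,} params")   # 16,777,216
print(f"LoRA adapter:   {lora:,} params")   # 65,536
print(f"trainable share: {fraction:.2%}")   # 0.39%
```

Under these assumed dimensions the adapter trains well under one percent of the layer's parameters, which is the kind of reduction that makes overnight specialization plausible.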

The strategic implications are profound. AI will fragment into specialized components. Large models will handle the most complex reasoning when needed. Small models will manage everything else—email composition, calendar optimization, document analysis, content creation. Companies controlling local processing will capture increasing value as compute shifts from cloud to edge.
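The division of labor described above can be sketched as a small router that sends routine, scoped tasks to a local small model and escalates everything else to a large one. This is a minimal illustration under stated assumptions: the task labels, the `route` function, and the stub models are all hypothetical and do not correspond to any vendor's API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical labels for the routine, scoped work a small model would own.
ROUTINE_TASKS = {"email_draft", "calendar", "doc_summary", "content_draft"}


@dataclass
class Route:
    model: str   # "small" or "large"
    reply: str   # whatever the chosen model returned


def route(task_type: str, prompt: str,
          small: Callable[[str], str],
          large: Callable[[str], str]) -> Route:
    """Use the small model for routine tasks, the large model otherwise."""
    if task_type in ROUTINE_TASKS:
        return Route("small", small(prompt))
    return Route("large", large(prompt))


# Stub models standing in for real inference calls (assumptions only).
def small_stub(prompt: str) -> str:
    return f"[on-device] {prompt}"


def large_stub(prompt: str) -> str:
    return f"[cloud] {prompt}"


print(route("calendar", "Find a slot on Friday", small_stub, large_stub))
print(route("legal_analysis", "Compare these contracts", small_stub, large_stub))
```

A production router would likely decide on more than a task label, for example model confidence or latency budget, but the shape of the decision stays the same: default to the small model and escalate only when needed.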

As NVIDIA’s study emphasizes, most agentic tasks are repetitive and scoped, ideally suited for SLMs. We're witnessing the birth of truly personal AI. Not agents you visit in browsers, but cognitive partners living in your devices, learning your patterns, anticipating your needs. The transformation extends beyond agentic systems to reshape how we interact with all AI-powered applications.

II. Apple's Perfect Storm

This section applies NVIDIA’s thesis to the consumer archetype. Apple demonstrates how SLMs can be deployed across tightly integrated hardware, memory, and software ecosystems, making it the benchmark for consumer-facing predictive AI.

Apple didn't stumble into this opportunity. The company systematically built the ideal platform for the cognitive revolution through integrated hardware and software development spanning over a decade.

The Silicon Foundation

Apple's A- and M-series processors feature a unified memory architecture that shares a fast memory pool across the CPU, GPU, and Neural Engine. The design suits the low-latency inference that small cognitive models require. Apple controls both silicon and software, enabling optimization that no fragmented ecosystem can match.

The Neural Engine already powers sophisticated on-device learning across photography, voice recognition, and predictive text. Expanding to support 3–7B parameter cognitive models requires evolution, not revolution. Performance advantages emerge from tight integration that competitors cannot easily replicate through hardware partnerships.
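Whether a 3–7B parameter model fits on a phone is largely a matter of arithmetic: weight memory is roughly the parameter count times the bits per weight, divided by eight. A minimal sketch follows, assuming weights only (no KV cache, activations, or runtime overhead) and assuming common quantization widths. None of these figures describe Apple's actual models.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Illustrative model sizes and precisions (assumptions, not vendor specs).
for params in (3, 7):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{params}B model at {bits}-bit: ~{gb:.1f} GB of weights")
```

At 16-bit precision a 7B model needs about 14 GB for weights, while 4-bit quantization brings that to roughly 3.5 GB. That gap is why quantization matters so much for on-device deployment.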

The Memory Ecosystem

Apple operates something competitors lack: a complete memory ecosystem spanning device and cloud. Local memory maintains immediate awareness—active applications, current location, recent interactions. iCloud provides encrypted, persistent storage of behavioral patterns, communication histories, and personal preferences.

The combined architecture mirrors how human cognition works: working memory for immediate processing, long-term memory for continuity. Local memory enables instant responses. Cloud memory provides persistent learning across devices, contexts, and time periods. Cognitive agents can remember, evolve, and anticipate across extended relationships.
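The working-memory and long-term-memory split can be illustrated with a toy data structure: a small bounded buffer for recent events and a persistent tally for recurring patterns. The class and method names below are hypothetical, chosen only for illustration. This is not Apple's or iCloud's API, and a real system would add encryption, sync, and far richer representations.

```python
from collections import Counter, deque


class TwoTierMemory:
    """Toy model of a local working memory plus a persistent long-term store."""

    def __init__(self, working_size: int = 3) -> None:
        # Working memory: only the most recent events, oldest dropped first.
        self.working = deque(maxlen=working_size)
        # Long-term memory: counts of every event ever observed.
        self.long_term = Counter()

    def observe(self, event: str) -> None:
        """Record an event in both tiers."""
        self.working.append(event)
        self.long_term[event] += 1

    def recent(self) -> list[str]:
        """Immediate context, oldest to newest."""
        return list(self.working)

    def habitual(self, n: int = 1) -> list[str]:
        """The n most frequently observed events across all history."""
        return [event for event, _ in self.long_term.most_common(n)]


memory = TwoTierMemory(working_size=2)
for event in ["open_mail", "open_calendar", "open_mail", "open_maps"]:
    memory.observe(event)

print(memory.recent())     # ['open_mail', 'open_maps']
print(memory.habitual(1))  # ['open_mail']
```

The short buffer answers "what is happening right now", while the tally answers "what does this user usually do", which is the distinction the paragraph above draws.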

The Trust Advantage

"What happens on your iPhone, stays on your iPhone" messaging establishes consumer confidence essential for cognitive AI adoption. Predictive intelligence requires intimate personal data access—browsing patterns, communication habits, location histories, financial behaviors.

Apple alone commands widespread trust for such intimate AI integration. Competitors face skepticism about data collection motivations and privacy protection capabilities. Regulatory environments increasingly favor local processing over cloud-based data aggregation, strengthening Apple's competitive positioning.

Apple can activate cognitive agents across its installed base of more than a billion devices through software updates. Competitors must simultaneously manufacture hardware, build distribution, and establish consumer trust, a vastly more complex undertaking.

As NVIDIA’s study suggests, platforms that align hardware, memory, and trust are best suited to realize the SLM-first vision. Apple’s ecosystem makes it the consumer archetype for NVIDIA’s thesis in action.

III. Microsoft's Enterprise Vision

This section explores the enterprise archetype. Microsoft illustrates how SLM-first approaches extend NVIDIA’s thesis into business workflows through Windows and Office integration, emphasizing hybrid edge–cloud models and enterprise trust.

Microsoft pursues cognitive AI through business workflow integration rather than consumer device control. Windows' desktop dominance and the Office ecosystem create direct pathways for embedding predictive intelligence into professional environments, where adoption dynamics differ significantly from consumer markets.

Productivity Transformation

Copilot integration across Word, Excel, PowerPoint, and Outlook provides immediate deployment channels for cognitive assistance. Predictive document drafting leverages communication patterns and project contexts. Automated meeting preparation analyzes calendars and reviews relevant documents. Email composition draws on organizational knowledge and user preferences.

Windows-level integration extends cognitive capabilities across enterprise software environments. Professional workflows become anticipatory rather than reactive. Productivity software transforms from tools into cognitive partners understanding organizational contexts and individual work patterns.

Hybrid Intelligence Architecture

Microsoft's strength lies in balancing local efficiency with Azure cloud capabilities. Routine predictive tasks execute on Windows devices while complex reasoning draws on cloud infrastructure. This hybrid architecture optimizes costs while meeting enterprise security and compliance requirements that purely local solutions cannot address.

Local processing handles immediate operations—email filtering, calendar optimization, document formatting. Cloud processing manages sophisticated tasks—strategic analysis, regulatory compliance, advanced data synthesis. The division maximizes both cost efficiency and capability coverage across enterprise workflows.

Enterprise Trust Foundation

Microsoft benefits from decades of relationships with business customers and government agencies. While Apple leads consumer privacy narratives, Microsoft's regulatory credibility and enterprise security positioning provide different but equally valuable trust foundations for business-critical AI deployment.

Enterprise customers prioritize operational reliability and compliance over consumer privacy messaging. Microsoft's established track record with mission-critical systems enables faster cognitive AI adoption than consumer-focused alternatives requiring new trust-building efforts.

Microsoft demonstrates that the cognitive revolution extends beyond consumer devices into professional environments, where different trust models and deployment requirements create viable competitive alternatives. This aligns with NVIDIA’s thesis that modular SLM deployment can serve diverse contexts, not only consumer assistants.

IV. Google's Scale Paradox

This section examines the mobile archetype. Google shows how Android’s global reach and Tensor hardware could turn NVIDIA’s efficiency thesis into mass-market deployment, but faces challenges of trust and privacy.

Google commands global smartphone distribution through Android but faces a fundamental contradiction between cognitive AI requirements and an advertising-dependent business model. Android's share of over 70% of the global smartphone market provides massive deployment potential, while gaps in privacy positioning create significant adoption barriers.

Distribution Supremacy

Android's device diversity spans premium smartphones to budget handsets across emerging markets worldwide. SLM efficiency enables sophisticated AI capabilities on lower-powered hardware, potentially democratizing cognitive assistance beyond Apple's premium ecosystem constraints. Google reaches billions of users through Play Store distribution and Android system updates.

Device fragmentation that historically challenged Android development becomes advantageous for SLM deployment. Different hardware capabilities enable specialized model variants—powerful cognitive agents on flagship devices, efficient variants on budget hardware. Such flexibility could enable broader global adoption than Apple's uniform but expensive hardware requirements.
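Matching model variants to device capability can be as simple as picking the largest variant that fits within a memory budget. The sketch below assumes a hypothetical catalog of variants and a hypothetical rule that a model may use at most half of free memory. Neither reflects how Android or Google actually selects models.

```python
from typing import Optional

# Hypothetical catalog, largest first: (variant name, approx. weight memory in GB).
VARIANTS = [
    ("slm-7b-int4", 3.5),
    ("slm-3b-int4", 1.5),
    ("slm-1b-int4", 0.5),
]


def pick_variant(free_memory_gb: float, headroom: float = 0.5) -> Optional[str]:
    """Return the largest variant whose weights fit in the allowed budget.

    `headroom` is the assumed share of free memory the model may occupy.
    Returns None when even the smallest variant does not fit.
    """
    budget = free_memory_gb * headroom
    for name, size_gb in VARIANTS:
        if size_gb <= budget:
            return name
    return None


print(pick_variant(8.0))  # flagship-class device -> slm-7b-int4
print(pick_variant(4.0))  # mid-range device      -> slm-3b-int4
print(pick_variant(0.5))  # very constrained      -> None
```

In practice the selection would also weigh accelerator support, thermal limits, and battery, but a tiered catalog like this is one plausible way fragmentation turns into flexibility.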

Tensor Acceleration

Custom silicon development progresses rapidly through Tensor G-series processors emphasizing on-device AI acceleration. While current generations lag Apple's Neural Engine maturity, Google's iteration speed and AI model development leadership could close hardware gaps quickly.

Deep integration between Google's language models and Tensor optimization creates potential advantages in cognitive reasoning capabilities. Google's research consistently produces frontier models that could be distilled into highly capable SLMs optimized for Tensor hardware architecture.

The Privacy Contradiction

Google's advertising revenue depends on personal data collection and analysis, precisely the information streams cognitive agents require. Persistent cognitive monitoring systems need intimate access to browsing patterns, communication habits, location histories, and purchasing behaviors.

Consumer skepticism about Google's data practices could limit acceptance of always-on cognitive systems. Users increasingly distinguish between voluntary data sharing for services and continuous surveillance for advertising optimization. Cognitive agents requiring deep personal access may face resistance that fundamentally limits adoption potential.

Google possesses distribution scale and technical capabilities for global SLM deployment. Privacy credibility represents the primary barrier to competing with Apple's intimate cognitive positioning or Microsoft's enterprise trust advantages. This reinforces NVIDIA’s warning that operational suitability requires not only model efficiency but also alignment with user trust.

V. OpenAI's Strategic Crossroads

This section highlights the research archetype. OpenAI’s leadership in frontier models illustrates the innovation engine of the field, but its lack of device platforms and dependence on cloud deployment expose vulnerabilities within NVIDIA’s SLM-first framework.

OpenAI's proposed hardware strategy faces mounting challenges as SLM capabilities mature and demonstrate comparable performance at dramatically reduced costs. Cloud-dependent devices that carry ongoing inference expenses compete poorly against on-device alternatives offering similar capabilities at a fraction of the operational cost.

The Infrastructure Burden

Dedicated AI devices depending on continuous cloud access inherit significant limitations. Each interaction generates billable inference expenses that accumulate across device lifespans. Latency overhead degrades user experience compared to instant local processing.
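How per-interaction inference charges accumulate over a device's life is simple multiplication, sketched below. Every number here is a placeholder assumption chosen for illustration. None is an actual OpenAI price, usage figure, or device lifespan.

```python
def lifetime_cloud_cost(interactions_per_day: float,
                        cost_per_interaction_usd: float,
                        lifespan_years: float) -> float:
    """Total cloud inference spend for one device over its lifespan."""
    days = lifespan_years * 365
    return interactions_per_day * days * cost_per_interaction_usd


# Assumed inputs: 50 interactions a day, $0.002 each, over a 3-year lifespan.
per_device = lifetime_cloud_cost(50, 0.002, 3)
print(f"${per_device:.2f} per device")  # $109.50 per device
```

Under these assumptions a single device accrues about $110 in inference cost over three years, and the total scales linearly with the size of the fleet. An on-device model shifts that recurring expense to hardware the user has already paid for.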

Platform owners like Apple activate cognitive agents across billion-device installations through software updates—requiring no hardware manufacturing, distribution networks, or ongoing infrastructure investments. OpenAI must build entire hardware ecosystems while competitors leverage existing platforms for immediate deployment.

The Memory Gap

Persistent cognitive AI demands both immediate context awareness and longitudinal memory continuity spanning extended user relationships. Apple's local-cloud memory architecture provides both layers through established, trusted infrastructure users already accept.

OpenAI lacks a comparable distributed memory substrate, which limits its cognitive capabilities to session-based interactions that restart with each engagement. Without persistent memory, cognitive agents cannot build the understanding of user preferences, behavioral patterns, and evolving needs that enables truly predictive assistance.

Platform Dependencies

Hardware development requires partnerships with manufacturers, distributors, and support networks that dilute control over user experience and economic value capture. Third-party relationships subordinate OpenAI's strategic interests while competitors maintain direct user relationships through owned platforms.

Consumer device success demands retail presence, marketing capabilities, and customer support infrastructure that OpenAI must build from zero. Apple, Google, and Microsoft leverage existing consumer relationships and distribution channels.

OpenAI's frontier research capabilities remain valuable for advancing AI development. However, consumer deployment at scale requires platform assets and infrastructure the company currently lacks, making strategic positioning increasingly vulnerable without major pivots. NVIDIA’s study underscores this vulnerability: without efficient on-device deployment and persistent memory, OpenAI’s approach is structurally disadvantaged.

VI. The Coming Battle: 2025–2030

The cognitive revolution will unfold through distinct waves, each favoring different competitive strengths. Apple's integration advantages must be weighed against rivals' adaptation capabilities and alternative deployment pathways.

Phase 1: Foundation Building (2025–2026)

Apple deploys 3–7B parameter cognitive models through iOS and macOS, transforming Siri from a reactive assistant into a predictive partner. The models run locally on Neural Engine hardware, offering instant responses without cloud dependencies. Apple brands the evolution as Intelligence 2.0, emphasizing privacy and seamless integration.

Microsoft embeds similar capabilities into Windows and Office applications for enterprise productivity enhancement. Copilot becomes truly anticipatory, preparing documents before meetings, drafting emails before requests, coordinating schedules proactively.

Google accelerates Tensor development while constructing privacy frameworks balancing advertising revenue with cognitive AI requirements. Android's scale enables rapid deployment despite trust limitations.

OpenAI pursues strategic partnerships or hardware development to establish a consumer device presence. Its cloud-first approach faces mounting economic pressure as on-device alternatives demonstrate comparable capabilities.

Phase 2: Ecosystem Expansion (2027–2028)

Apple extends the App Store into a cognitive agent marketplace. Developers release specialized models for legal drafting, education, design, personal finance, and other domain-specific applications. iCloud ensures agents maintain user memory and preferences across devices, creating persistent, personalized intelligence that evolves with users.

Microsoft facilitates enterprise agent development through Azure integration and Windows platform access. Business-focused cognitive assistance accelerates through trusted enterprise channels.

Google leverages Android's openness for rapid agent proliferation while addressing privacy framework requirements. Distribution advantages enable global cognitive AI access despite trust limitations.

Phase 3: Cognitive Partnership (2029–2030)

Successful platforms transcend reactive assistance to achieve predictive cognitive partnership. Devices anticipate needs, forecast tasks, and coordinate applications seamlessly. The iPhone evolves from a communication tool into a cognitive companion integrated with the rhythms of daily life.

Competition centers on trust, memory architecture, and integration quality rather than raw model capabilities. Winners achieve persistent cognitive memory enabling agents that learn, remember, and predict across extended user relationships.

VII. Apple's Cognitive Future

Apple will redefine what devices can become. The iPhone transforms from communication tool to cognitive companion. The Mac evolves from productivity machine to creative partner. The Apple Watch becomes a health and wellness advisor that truly understands your body and goals.

The Predictive Operating System

By 2029, Apple's ecosystem becomes the world's first Predictive Operating System. Devices don't wait for commands—they anticipate needs. Your phone prepares your commute before you think about leaving. Your Mac queues relevant documents before meetings start. Your Watch suggests workouts based on stress patterns and schedule availability.

Apps coordinate seamlessly through a cognitive layer that understands your intentions across contexts. Calendar apps communicate with travel apps. Health apps inform productivity apps. Financial apps coordinate with shopping apps. The entire ecosystem works together as a unified cognitive partner.

Personal AI That Stays Personal

Apple's privacy-first architecture enables intimacy without surveillance. Cognitive processing happens locally on your devices. Personal patterns remain encrypted in your iCloud. No third parties access your behavioral data. No advertising algorithms monetize your private life.

Cognitive agents learn your communication style, work patterns, creative preferences, and life goals while keeping this knowledge completely private. They become extensions of your own thinking rather than external services monitoring your behavior.

The Developer Renaissance

Apple's cognitive marketplace will unleash unprecedented creativity. Developers will create specialized cognitive agents for every domain imaginable. Legal professionals get agents trained on case law and contract patterns. Musicians get creative partners understanding their artistic style. Students get tutors adapting to their learning preferences.

Each agent maintains persistent memory through iCloud while processing privately on Neural Engine hardware. Users build collections of cognitive partners specialized for different aspects of their lives while maintaining complete control over their data and preferences.

VIII. The Competition Responds

Apple's advantages are significant but not insurmountable. Google, Microsoft, and OpenAI each possess formidable capabilities that could reshape competitive dynamics.

Google's Distribution Power: Android reaches billions of devices globally. Tensor hardware accelerates rapidly. Google's AI research consistently produces breakthrough models. Privacy positioning remains the primary limitation for intimate cognitive applications.

Microsoft's Enterprise Dominance: Windows and Office provide immediate deployment channels for business cognitive assistance. Enterprise customers prioritize productivity over privacy narratives. Hybrid cloud architecture balances efficiency with security requirements.

OpenAI's Research Leadership: Frontier model capabilities drive industry advancement. Developer community strength creates ecosystem advantages. Platform dependencies limit consumer scaling without strategic partnerships or acquisitions.

Competitive success requires three elements: efficient local processing, persistent memory architecture, and user trust for cognitive partnership. Apple currently leads across all dimensions. Rivals possess resources and motivation to adapt quickly. NVIDIA’s thesis reminds us that efficiency and modularity, not size, will decide the winners.

IX. The Path Forward

The cognitive revolution is inevitable. NVIDIA's research validates technical feasibility. Economic advantages compel adoption. Consumer demand for predictive assistance grows as AI capabilities mature, though adoption hurdles remain and must be addressed with care.

Critical Success Factors:

Platform integration trumps standalone development. Companies lacking device control should pursue partnerships or focus on specialized applications. Consumer education and trust-building determine adoption speed more than technical capabilities.

Cognitive agents require intimate personal data access that many users currently resist. Successful deployment demands transparent consent frameworks, clear value propositions, and gradual capability introduction building confidence over time.

The Stakes:

Companies successfully navigating this transformation will define human-AI collaboration for the next decade. The cognitive revolution represents more than technological evolution—it signals computing platforms becoming thinking partners.

Apple's positioning provides significant advantages in capturing these opportunities. Unified memory architecture, iCloud integration, and privacy trust create competitive moats that rivals must overcome through different strategic pathways.

The Vision Realized:

By 2030, Apple's ecosystem becomes humanity's first Cognitive Operating System. Devices anticipate rather than react. Intelligence becomes ambient and personal. Privacy remains absolute. The iPhone evolves from tool to partner, from device to cognitive extension of human capability.

The SLM era rewards platforms, not just models. Apple starts ahead in the race to cognitive partnership. The future belongs to whoever best balances capability, trust, and seamless integration.

The cognitive revolution begins now.

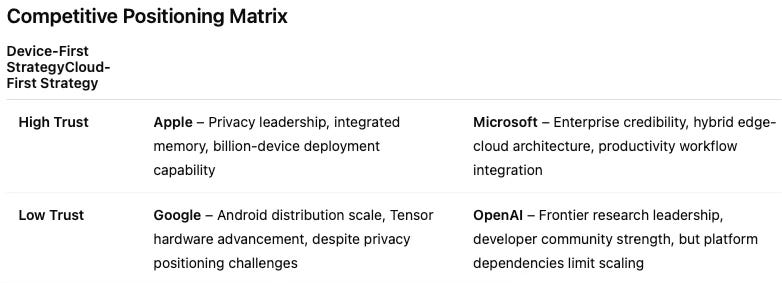

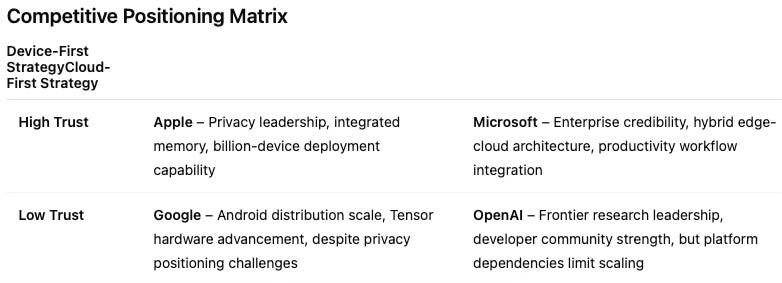

X. Archetype Summary Table

The table provides a synthesis of the four archetypes examined throughout the vision statement. By condensing Apple, Microsoft, Google, and OpenAI into a comparative framework, it highlights how NVIDIA’s SLM-first thesis manifests differently across consumer, enterprise, mobile, and research contexts. This summary allows readers to see at a glance the distinct strengths and challenges each competitor faces in the transition to predictive cognitive AI.

Apple exemplifies consumer deployment, Microsoft anchors enterprise transformation, Google represents mobile scale, and OpenAI drives research. Together they define the competitive landscape of the cognitive revolution.