MCAI Education Vision: Trust Before Harm, Proactive Digital Safety in Our Schools

A proposal for anticipatory student protection in the digital age

📱 The Moment Everything Changed

It was 2:30 AM when Sarah's phone buzzed. Her 14-year-old daughter Emma had finally worked up the courage to show her the messages: weeks of conversation with a "classmate" that began as friendly and grew steadily more personal, more isolating, and more manipulative. By the time Emma realized something was wrong, she felt trapped, ashamed, and convinced no one would believe her.

Emma's story isn't unique. It's happening in our community, in our schools, to our children. And our current approach to digital safety means we're always responding after the damage is done.

What if we could change that?

🌐 The Reality of Digital Childhood

The recent passage of the Take It Down Act is a step in the right direction. It creates a narrow exception to Section 230, requiring platforms to remove non-consensual explicit content, including content depicting minors, once it is reported. But its structure remains reactive: it depends on victims or families flagging content after harm has already occurred. Like other legal efforts, it punishes harm after the fact rather than empowering communities to prevent it. We need tools that allow us to anticipate and interrupt patterns of harm before they escalate.

Our children inhabit a world we're still learning to understand. They collaborate on Google Classroom, message friends on Discord, express themselves on TikTok, and navigate social dynamics that play out across multiple platforms simultaneously. These digital spaces aren't separate from their "real" lives—they are their real lives.

Yet our approach to protecting them online remains fundamentally reactive. We wait for reports. We respond to crises. We implement policies after problems have already caused harm. It is like trying to prevent car accidents by installing traffic lights only after crashes have occurred.

The consequences of this reactive approach are profound:

For students: By the time adults intervene, social damage has often become psychological damage. Screenshots have been shared. Reputations have been attacked. Trust has been broken. The harm has already reshaped how a child sees themselves and their place in their community.

For families: Parents feel helpless, simultaneously worried about their children's safety and uncertain about how much oversight is appropriate or even possible.

For educators: Teachers and counselors want to support struggling students but often don't know problems exist until they've escalated into crisis situations.

For our community: We're collectively failing to provide the kind of protective environment that allows young people to explore, learn, and grow safely in digital spaces.

🛡 A Different Approach: Anticipatory Protection

Important Note: MindCast AI (MCAI) is a proposed approach. The ideas presented in this vision statement are intended to open a collaborative conversation about what responsible, anticipatory student protection could look like—not to market or deploy a commercial solution.

Imagine if we could identify concerning patterns before they became crises. Not by reading private messages or surveilling student conversations, but by recognizing the early warning signals that suggest a child might be in danger or distress.

This is the promise of proactive digital safety: using advanced pattern recognition to spot manipulation, grooming, cyberbullying, and emotional distress in their early stages, when intervention can prevent rather than merely respond to harm.

Think of it as creating a digital immune system for our school community—one that can recognize threats and mobilize protective responses before damage occurs.

🛠️ How MindCast AI Could Support Student Protection

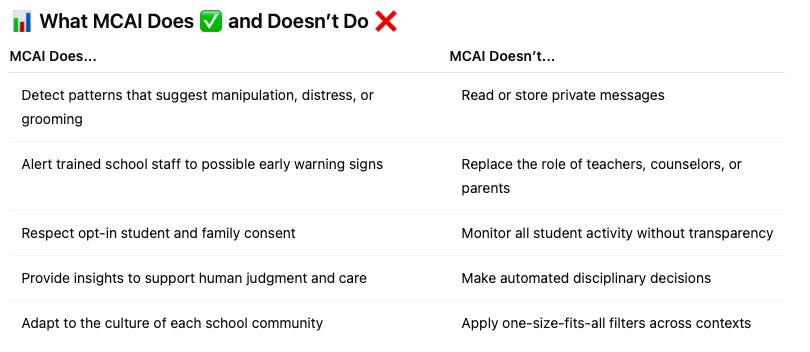

MindCast AI (MCAI) represents a new approach to digital safety built on three core principles:

1. Pattern Recognition Over Content Surveillance

Rather than having staff read messages, MCAI analyzes communication patterns automatically. It learns what healthy peer interactions look like and flags deviations that might indicate problems:

An adult account exhibiting linguistic patterns inconsistent with typical student communication

Gradual shifts in conversation tone that suggest manipulation or grooming

Changes in a student's digital behavior that might indicate emotional distress
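To make "learns what healthy peer interactions look like and flags deviations" more concrete, here is a minimal sketch under stated assumptions. It assumes the system sees only a per-student daily metadata count, such as messages sent, and never message text. The function name `flag_deviation`, the choice of feature, the 3-standard-deviation threshold, and the spread floor are all hypothetical illustrations, not part of any MCAI specification. A real system would combine many signals and would need validation before use.

```python
from statistics import mean, stdev


def flag_deviation(
    history: list[float],
    today: float,
    threshold: float = 3.0,
    min_spread: float = 1.0,
) -> bool:
    """Flag a day whose metadata count is far from this student's own baseline.

    `history` holds one hypothetical count per past day (e.g. messages sent);
    no message text is involved. A day is flagged when it lies more than
    `threshold` standard deviations from the historical mean.
    """
    if len(history) < 2:
        # Too little history to estimate a baseline, so never flag.
        return False
    baseline = mean(history)
    # A floor on the spread avoids dividing by zero and keeps a very steady
    # student from being flagged for a tiny, ordinary change.
    spread = max(stdev(history), min_spread)
    z_score = abs(today - baseline) / spread
    return z_score > threshold
```

A sharp drop in activity, such as `flag_deviation([20, 22, 19, 21, 20, 23, 18], 2)`, returns `True`. An ordinary day of `21` returns `False`. Comparing each student with their own baseline, rather than with a school-wide average, is one way to avoid flagging students who are simply quieter than their peers. Any flag would still go to a person for review.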

2. Early Warning Over Crisis Response

The system provides alerts when concerning patterns emerge, not after harm has occurred:

A counselor receives an early alert that a student's communication patterns suggest depression or anxiety

Administrators are notified when interactions across platforms suggest coordinated harassment

Teachers become aware that a student might be masking distress behind seemingly normal behavior

3. Human Judgment Enhanced by Technology

MCAI doesn't make decisions—it provides information to caring adults who do:

School counselors use pattern insights to guide supportive conversations

Teachers gain awareness of students who might need additional attention

Administrators can intervene in developing situations before they require disciplinary action

🔍 Real-World Impact: What Protection Could Look Like

Scenario 1: Preventing Predatory Behavior. An adult creates an account claiming to be a student and begins messaging several children. While individual conversations seem innocent, MCAI detects linguistic patterns inconsistent with adolescent communication and behavioral anomalies across multiple interactions. School administrators are alerted within hours, not weeks.

Scenario 2: Supporting Students in Crisis. A student's digital communication patterns begin showing signs of depression and social withdrawal. Instead of waiting for grades to drop or a crisis to occur, a counselor receives an early alert and can reach out with support resources and caring intervention.

Scenario 3: Stopping Cyberbullying Campaigns. What appears to be isolated teasing across different platforms is actually a coordinated harassment campaign. MCAI identifies the pattern and scope of the targeting, allowing administrators to address the full situation rather than just individual incidents.
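Scenario 3 depends on aggregation: individually minor incidents only look coordinated when viewed together. The minimal sketch below assumes incidents have already been flagged and recorded under pseudonymous IDs. It groups them by target and reports targets reached by several distinct senders on more than one platform within a recent window. The `Incident` record, the platform labels, the 7-day window, and the thresholds of 3 senders and 2 platforms are hypothetical choices for illustration, not MCAI parameters.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    """A hypothetical record of one already-flagged negative interaction."""
    target: str    # pseudonymous ID of the student being targeted
    sender: str    # pseudonymous ID of the sender
    platform: str  # e.g. "classroom" or "forum"
    when: datetime


def coordinated_targets(
    incidents: list[Incident],
    now: datetime,
    window: timedelta = timedelta(days=7),
    min_senders: int = 3,
    min_platforms: int = 2,
) -> list[str]:
    """Return targets hit by several distinct senders across several
    platforms within the recent window, which may indicate a campaign."""
    senders = defaultdict(set)
    platforms = defaultdict(set)
    for inc in incidents:
        # Ignore anything older than the window.
        if now - inc.when <= window:
            senders[inc.target].add(inc.sender)
            platforms[inc.target].add(inc.platform)
    return sorted(
        t for t in senders
        if len(senders[t]) >= min_senders and len(platforms[t]) >= min_platforms
    )
```

With the defaults, a student targeted by three different accounts across a classroom tool and a forum in the same week would be surfaced. Three messages from one account on one platform would not. The output is a list for an administrator to review, not a finding.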

⚖️ Addressing Essential Concerns

Privacy and Student Rights

Any implementation of proactive digital safety must begin with ironclad privacy protections:

Data Minimization: The system analyzes patterns, not content. No messages are stored or read by humans unless a serious safety concern is identified.

Consent-Based Participation: Families opt into enhanced protection rather than being automatically enrolled. Students and parents understand exactly what data is analyzed and how.

Transparent Operations: Regular community reporting on how the system is used, what alerts are generated, and how they're handled.

Independent Oversight: External auditing to ensure the technology serves only safety purposes and doesn't expand beyond its protective mission.

Accuracy and False Positives

No automated system is perfect, and false positives are inevitable:

Human-Centered Decision Making: All alerts require human review and judgment. Technology informs, humans decide.

Learning and Improvement: The system improves over time as it learns from our specific school community's communication patterns.

Appeals and Corrections: Clear processes for students and families to address any misunderstandings or errors.

Proportional Response: Early alerts lead to caring conversations, not punitive actions.
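The principle that technology informs and humans decide can be enforced in software as well as in policy. Here is a minimal sketch, assuming a hypothetical `Alert` object with a small set of states. New alerts start in a pending-review state. Every change of state requires a named person and must follow an allowed transition, including an appeal path back to review. The state names, the transition table, and the `transition` method are illustrative assumptions, not a defined MCAI workflow.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING_REVIEW = "pending_review"  # raised by the system; no action yet
    CONFIRMED = "confirmed"            # a person judged it a real concern
    DISMISSED = "dismissed"            # a person judged it a false positive
    UNDER_APPEAL = "under_appeal"      # a student or family contested it


# Allowed transitions between states. New alerts start in PENDING_REVIEW by
# default, and every later move goes through `transition`.
ALLOWED = {
    Status.PENDING_REVIEW: {Status.CONFIRMED, Status.DISMISSED},
    Status.CONFIRMED: {Status.UNDER_APPEAL},
    Status.UNDER_APPEAL: {Status.CONFIRMED, Status.DISMISSED},
    Status.DISMISSED: set(),
}


@dataclass
class Alert:
    student_id: str
    reason: str
    status: Status = Status.PENDING_REVIEW
    history: list[tuple[str, Status]] = field(default_factory=list)

    def transition(self, new_status: Status, actor: str) -> None:
        """Move the alert to `new_status`, recording who made the call."""
        if not actor:
            raise PermissionError("a named person must make every transition")
        if new_status not in ALLOWED[self.status]:
            raise ValueError(
                f"cannot go from {self.status.name} to {new_status.name}"
            )
        self.history.append((actor, new_status))
        self.status = new_status
```

Keeping this rule in code means a dismissed false positive cannot quietly turn into a disciplinary record. The `history` list also gives independent auditors a concrete trail to review.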

Educational Values and Student Expression

Digital safety technology must support, not undermine, the educational environment:

Encouraging Healthy Communication: By reducing risks, we create space for more authentic and creative student expression.

Teaching Digital Citizenship: The system becomes a tool for helping students understand healthy vs. concerning online interactions.

Preserving Learning Opportunities: Protection enables the kind of risk-taking and exploration essential to education.

🏫 Implementation: A Community-Centered Approach

Phase 1: Community Engagement (Months 1-3)

Before any technology is implemented, we engage in comprehensive community dialogue:

Parent forums to understand concerns and priorities

Student focus groups to hear directly from those most affected

Educator workshops to align safety goals with educational values

Community input on privacy standards and oversight structures

Phase 2: Volunteer Pilot (Months 4-9)

Implementation begins with families who choose to participate:

200-300 volunteer student participants across multiple grade levels

Focus on school-managed platforms initially (Google Classroom, student forums)

Intensive monitoring of system performance and community feedback

Regular community reporting on results and challenges

Phase 3: Evaluation and Decision (Months 10-12)

Comprehensive assessment determines whether to expand, modify, or discontinue:

Independent evaluation by digital safety and education researchers

Student, parent, and educator feedback analysis

Cost-benefit assessment compared to current safety approaches

Community decision on long-term implementation

💸 The Economics of Proactive Safety

Annual Cost: Approximately $8-12 per student

Comparison: Less than a month of many families' streaming service subscriptions

Value: Potential prevention of situations that currently require expensive crisis counseling, legal intervention, and long-term therapeutic support

Return on Investment: The human costs of digital harm—damaged self-esteem, social isolation, academic disruption, family stress—far exceed the financial investment in prevention.
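For a sense of scale, the $8-12 per-student estimate can be applied to the 200-300 student pilot described in Phase 2. This worked estimate assumes the full annual rate applies. It covers only the per-student fee, not any fixed setup or evaluation costs.

```latex
\begin{aligned}
\text{Low end:}  \quad & 200 \text{ students} \times \$8  = \$1{,}600 \text{ per year} \\
\text{High end:} \quad & 300 \text{ students} \times \$12 = \$3{,}600 \text{ per year}
\end{aligned}
```

Pricing might instead be prorated over the roughly six-month pilot (Months 4-9), which is itself an assumption. In that case the cost would be about half: roughly $800 to $1,800.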

🌱 Beyond Technology: Building a Culture of Digital Care

MCAI is not a silver bullet. Technology alone cannot create the kind of supportive, protective community our children deserve. But it can be a powerful tool within a broader approach to digital wellness that includes:

Digital Citizenship Education: Teaching students to recognize manipulation, practice healthy communication, and support peers in distress.

Parent Education: Helping families understand digital risks and develop appropriate boundaries and communication strategies.

Educator Training: Ensuring teachers and counselors can effectively respond to digital safety concerns with both technological insights and human wisdom.

Community Standards: Developing shared expectations for how we treat each other in digital spaces.

🚨 The Stakes: Why This Matters Now

We are raising the first generation of truly digital natives in an environment where the adults charged with protecting them are still learning the landscape. The cost of our learning curve is measured in damaged childhoods, disrupted educations, and fractured family relationships.

Meanwhile, threats are evolving faster than our protective responses:

Artificial intelligence makes sophisticated manipulation easier to scale

Social media algorithms can amplify harassment campaigns

Anonymous platforms create new opportunities for predatory behavior

Digital harm leaves lasting psychological imprints that affect academic performance, social development, and mental health

We cannot afford to remain reactive when proactive protection is within reach.

✨ A Vision for Digital Childhood

Imagine a school community where:

Children can explore digital creativity without fear of harassment or manipulation

Parents feel confident their children are protected without being surveilled

Educators can focus on teaching rather than crisis management

Technology serves human flourishing rather than creating new vulnerabilities

This vision requires courage: the courage to try new approaches, to learn from mistakes, to prioritize protection over convenience, and to center children's wellbeing in our decision-making.

It also requires wisdom: the wisdom to implement powerful technologies thoughtfully, to preserve the values that make education transformative, and to remain accountable to the families we serve.

🔎 The Choice Before Us

We can continue responding to digital harm after it occurs, accepting the inevitable damage to children who deserve better protection. Or we can pioneer a new approach that puts trust before harm, prevention before crisis, and children's wellbeing before technological convenience.

The choice isn't whether our children will inhabit digital spaces—they already do. The choice is whether we'll develop the tools and wisdom to make those spaces as safe as the physical environments we work so hard to protect.

Our children are growing up in a world we're still learning to navigate. We owe it to them to navigate it thoughtfully, with their safety and flourishing as our highest priority.

The technology exists. The need is urgent. The only question is whether we have the collective will to act before more children are harmed by threats we had the power to prevent.

This vision statement represents the beginning of a community conversation, not the end. The most important part isn't the technology—it's the commitment to putting our children's safety and wellbeing first in our rapidly evolving digital world.