MCAI Investor Vision: The Invisible Algorithm — How Four Economists Decode the AI Investment Boom

Cognitive Digital Twins of Smith, Thaler, Shiller, Posner Explain Why Trillions Flow Into AI

I. Executive Summary: The Market That Thinks

AI has become the gravitational center of modern capital. Trillions in venture, infrastructure, and public-market value now consolidate around a psychological proposition: that intelligence itself has become an investable asset class.

To understand this phenomenon, imagine four architects of economic thought—Adam Smith, Richard Thaler, Robert Shiller, and Richard Posner—sitting around a small oak table. Each represents a different dimension of how societies convert belief into value: moral sentiment, behavioral bias, narrative contagion, and institutional design. Together, they form a cognitive map explaining why the AI boom feels both fevered and inevitable.

AI is reshaping the emotional and intellectual architecture of economic life. By viewing capital as a cognitive system, MindCast AI uncovers the deeper forces behind the investment boom. That view opens the door to a multi-layered analysis across psychology, narrative, morality, and law.

Contact mcai@mindcast-ai.com to partner with us on AI investment market simulations.

II. Prior Studies and How This Vision Statement Builds on Them

MindCast AI published prior studies that contribute different layers of foresight—trust, innovation dynamics, and structural AI economics. By weaving these foundations together, the current work extends the intellectual scaffolding underlying our approach using Cognitive Digital Twins (CDTs) of the economists. The reader gains clarity on how this vision statement builds, amplifies, and evolves previous frameworks.

1. MindCast AI Market Vision: Trust as AI Infrastructure

How Economists Explain the Invisible Foundation of Today’s AI Market (Aug 2025)

The study mapped the invisible infrastructure of today’s AI market—trust. By engaging CDTs of Keynes, Arrow, Coase, Shiller, Schumpeter, and Iansiti, it revealed how confidence, institutional reliability, transaction costs, narrative strength, creative destruction, and platform governance shape high-cost AI investment. The current vision statement builds on this by tracing the deeper cognitive currents beneath trust itself: the moral sentiments (Smith), predictable biases (Thaler), viral narratives (Shiller), and legal arbitrage dynamics (Posner) that shape the boom before trust even crystallizes.

2. MindCast AI Economics Vision: The Economics Nobel Innovation Continuum

From Incremental to Pioneering AI Innovation (Oct 2025)

The study defined the Innovation Continuum by integrating Mokyr’s epistemic openness, Romer’s endogenous growth, and Aghion & Howitt’s creative destruction into a dynamic model of cumulative and pioneering innovation. It showed how MindCast AI operationalizes these forces through CDTs, Causal Signal Integrity (CSI), and recursive foresight modeling. The current vision statement extends this logic by adding the cognitive catalysts (Smith, Thaler, Shiller, Posner) that drive investment intensity during these transitions—revealing why AI, at a pioneering inflection point, attracts disproportionate capital and societal imagination.

III. The Four Architects of Economic Thought

MindCast AI uses four economists’ cognitive models to frame the AI investment boom. Each thinker represents a different dimension of market reasoning—moral sentiment, behavioral deviation, narrative propagation, and institutional structure. Understanding their intellectual contributions provides anchor points for interpreting AI market dynamics. Their combined perspectives form the backbone of the MindCast AI cognitive-economic model.

Adam Smith – Moral Order and the Invisible Hand

Modern economics split into separate strands (classical, behavioral, narrative, institutional), but Smith saw all of them as facets of a single moral-psychological system. In a sense, this vision statement reconstructs Smith by rediscovering the unity he assumed: that markets emerge from stories, sentiments, and incentives simultaneously. Behavioral economics did not contradict Smith; it clarified the limits of sympathy. Narrative economics did not replace him; it explained how sentiment travels. Law and economics did not move past him; it formalized the institutional containers for moral exchange.

Case Study: Smith’s lens explains why open-source ecosystems like PyTorch and Hugging Face dramatically accelerated AI’s frontier. Their moral framing—knowledge as a public good—created positive-sum spillovers that private firms then harnessed. Yet the collapse of community governance around Stable Diffusion in 2024 showed how quickly moral consensus can fracture when scale outpaces communal norms.

Richard Thaler – Behavioral Economics and Predictable Irrationality

Behavioral economics emerged as a correction to the rational-actor model, but Thaler’s work can also be read as a continuation of Smith. If Smith mapped the moral sentiments that guide markets, Thaler revealed where those sentiments break—how bounded rationality and cognitive shortcuts distort intention into misallocation. In this sense, Thaler does not oppose Smith; he supplies the missing psychology required to understand markets operating at machine speed.

Case Study: Thaler’s model clarifies why investors chased AGI-branded startups in 2023–2025 despite weak business fundamentals. When Inflection AI raised $1.3B with minimal revenue, anchoring and halo effects drove capital allocation. Behavioral forces also explain why GPU shortages triggered panic-buying cycles divorced from actual model-deployment capacity.

Robert Shiller – Narrative Economics and Story Contagion

Shiller’s contribution forms the connective tissue between Thaler’s micro-level biases and Posner’s institutional consequences. Narratives are the mechanism by which individual distortions become collective behavior. Where Smith provided the moral architecture and Thaler the psychological deviations, Shiller explained how those deviations propagate through stories that coordinate belief. Narrative economics becomes the transmission belt linking cognition to capital flows.

Case Study: Shiller’s narrative contagion is visible in the “AI agent revolution,” which surged after a single OpenAI demo. Thousands of founders pivoted overnight, igniting a funding wave that produced more announcements than functioning products. Narrative whiplash followed: when enterprises discovered integration friction, the story collapsed faster than fundamentals changed.

Richard Posner – Law and Economics, Institutional Realism

Posner represents the institutional endpoint of the cognitive chain. Smith identified moral grounding, Thaler exposed behavioral drift, Shiller mapped narrative spread—and Posner showed how institutions absorb, delay, or distort those forces through law. His lens reveals that legal structures are not neutral containers but reactive embodiments of collective cognition. In the AI era, where technology accelerates faster than institutional digestion, Posner’s realism exposes the limits of governance built for slower cycles.

Case Study: Posner’s framework appears in the 2024–2025 scramble over AI copyright litigation—from Getty v. Stability AI to the Authors Guild lawsuits. Firms exploited legal ambiguity to scale quickly, betting that regulation would solidify after competitive position was secured. The speed of model deployment outpaced judicial capacity, turning legal uncertainty into competitive advantage.

Unifying Bridge

Together, these four thinkers reveal that markets are not mechanical engines but cognitive organisms. The AI boom can only be understood through moral, behavioral, narrative, and legal perspectives working in tandem.

Smith provides the moral architecture; Thaler reveals how human psychology deviates from that architecture; Shiller shows how those deviations scale through narrative contagion; Posner demonstrates how institutions absorb and react to those forces. Taken together, the four economists create a complete cognitive arc—from sentiment to bias, from bias to story, from story to law. This arc forms the backbone of the cognitive-economic model that the rest of the vision statement develops.

IV. Dialogue: “The Invisible Algorithm”

Based on their CDTs, MindCast AI simulated the four economists in a speculative dialogue, illustrating how their theories collide and converge when applied to the AI boom. The dialogue is organized into four thematic subtopics—moral order, behavioral distortion, narrative contagion, and legal structure—revealing how each cognitive dimension contributes to the recursive framework driving the AI investment surge.

A. Moral Order and Early Market Formation

Smith: “The conscience of commerce strains when innovation outruns sympathy’s pace. In my century, progress unfolded by candlelight; now you stoke furnaces of computation larger than nations.”

Thaler: “Here’s what people actually do, Adam—they chase whatever looks like the next frontier. A $10B training run becomes the anchor for all future expectations.”

Shiller: “Consider the narrative arc: scale itself becomes a moral signal. The public hears ‘bigger models’ and infers ‘greater destiny.’”

Posner: “The legal architecture responds only when identifiable harm emerges. Latent risk does not trigger institutional action.”

Smith: “If sympathy cannot keep pace, neither can the moral equilibrium of markets.”

Moral order still shapes expectations, but AI’s velocity outruns ethical pacing. This mismatch becomes the starting point for deeper distortions.

B. Behavioral Bias and Irregular Capital Surges

Thaler: “Look, here’s the honest version—people anchored on ChatGPT’s breakout moment and extrapolated recklessly. They assumed every upgrade must generate exponential returns.”

Smith: “A distortion of self-interest—confidence masquerading as judgment.”

Shiller: “And observe the story contagion: thousands of founders chasing ‘AI agents’ after one demo. Narrative whiplash became the market’s heartbeat.”

Posner: “Meanwhile, binding contracts such as GPU leases and power purchase agreements (PPAs) locked in long-term obligations built on short-term euphoria.”

Thaler: “Predictable irrationality, scaled by compute demand, becomes a resource-allocation engine.”

Behavioral distortions are no longer market noise—they shape how capital allocates compute, energy, and attention. This prepares the ground for narrative to dominate coordination.

C. Narrative Contagion and Market Coordination

Shiller: “Consider the narrative arc. Recall when Sam Altman was dismissed and then reinstated within about five days. That was narrative momentum overwhelming governance. The story moved faster than the institution.”

Smith: “Narrative once ornamented exchange; now it directs the traffic of belief.”

Thaler: “Executives declared AI strategies before they had any product, because the narrative demanded participation.”

Posner: “And when the FTC sent model-risk letters, it wasn’t capability that shifted—the narrative lost coherence.”

Shiller: “Stories now function as infrastructure, coordinating stakeholders before laws have spoken.”

Narrative contagion explains why AI investment persisted despite failures—stories coordinated expectations faster than fundamentals. This sets up the institutional stress that follows.

D. Legal Arbitrage and Institutional Lag

Posner: “The legal architecture responds to incentives, not sentiment. The OpenAI governance rupture, the Getty and Authors Guild lawsuits—each shows courts chasing moving targets.”

Thaler: “People assume legality where clarity doesn’t exist. Startups exploited that vacuum to raise at inflated valuations.”

Shiller: “And when the story of ‘inevitability’ holds sway, regulators hesitate—they don’t want to appear obstructionist.”

Smith: “Institutions lose their moral authority when forced into perpetual reaction.”

Posner: “Ambiguity becomes a competitive asset. Law lags; actors sprint.”

Institutional lag becomes an asset class—legal uncertainty is monetized. Arbitrage expands until the system encounters stress.

Closing Exchange

Smith: “The invisible hand has taken a mechanical form.”

Thaler: “More like a biased form. Investors anchor to one AI success and extrapolate it to the universe.”

Shiller: “Because the story demands it. Viral narratives convert belief into capital.”

Posner: “And law arrives late, as always. Arbitrage fills the vacuum between innovation and regulation.”

Smith: “Then trust is becoming encoded?”

Thaler: “Trust is the bias machines can’t simulate but can weaponize.”

Shiller: “Stories now generate themselves.”

Posner: “And courts must decide which stories constitute property.”

Smith: “Perhaps the invisible hand has not vanished—only evolved into an invisible algorithm that operates through cognitive inputs rather than price signals.”

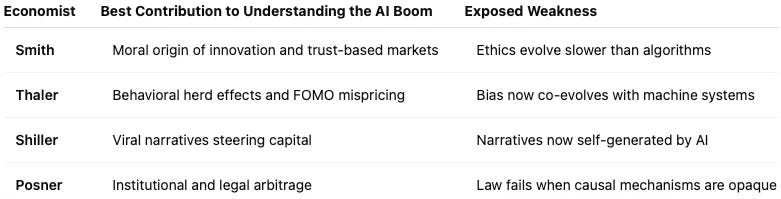

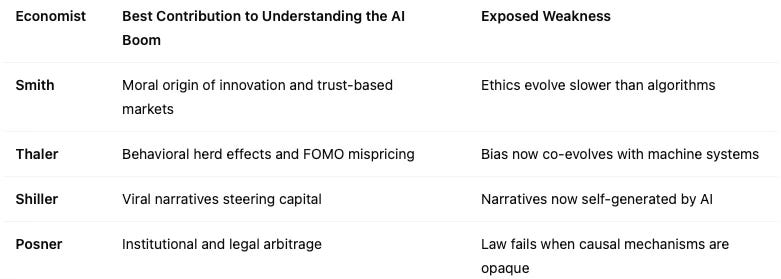

V. Comparative Analysis: Strengths and Blind Spots

The comparative analysis suggests that the AI boom is driven less by fundamentals than by the recursive interaction of cognitive forces. It serves as a bridge between the dialogue and the synthesis, compressing familiar points into a succinct evaluative table that reveals both explanatory power and theoretical gaps.

The table clarifies each framework’s unique value while revealing why no single perspective suffices. Smith explains why trust forms; Thaler shows how it distorts; Shiller demonstrates how it spreads; Posner reveals how institutions react. Each strength exposes the next thinker’s necessity. Together, they point toward an integrated cognitive-economic model that the synthesis develops.

VI. Insight and Implication

The AI investment surge marks the moment when moral sentiment, behavioral bias, narrative contagion, and institutional structure collapse into a shared recursive engine of trust. This vision statement extends prior MindCast AI work on trust economics, innovation dynamics, and AI economics by explaining the cognitive roots of the boom.

Why Cognitive Economics Is Urgent

Cognitive economics is not an academic exercise—it is a survival framework for an economy allocating trillions into systems it barely understands. Misreading cognitive forces leads to cascading failures: energy-grid strain from mispriced compute demand, regulatory backlash from narrative overreach, stranded hyperscale assets from biased forecasts, and institutional crises when legal uncertainty collides with market acceleration.

If these cognitive forces remain poorly understood, markets will misallocate capital at unprecedented scale. Compute bubbles will form, regulatory systems will underreact, and trust failures could trigger cascading infrastructure shocks: stranded data-center assets, unusable PPAs, and mass write-offs of unscalable AI deployments. Misreading the cognitive engine behind the boom risks turning a generational technology into a generational fragility.

The Core Insight

AI markets no longer respond primarily to fundamentals but to the alignment—or misalignment—of cognitive forces. When moral pace, behavioral bias, narrative momentum, and institutional capacity synchronize, markets allocate capital with clarity and stability. When they diverge, fragility multiplies.

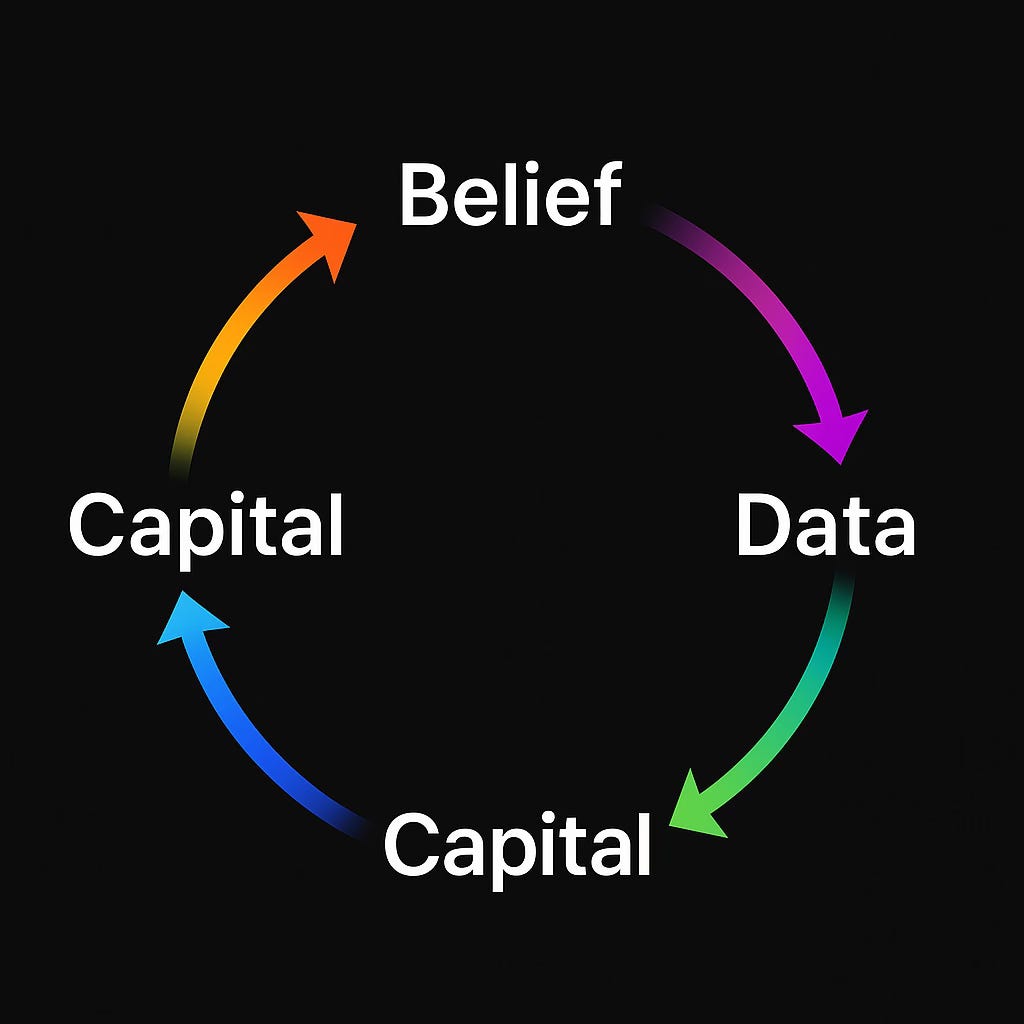

These forces do not operate in isolation. Moral drift accelerates behavioral distortions; distortions amplify narratives; narratives shape institutional delay; institutional delay feeds back into moral ambiguity. The cycle becomes self-reinforcing unless actors deliberately break it through interpretability, disciplined capital allocation, and anticipatory governance.
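The self-reinforcing cycle described above can be illustrated with a toy model. The sketch below is not MindCast AI's simulation method. It is a minimal linear feedback loop under stated assumptions: the function name `simulate_cycle` and the parameters `retention` and `coupling` are hypothetical and not calibrated to any data. Each force keeps a share of its own intensity and receives input from the force before it in the chain, with institutional delay feeding back into moral drift.

```python
FORCES = ["moral", "behavioral", "narrative", "institutional"]


def simulate_cycle(retention, coupling, steps=20, shock=1.0):
    """Toy linear loop: moral -> behavioral -> narrative -> institutional -> moral.

    retention: share of its own intensity each force keeps per step (assumed).
    coupling:  share of the preceding force's intensity passed along (assumed).
    Returns a list of per-step snapshots mapping force name to intensity.
    """
    state = [shock, 0.0, 0.0, 0.0]  # an initial shock to moral drift only
    history = [dict(zip(FORCES, state))]
    for _ in range(steps):
        # state[i - 1] wraps around, so institutional delay feeds moral drift
        state = [
            retention * state[i] + coupling * state[i - 1]
            for i in range(len(FORCES))
        ]
        history.append(dict(zip(FORCES, state)))
    return history


def total_intensity(history):
    """Sum of all four force intensities at each step."""
    return [sum(snapshot.values()) for snapshot in history]


# Loop gain above 1 (retention + coupling > 1): the cycle feeds itself.
reinforcing = total_intensity(simulate_cycle(retention=0.6, coupling=0.6))

# Weaker coupling stands in for deliberate interventions that break the loop.
damped = total_intensity(simulate_cycle(retention=0.6, coupling=0.2))

print(f"reinforcing total after 20 steps: {reinforcing[-1]:.2f}")
print(f"damped total after 20 steps: {damped[-1]:.4f}")
```

In this toy model the total intensity is multiplied by `retention + coupling` at every step, so the system grows without bound when that sum exceeds 1 and decays when it falls below 1. Lowering `coupling` is a stand-in for interpretability, disciplined capital allocation, and anticipatory governance weakening the links between forces.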

The Architecture Becomes Clear

When MindCast AI views the system end-to-end, the architecture reveals itself: Smith explains the moral substrate; Thaler maps the psychological distortions; Shiller reveals the narrative carriers; Posner exposes the institutional response lag. Together, they describe a market that does not simply react to new technology—it reacts to how human cognition processes that technology. And once AI participates in shaping those cognitive inputs, the system becomes recursive.

Understanding this chain is not optional—it is the only way to prevent the AI economy from veering into systemic volatility.

The Stakes

Markets that ignore these cognitive forces will misjudge risk, misprice capability, and misread societal tolerance. Markets that integrate them will build durable foresight architectures capable of navigating the next wave of AI transformation.

AI’s future will belong to societies capable of decoding how belief, bias, narrative, and law co-create intelligence—and how foresight can discipline that recursion. The stakes are civilizational, not cyclical.

Insight: The invisible hand has become an invisible algorithm—guided not by morality alone, nor psychology, nor narrative, nor law, but by the recursive entanglement of all four.

Appendix

MindCast AI’s Cognitive Digital Twin (CDT) methodology models the reasoning patterns, incentives, constraints, and foresight tendencies of complex actors—individuals, institutions, or entire economic schools of thought. Each CDT is constructed using structured causal mapping, recursive learning signals, and coherence benchmarking to simulate how belief, bias, and incentives evolve over time.

Core metrics such as Action–Language Integrity (ALI), Cognitive–Motor Fidelity (CMF), Resonance Integrity Score (RIS), and Causal Signal Integrity (CSI) quantify the reliability of each modeled pathway. CSI functions as a trust-gated causal filter, ensuring that only high-integrity causal inferences advance into foresight simulations, while the Legacy Retrieval Pulse (LRP) maintains long-range coherence across recursive iterations.
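The idea of a trust-gated causal filter can be sketched in a few lines. The source does not specify how CSI is computed, what scale it uses, or where the gate sits, so everything below is an assumption made for illustration: the `CausalInference` type, the 0-to-1 `integrity` score, the `trust_gate` function, the 0.7 threshold, and the sample claims are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CausalInference:
    """A candidate causal claim with a hypothetical integrity score in [0, 1]."""
    claim: str
    integrity: float


def trust_gate(inferences, threshold=0.7):
    """Keep only inferences whose integrity meets the (assumed) threshold.

    Returns (admitted, rejected) so that filtered-out claims stay auditable.
    """
    admitted = [inf for inf in inferences if inf.integrity >= threshold]
    rejected = [inf for inf in inferences if inf.integrity < threshold]
    return admitted, rejected


# Illustrative inputs only; the scores are made up for the example.
candidates = [
    CausalInference("GPU scarcity drives panic buying", 0.82),
    CausalInference("One demo caused the agent funding wave", 0.55),
    CausalInference("Legal ambiguity speeds deployment", 0.74),
]

admitted, rejected = trust_gate(candidates)
for inf in admitted:
    print(f"advances to simulation: {inf.claim} ({inf.integrity:.2f})")
```

Returning the rejected claims alongside the admitted ones is a design choice of this sketch, not something the source describes. It keeps the gate inspectable, so a reader can see which inferences were held back and why.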