MCAI Culture Vision: Discretion and the Architecture of Systemic Trust

How Public and Private Systems Lose Legitimacy Within the Boundaries of Legality

I. Executive Summary

In every system—public or private—trust decays long before legality fails.

The MCAI Culture Vision series began with Power Integrity, which modeled how coercive systems distort cognition, and Public Trust, which mapped how that distortion erodes legitimacy.

This third installment, Systemic Trust, explores a subtler frontier: how lawful discretion—actions taken within rules yet outside fairness—quietly fractures moral coherence.

Modern institutions now confuse procedural compliance with legitimacy.

They operate “within policy,” “by the book,” and “under authority”—but these phrases have become moral cover for outcomes that erode collective faith in justice, leadership, and merit.

When discretion becomes detached from integrity, institutions appear functional while trust silently collapses.

MindCast AI identifies this collapse through a new metric: the Discretion Integrity Index (DII), which measures the gap between an actor’s stated justification and the system’s perceived fairness.

This vision statement shows how to detect and forecast that gap—before discretion becomes distortion, and before legality becomes moral decay.

Contact mcai@mindcast-ai.com to partner with us on cultural innovation and law | economics.

II. The Problem: When Compliance Fails to Create Legitimacy

The deepest trust fractures now occur inside compliance, not outside it.

Institutions preserve legal form but abandon moral function. The public watches lawful acts that feel illegitimate, and employees experience management decisions that follow policy but betray principle.

Each event reinforces the same dissonance: what is legal is not always right, and what is right rarely feels safe.

Consider two examples:

Public (Comey indictment scenario):

A lawful prosecution or political investigation follows established procedure but feels retaliatory in motive. Even if evidence is sound, the intent appears partisan. The system functions, but the perception of fairness (public trust) collapses.

The cognitive signal: Law is intact, legitimacy is gone.

Private (Manager–Employee demotion):

A manager demotes a high-performing employee through HR mechanisms that satisfy due process. On paper, it’s justified. But the motive—resentment, insecurity, retaliation—is transparent to everyone.

The action is legal, yet relationally corrupt. The cognitive signal: Policy upheld, trust destroyed.

Both examples expose the same pattern: discretionary power misaligned with moral intention.

This is Systemic Abuse of Discretion—not illegal, but illegitimate. It operates beneath thresholds of audit, ethics review, or legal accountability, eroding confidence in both the fairness of authority and the integrity of institutions.

III. The Architecture of Systemic Trust

Trust within systems behaves like a distributed moral network.

Good faith is the operational expression of trust. It translates expectation into action—the confidence that discretion will be exercised honestly, transparently, and without hidden motive. Both fiduciary duty and systemic trust depend on good faith; when it erodes, compliance may persist, but legitimacy disintegrates.

Like fiduciary duty, systemic trust depends on expectation: the belief that those with power will act in others’ best interests even when unobserved. Fiduciary law formalizes this duty to act in another’s best interest, not merely within policy, and that is the standard Systemic Trust measures.

Trust is built not through rules, but through judgment exercised transparently within rules.

When discretion is fair, legitimacy compounds; when discretion is self-serving, legitimacy decays. The public can tolerate error, but it cannot tolerate manipulation disguised as process.

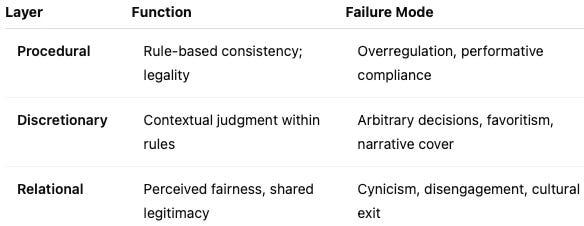

MindCast AI models Systemic Trust as a dynamic equilibrium among three fields: procedural legitimacy, relational legitimacy, and the discretionary layer.

When the discretionary layer weakens, procedural legitimacy becomes brittle and relational legitimacy evaporates.

Most modern institutions collapse not from legal violation, but from moral fatigue—the exhaustion of trust in the integrity of decision-making.

IV. Measurement Framework: The Discretion Integrity Index (DII)

Fiduciary duty offers an empirical test for the DII: when fiduciary integrity (acting loyally and prudently) erodes, the DII drops even though procedures appear compliant. Fiduciary law formalizes what Systemic Trust measures, namely alignment between discretion and the beneficiary’s interest. The DII Measurement Framework:

Power Integrity measured distortion.

Public Trust measured relational erosion.

Systemic Trust measures moral intention within lawful discretion.

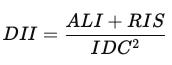

The DII quantifies how decision-making diverges from principled integrity under legal cover. It is built from three components:

ALI (Action–Language Integrity): alignment between official justification and actual behavior.

RIS (Relational Integrity Score): perceived fairness, empathy, and reciprocity in the decision context.

IDC (Intent Divergence Coefficient): the inferred gap between stated rationale and underlying motive, derived from linguistic tone, sequencing, and stakeholder reaction.

IDC is squared because intent divergence compounds distrust: the penalty grows quadratically, so each widening of the motive gap costs more legitimacy than the last. ALI and RIS are additive because either can partially compensate for the other in edge cases. The threshold of 60 marks the point at which relational coherence and procedural trust remain strong enough to sustain legitimacy; below it, perceived fairness begins to erode, and below 40 it collapses.
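The rationale above explains the DII’s structure, but the formula itself is not reproduced in this text. The sketch below is one hypothetical form consistent with that rationale, not MindCast AI’s published definition. It assumes ALI and RIS are scored 0 to 100, IDC is a 0 to 1 coefficient, and the `penalty` weight of 100 is an illustrative choice: ALI and RIS are averaged (additive), and the squared IDC is subtracted.

```python
def discretion_integrity_index(ali: float, ris: float, idc: float,
                               penalty: float = 100.0) -> float:
    """Hypothetical DII sketch; not MindCast AI's published formula.

    ali, ris: assumed 0-100 scores. idc: assumed 0-1 divergence coefficient.
    penalty: illustrative weight on the squared IDC term.
    """
    for name, value, upper in (("ali", ali, 100.0), ("ris", ris, 100.0), ("idc", idc, 1.0)):
        if not 0.0 <= value <= upper:
            raise ValueError(f"{name} must lie in [0, {upper}], got {value}")
    base = (ali + ris) / 2              # additive: a strong ALI can partly offset a weak RIS
    score = base - penalty * idc ** 2   # squared: doubling the gap quadruples the penalty
    return max(0.0, min(100.0, score))  # clamp to the assumed 0-100 scale


# Same actions and relational scores, widening motive gap:
for gap in (0.0, 0.25, 0.5):
    print(gap, discretion_integrity_index(80, 70, gap))
```

Under these assumptions the three calls return 75.0, 68.75, and 50.0: a motive gap of 0.25 costs about 6 points, while a gap of 0.5 costs 25 and pushes an otherwise strong decision into the contested band.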

Interpretation:

DII > 60 = high-integrity discretion (trust-building).

40–60 = lawful but contested discretion (neutral or eroding).

< 40 = systemic abuse of discretion (trust collapse).
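The interpretation bands translate directly into a lookup. A minimal sketch, assuming the DII is reported on a 0 to 100 scale; the function name and return labels are illustrative. Note the boundaries: a score of exactly 60 or exactly 40 lands in the contested band, because only scores above 60 count as high-integrity and only scores below 40 as systemic abuse.

```python
def classify_dii(dii: float) -> str:
    """Map a DII score (assumed 0-100) to the interpretation bands in the text."""
    if not 0.0 <= dii <= 100.0:
        raise ValueError(f"DII must lie in [0, 100], got {dii}")
    if dii > 60:
        return "high-integrity discretion (trust-building)"
    if dii >= 40:
        return "lawful but contested discretion (neutral or eroding)"
    return "systemic abuse of discretion (trust collapse)"


for score in (72, 60, 40, 35):
    print(score, classify_dii(score))
```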

Example Scoring:

These cases reveal a paradox of discretion: the more technically correct the process, the easier it becomes to mask moral failure.

V. Forecasting Legitimacy Erosion

MindCast AI simulates legitimacy erosion through DII trend mapping.

Each organization maintains a latent “trust reserve” built through history, performance, and transparency.

When the DII declines across decision cycles, that reserve depletes—predicting reputational crisis even if the institution remains compliant.
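The trust-reserve idea can be sketched as a running balance. This is a hypothetical model, not MindCast AI’s simulator: each decision cycle deposits into the reserve when the DII sits above the 60 threshold and withdraws when it falls below, and the starting `reserve` and `rate` values are illustrative assumptions. The function returns the first cycle in which the reserve is exhausted.

```python
from typing import Iterable, Optional


def first_crisis_cycle(dii_series: Iterable[float], reserve: float = 50.0,
                       rate: float = 1.0, threshold: float = 60.0) -> Optional[int]:
    """Return the 0-based cycle where a hypothetical trust reserve hits zero, else None."""
    for cycle, dii in enumerate(dii_series):
        # Above-threshold discretion rebuilds the reserve; below-threshold drains it.
        reserve += rate * (dii - threshold)
        if reserve <= 0:
            return cycle
    return None


# A compliant institution whose DII drifts downward across cycles.
print(first_crisis_cycle([70, 62, 55, 48, 42, 38], reserve=20.0))  # 4
```

Here the reserve absorbs two below-threshold cycles (55 and 48) before running out at the fifth decision (index 4), illustrating how depletion can precede any visible crisis.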

Erosion Curve Model:

1. Justification Inflation: language becomes procedural, defensive, or pre-emptive (“consistent with our values…”).

2. Perception Lag: insiders sense hypocrisy before outsiders notice.

3. Trust Collapse: legitimacy falls suddenly after a single triggering event (whistleblower, scandal, audit leak).

Predictive foresight depends on catching stage two—Perception Lag—when alignment metrics (ALI, RIS) still appear normal but linguistic and relational indicators show rising divergence.
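The stage-two test can be expressed as a simple rule. A hypothetical sketch: it flags Perception Lag when ALI and RIS both stay above an assumed “normal” level of 60 over the last few cycles while IDC, used here as a stand-in for the linguistic divergence signal, rises in every cycle. The window length and the choice of IDC as the divergence signal are assumptions.

```python
from typing import Sequence


def perception_lag(ali: Sequence[float], ris: Sequence[float], idc: Sequence[float],
                   normal: float = 60.0, window: int = 3) -> bool:
    """Hypothetical stage-two flag: headline metrics look fine, divergence keeps rising."""
    if not len(ali) == len(ris) == len(idc):
        raise ValueError("ali, ris and idc must cover the same cycles")
    if window < 2 or len(idc) < window:
        raise ValueError("need at least `window` (>= 2) cycles of history")
    recent_ali, recent_ris, recent_idc = ali[-window:], ris[-window:], idc[-window:]
    metrics_look_normal = (all(a > normal for a in recent_ali)
                           and all(r > normal for r in recent_ris))
    divergence_rising = all(later > earlier
                            for earlier, later in zip(recent_idc, recent_idc[1:]))
    return metrics_look_normal and divergence_rising


print(perception_lag([78, 77, 76], [82, 81, 80], [0.10, 0.18, 0.27]))  # True
```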

MindCast AI’s linguistic coherence engine quantifies this lag by measuring shifts in tone entropy and intent polarity, identifying when discretion is protecting power rather than principle. For instance, when a CEO’s quarterly letters shift from ‘we made mistakes’ (ALI 75) to ‘consistent with our long-term strategy’ (ALI 48) while stakeholder sentiment drops (RIS 82→56), the engine flags a 12-month trust collapse window.
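“Tone entropy” is not defined further in the text. One common reading is Shannon entropy over the distribution of tone categories in a document. The sketch below assumes each sentence has already been labeled with a tone category (the labels and sentence-level tagging are hypothetical) and measures how that entropy changes between two periods. It does not model intent polarity, and which direction of shift signals risk is left open.

```python
import math
from collections import Counter
from typing import Iterable


def tone_entropy(tone_labels: Iterable[str]) -> float:
    """Shannon entropy, in bits, of the tone-label distribution."""
    counts = Counter(tone_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one labeled sentence")
    # Sum of p * log2(1/p) over categories; a single tone yields 0.0 bits.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def entropy_shift(before: Iterable[str], after: Iterable[str]) -> float:
    """Change in tone entropy from one period (e.g. a quarterly letter) to the next."""
    return tone_entropy(after) - tone_entropy(before)


earlier = ["candid", "candid", "candid", "candid"]            # hypothetical labels
later = ["candid", "procedural", "defensive", "procedural"]  # hypothetical labels
print(entropy_shift(earlier, later))  # 1.5
```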

VI. Applications Across Domains

In the corporate and governance domains, a fiduciary lens applies: directors, managers, and public officials are fiduciaries whose legal compliance may still erode legitimacy if their intent diverges from the beneficiary’s welfare. This ties Systemic Trust to existing accountability standards.

1. Governance and Justice

Scenario: Politically charged investigations, selective prosecutions, or delayed disclosures.

Insight: High ALI, low RIS, rising IDC → lawful conduct, illegitimate motive.

Forecast: 12–18 months to public trust crisis if intent divergence persists.

2. Corporate Leadership

Scenario: HR compliance used as reputation shield (e.g., performance review retaliation).

Insight: Moderate ALI, low RIS, high IDC → procedural integrity masking managerial insecurity.

Forecast: Team disengagement and attrition within 6–9 months; culture decay measurable by falling RIS.

3. Academia and Meritocracy

Scenario: Tenure or promotion decisions shaped by ideological pressure or donor influence.

Insight: High ALI, declining RIS, growing IDC → loss of moral coherence in knowledge institutions.

Forecast: Credibility collapse within 3–5 years; brain drain and donor trust reduction follow.

4. Technology and Platforms

Scenario: Policy enforcement inconsistencies (e.g., selective content bans).

Insight: ALI–RIS divergence and NC > 80 = narrative governance over fairness.

Forecast: 6–12 months to perceived censorship crisis.

Across all domains, the pattern holds: lawful acts that betray fairness erode systemic trust faster than unlawful acts that align with moral principle.
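The Insight lines in the domain list describe score signatures in qualitative terms. A hypothetical sketch of how two of them (Governance and Justice, Corporate Leadership) could be matched against scores, assuming ALI and RIS on a 0 to 100 scale with high/moderate/low cut-offs borrowed from the DII bands, and IDC as a 0 to 1 coefficient where 0.5 or more counts as “high”. These cut-offs are illustrative assumptions. The Technology and Platforms signature is left out because it depends on an NC measure this section does not define.

```python
def band(score: float, low_cut: float = 40.0, high_cut: float = 60.0) -> str:
    """Qualitative band for an assumed 0-100 score; cut-offs borrowed from the DII bands."""
    if score > high_cut:
        return "high"
    if score >= low_cut:
        return "moderate"
    return "low"


def read_insight(ali: float, ris: float, idc_prev: float, idc_now: float,
                 idc_high: float = 0.5) -> str:
    """Match hypothetical scores against two Insight signatures from the domain list."""
    ali_band, ris_band = band(ali), band(ris)
    if ali_band == "high" and ris_band == "low" and idc_now > idc_prev:
        # Governance and Justice: high ALI, low RIS, rising IDC
        return "lawful conduct, illegitimate motive"
    if ali_band == "moderate" and ris_band == "low" and idc_now >= idc_high:
        # Corporate Leadership: moderate ALI, low RIS, high IDC
        return "procedural integrity masking managerial insecurity"
    return "no signature matched"


print(read_insight(ali=78, ris=35, idc_prev=0.2, idc_now=0.4))
```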

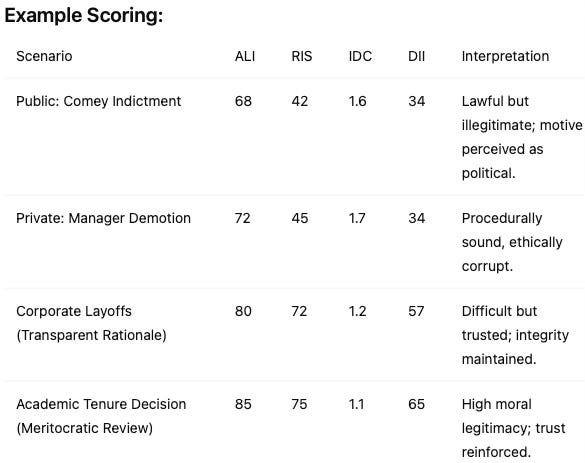

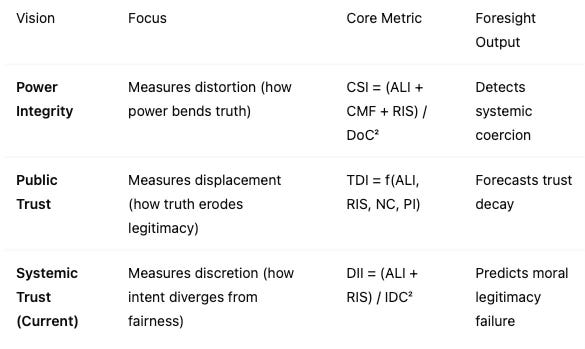

VII. Integration with the MCAI Culture Vision Framework

MindCast AI’s three Vision Statements (Power Integrity, Public Trust, and Systemic Trust) form an integrated foresight triad.

Together they compose a cognitive moral architecture:

Distortion → Displacement → Discretion.

Coercion distorts signals, erosion displaces trust, and misaligned discretion corrupts systems from within.

Each framework builds on what the prior revealed: Power Integrity showed what distorts cognition. Public Trust showed how that distortion erodes institutions. Systemic Trust reveals where erosion hides, inside the discretionary choices that shape culture one decision at a time.

This triad converts qualitative moral phenomena into quantitative foresight: institutions can now measure not just what they do, but why they are trusted—or not.

VIII. Conclusion

Institutions fail long before they fall.

Their collapse is moral before it is structural, emotional before it is visible, and discretionary before it is illegal.

When power hides behind process, and discretion replaces judgment, coherence collapses from the inside out.

The Power Integrity, Public Trust, and Systemic Trust frameworks together form MindCast AI’s moral foresight architecture.

They show how power distorts, how trust decays, and how discretion corrodes legitimacy—each measurable, forecastable, and correctable through coherent intent and transparent alignment.

MindCast AI’s vision is simple: to measure moral gravity at the speed of governance.

Systems that align intention with integrity will endure. Those that confuse procedure with principle will fragment, however lawful they appear.

Because in the architecture of trust, legality is the floor—but legitimacy is the foundation.

Appendix: Prior MindCast AI Works Cited

1. MCAI Culture Vision: Power Integrity and the Future of Coercive Narrative Governance

Subtitle: How Foresight, Trust, and Moral Architecture Can Rebuild Truth in an Era of Narrative Control

Publication: MindCast AI, May 2025

URL: www.mindcast-ai.com/p/cngmisinfo

Summary: Introduces the Power Integrity framework, showing how coercive systems distort cognition and truth. It defines the Causal Signal Integrity (CSI) metric to detect when narrative control replaces transparency, establishing a foundation for measuring institutional distortion.

2. MCAI Culture Vision: Public Trust and Institutions

Subtitle: How MindCast AI Measures, Forecasts, and Helps Restore Trust in a Culture of Coercive Narrative Governance

Publication: MindCast AI, June 2025

URL: www.mindcast-ai.com/p/cngtrust

Summary: Extends Power Integrity into relational dynamics, introducing the Trust Displacement Index (TDI). It explains how institutions lose legitimacy even while appearing functional, and outlines measurable recovery strategies through the Truth & Repair Window process.