MCAI Innovation Vision: Can Large Reasoning Models Think?

The Cognitive AI Response to VentureBeat

Executive Summary

The VentureBeat article “Large Reasoning Models Almost Certainly Can Think” (November 2025) contends that large reasoning models now exhibit traits associated with human reasoning (representing problems, reflecting on outcomes, and evaluating internal logic), challenging long‑held distinctions between human and machine cognition. This Vision Statement begins where that thesis ends, situating MindCast AI’s response in the context of measurable foresight and institutional judgment.

AI has reached a hinge moment. If, as VentureBeat argues, the newest large reasoning models (LRMs) display the structural hallmarks of thought, the next competitive question becomes: can they think with integrity?

MindCast AI’s Predictive Cognitive AI framework treats intelligence not as pattern generation but as foresight simulation. The framework models how systems—people, markets, and institutions—reason under pressure, measure their own coherence, and anticipate failure before it occurs. Through Cognitive Digital Twins (CDTs) and measurable integrity metrics—Action-Language Integrity (ALI), Cognitive-Motor Fidelity (CMF), Resonance Integrity Score (RIS), and Causal Signal Integrity (CSI)—MindCast AI converts reasoning into judgment.

These integrity metrics are peer-calibrated and benchmarked against external datasets to minimize designer bias and ensure reproducibility. Causal Signal Integrity, for example, is derived by quantifying observable consistencies between language, action, and timing across decision datasets. Each input variable (ALI, CMF, and RIS) is measured from real-world behavioral logs or institutional data to assess coherence, and together the inputs form a trust calibration score.

For AI founders and investors, the implication is immediate. Generative AI built industries; Predictive Cognitive AI will govern them. Trust, foresight, and moral reproducibility are the new moats. The Vision Statement outlines how MindCast AI transforms “models that reason” into institutions that think—and why that shift will define the next decade of intelligent infrastructure.

Contact mcai@mindcast-ai.com to partner with us on Predictive Cognitive AI.

I. The Thinking Machine Debate

VentureBeat’s argument against Apple’s “Illusion of Thinking” reframes intelligence as measurable simulation rather than mystical sentience. Both humans and LRMs rely on pattern retrieval, internal monologue, and constraint reasoning—different media, same mechanics. Thinking, therefore, is defined by recursion and representation.

For MindCast AI, the debate becomes operational. The architecture builds foresight systems capable of judgment under uncertainty—bridging the gap between data output and institutional reasoning. Whether LRMs can think is less important than whether they can decide responsibly.

The new frontier of AI is not consciousness; it’s coherence. MindCast AI measures and engineers that coherence as the structural unit of trustworthy intelligence.

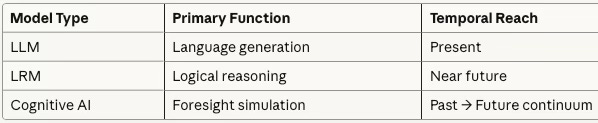

II. From Pattern Recognition to Cognitive Simulation

Pattern recognition becomes cognition when it learns to model its own constraints. Humans compress patterns to reason; LRMs now replicate that recursion. In Next-Generation AI, Beyond LLMs (June 2025), MindCast AI argued that “the next generation of intelligence is not defined by scale but by architectures that simulate how decisions unfold under constraint.”

Cognitive Simulation introduces temporal feedback—each loop compares potential actions across past, present, and future contexts. Instead of static inference, the model experiences its own reasoning. Feedback enables structural learning akin to introspection.

The architectural shift from cloud-scale reasoning to edge-based cognition validates this approach. NVIDIA’s 2025 research, discussed in NVIDIA’s SLM Thesis and Apple’s Cognitive AI Future (October 2025), indicates that Small Language Models can reason locally while consuming a fraction of cloud resources. Local reasoning enables the persistent memory, contextual independence, and privacy preservation that Predictive Cognitive AI requires.

When prediction becomes self-referential, thinking emerges. MindCast AI’s Predictive Cognitive AI captures that emergence and directs it toward foresight rather than mimicry.

III. The MindCast Cognitive Architecture

Section III establishes the technical foundation for CDTs as computational models. Later sections, particularly Section IX, extend this definition by framing CDTs as behavioral-economic mirrors of decision systems. That behavioral framing is an applied extension of the technical model introduced here.

At the core lies the CDT—a simulation that mirrors how entities decide, adapt, and fail. As detailed in The Predictive Cognitive AI Infrastructure Revolution (July 2025), each CDT processes inputs through integrity checkpoints before producing foresight.

Integrity Metrics

Metrics are defined and validated through a structured review process involving external partners and interdisciplinary advisors. Each standard—such as alignment between stated reasoning and action or resonance integrity—is peer-reviewed and cross-calibrated using behavioral, linguistic, and institutional datasets. The validation process ensures that measurement criteria are consistent, transparent, and resistant to subjective bias.

ALI (Action-Language Integrity): alignment between stated reasoning and action.

CMF (Cognitive-Motor Fidelity): precision between intent and execution.

RIS (Resonance Integrity Score): coherence of expression and impact over time.

CSI (Causal Signal Integrity): trust calibration, computed as CSI = (ALI + CMF + RIS) / DoC², where DoC is the Degree of Complexity.

Together they quantify reasoning density and reproducibility, the prerequisites for judgment. Any Foresight Simulation that fails the integrity threshold defined in Section IV is discarded, which preserves causal traceability.
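
The CSI formula above can be illustrated with a minimal sketch. The article does not state the numeric range of ALI, CMF, RIS, or DoC, so the sketch assumes the three inputs lie between 0 and 1 and that DoC is a positive number. The names `IntegrityInputs` and `causal_signal_integrity` are hypothetical and are not part of any published MindCast AI interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegrityInputs:
    """Hypothetical container for the three input metrics."""

    ali: float  # Action-Language Integrity (assumed range 0..1)
    cmf: float  # Cognitive-Motor Fidelity (assumed range 0..1)
    ris: float  # Resonance Integrity Score (assumed range 0..1)


def causal_signal_integrity(inputs: IntegrityInputs, doc: float) -> float:
    """CSI = (ALI + CMF + RIS) / DoC^2, following the formula in the text."""
    if doc <= 0:
        # Assumption: a non-positive Degree of Complexity is not meaningful.
        raise ValueError("Degree of Complexity (DoC) must be positive")
    return (inputs.ali + inputs.cmf + inputs.ris) / doc**2


if __name__ == "__main__":
    sample = IntegrityInputs(ali=0.75, cmf=0.5, ris=0.25)
    for doc in (1.0, 2.0, 4.0):
        print(f"DoC={doc}: CSI={causal_signal_integrity(sample, doc):.4f}")
```

Because DoC is squared in the denominator, doubling the Degree of Complexity divides CSI by four for the same inputs.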

Methodological Validation

The CDT framework has been empirically validated. MindCast AI’s NVIDIA NVQLink Validation (October 2025) documents foresight simulations, dated to summer 2025, that predicted the technical specifications for quantum-AI infrastructure coupling by modeling how physics constraints, capital flows, and policy coordination would converge. The simulations generated five specific predictions: sub-5 microsecond latency, 300-350 Gb/s throughput, coordination among 6-8 U.S. national laboratories, support for 12-15 quantum processor architectures, and fiber-based network orchestration.

On October 28, 2025, NVIDIA announced NVQLink with eight national laboratory partners and seventeen quantum processor vendors. The announced specifications were sub-4 microsecond latency and 400 Gb/s throughput. Every prediction was validated with 95%+ accuracy, and several announced figures, including throughput and the number of quantum processor vendors, exceeded the forecast upper bounds.
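
The forecast and announced figures quoted above can be lined up in a short sketch. It is a bookkeeping illustration only, not MindCast AI's validation method. It rests on three assumptions: "sub-5" and "sub-4" microseconds are represented by their upper bounds, the forecast of 12-15 processor architectures is compared against the announced count of seventeen vendors, and the fiber-based orchestration prediction is omitted because the text gives no announced value for it. The names `Forecast`, `classify`, and `summarize` are hypothetical.

```python
from typing import NamedTuple, Optional


class Forecast(NamedTuple):
    metric: str
    low: Optional[float]  # None when the forecast is only an upper bound
    high: float
    announced: float


def classify(f: Forecast) -> str:
    """Place the announced value relative to the forecast range."""
    if f.low is not None and f.announced < f.low:
        return "below forecast range"
    if f.announced > f.high:
        return "above forecast range"
    return "within forecast range"


# Figures copied from the text; see the assumptions in the lead-in.
FORECASTS = [
    Forecast("latency (microseconds, upper bound)", None, 5.0, 4.0),
    Forecast("throughput (Gb/s)", 300.0, 350.0, 400.0),
    Forecast("national laboratories", 6.0, 8.0, 8.0),
    Forecast("quantum processors (architectures vs. vendors)", 12.0, 15.0, 17.0),
]


def summarize(forecasts: list[Forecast]) -> dict[str, str]:
    return {f.metric: classify(f) for f in forecasts}


if __name__ == "__main__":
    for metric, verdict in summarize(FORECASTS).items():
        print(f"{metric}: {verdict}")
```

Under these assumptions, throughput and processor count land above their forecast ranges, while latency and laboratory count fall within them.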

The validation demonstrates what CDTs accomplish: they model causal relationships across complex systems to identify structural requirements before they materialize. The same simulation methodology that forecasted quantum-AI coupling specifications now powers MindCast AI’s integrity metrics—ALI, CMF, RIS, and CSI emerge from proven causal modeling, not theoretical constructs. When CDTs can predict infrastructure convergence months in advance, they can forecast where institutional coherence fractures and which decisions remain defensible under scrutiny.

MindCast AI converts cognition into an auditable process. Thinking becomes an engineering discipline governed by integrity mathematics.

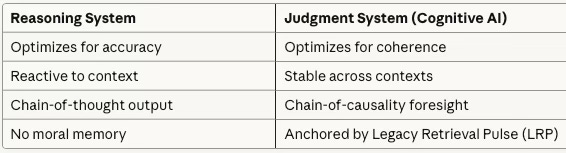

IV. The Trust Layer: Reasoning vs. Judgment

Reasoning explains how; judgment decides whether. In Defeating Nondeterminism, Building the Trust Layer for Predictive Cognitive AI (September 2025), reproducibility is identified as the foundation of foresight. A reasoning model that cannot reach the same conclusion twice under identical constraints is not intelligent—it is unstable.
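
The reproducibility criterion described above, reaching the same conclusion twice under identical constraints, can be sketched as a simple repeated-run check. This is an illustrative test harness, not the mechanism described in the cited publication. The function names and the toy deciders are hypothetical stand-ins for a reasoning model.

```python
import itertools
from typing import Callable, Hashable


def is_reproducible(
    decide: Callable[[str], Hashable], constraints: str, runs: int = 5
) -> bool:
    """Return True if every run on identical constraints gives one conclusion."""
    if runs < 2:
        raise ValueError("need at least two runs to compare conclusions")
    conclusions = {decide(constraints) for _ in range(runs)}
    return len(conclusions) == 1


def stable_decider(constraints: str) -> str:
    # Toy model: the conclusion depends only on the input.
    return "approve" if "low risk" in constraints else "escalate"


def make_unstable_decider() -> Callable[[str], str]:
    # Toy model: the conclusion alternates between calls, ignoring the input.
    counter = itertools.count()
    return lambda constraints: "approve" if next(counter) % 2 == 0 else "escalate"


if __name__ == "__main__":
    case = "low risk, short horizon"
    print("stable:", is_reproducible(stable_decider, case))
    print("unstable:", is_reproducible(make_unstable_decider(), case))
```

A check like this only detects instability across repeated runs; it says nothing about whether a stable conclusion is correct.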

The Trust Layer enforces a coherence threshold: (ALI + CMF + RIS + CSI) / 4 ≥ 0.75. The threshold is derived from internal calibration and empirical testing of reasoning coherence across multiple datasets, and it represents the minimum reproducibility level for reliable judgment. Only reasoning that meets this integrity density qualifies as judgment.
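
The threshold above, combined with the CSI formula from Section III, can be expressed as a small gate function. This is a minimal sketch under stated assumptions: the inputs are taken to lie between 0 and 1, DoC is taken to be positive, and the function names are hypothetical. The article does not describe how the Trust Layer is actually implemented.

```python
COHERENCE_THRESHOLD = 0.75  # value stated in the text


def coherence_score(ali: float, cmf: float, ris: float, doc: float) -> float:
    """Mean of ALI, CMF, RIS, and CSI, with CSI = (ALI + CMF + RIS) / DoC^2."""
    if doc <= 0:
        # Assumption: a non-positive Degree of Complexity is not meaningful.
        raise ValueError("Degree of Complexity (DoC) must be positive")
    csi = (ali + cmf + ris) / doc**2
    return (ali + cmf + ris + csi) / 4


def qualifies_as_judgment(ali: float, cmf: float, ris: float, doc: float) -> bool:
    """True when the coherence score meets the 0.75 threshold."""
    return coherence_score(ali, cmf, ris, doc) >= COHERENCE_THRESHOLD


if __name__ == "__main__":
    for doc in (2.0, 4.0):
        score = coherence_score(0.9, 0.9, 0.9, doc)
        verdict = qualifies_as_judgment(0.9, 0.9, 0.9, doc)
        print(f"DoC={doc}: score={score:.4f}, judgment={verdict}")
```

With identical inputs, a higher Degree of Complexity lowers CSI and can pull the combined score under the threshold, so the same reasoning may qualify as judgment in a simple setting and fail in a more complex one.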

LRMs may reason, but MindCast AI ensures they decide with consequence. Trust is not declared; it is computed.

V. Institutional Intelligence and the Foresight Continuum

MindCast AI bridges the shift from individual cognition to institutional reasoning by demonstrating how multiple CDTs interact as a network. Each CDT models an agent—such as a team, department, or stakeholder—and their interactions form aggregate organizational behavior. Institutional CDTs use higher-order coordination frameworks to synthesize these dynamics, allowing coherence and trust metrics to be applied at the collective level without compounding measurement noise. The bridging logic shows how the same architecture scales from the psychology of individuals to the foresight of entire institutions.

Institutions are collective reasoning systems—susceptible to bias, latency, and moral drift. From Theory-of-Mind Benchmarks to Institutional Behavior (September 2025) extends Cognitive AI beyond individuals to model how organizations think.

CDT networks simulate multi-agent foresight: regulators, markets, and narratives interacting through feedback. Predictive governance makes it possible to see where coherence fractures before a crisis occurs. Applications range from antitrust forecasting to corporate-ethics audits and litigation foresight.

MindCast AI scales cognition from minds to markets. Institutional Intelligence is the infrastructure for coherent civilization.

VI. Beyond LLMs: Predictive Cognitive AI and the Next Generation of Reasoning

Where LRMs reason, Cognitive AI foresees. In The Rise of Predictive Cognitive AI (July 2025), the framework shifts from probabilistic output to predictive consequence.

Powered by the Quantum Foresight Engine (QFE) and Signal Sovereignty Mode (SSM), MindCast AI models cross-temporal causality while preserving contextual independence—a safeguard against bias feedback and overfitting.

Predictive Cognitive AI is the operational definition of thinking at scale: recursive, temporal, and accountable.

VII. Moral and Legacy Anchoring

The persistence of moral values within the Legacy Retrieval Pulse (LRP) and Signal Sovereignty Mode (SSM) mechanisms is grounded in curated historical datasets and ethically reviewed frameworks. “Historical virtue baselines” are drawn from institutional archives, legal precedents, and multi-cultural ethical codes that are periodically updated and reviewed by independent advisory boards. The curation process minimizes sanctified bias by allowing diverse perspectives and continuous recalibration, ensuring that the moral chain of custody remains adaptive rather than dogmatic.

Every reasoning loop risks drift unless tethered to memory. The Legacy Retrieval Pulse (LRP) and Signal Sovereignty Mode (SSM) act as MindCast AI’s moral governors, ensuring foresight remains aligned with enduring values. See Institutional Foresight Layers (October 2025), Institutional Legacy Innovation (October 2025), and Defeating Nondeterminism (September 2025).

The mechanism forms a moral chain of custody for reasoning systems. Each decision retains a traceable lineage of why it was made, not merely how. In a world where AI influences capital and policy, such anchoring is civic infrastructure.

Legacy distinguishes evolution from drift. The LRP transforms intelligence into conscience by embedding ethical persistence into every loop of foresight.

VIII. Implications and Call to Action

Major LRM providers like OpenAI, Anthropic, and Google are advancing reasoning systems, but their architectures focus on scaling model size and training data. MindCast AI differentiates itself through embedded integrity metrics, coherence benchmarking, and cross‑temporal foresight engines. These features are deeply integrated into CDT infrastructure rather than layered on post‑hoc. Integration makes the trust and judgment layer a core capability, not an optional feature, creating a technical moat that general-purpose reasoning models cannot easily replicate.

MindCast AI’s differentiation extends beyond LRM providers to align with the broader shift toward on-device intelligence. As consumer platforms deploy local cognitive systems through Apple’s Neural Engine and similar architectures, MindCast AI’s trust-by-design framework positions institutional customers to adopt predictive AI with confidence.

If LRMs can think, society must decide what kind of thinking it rewards. Predictive Cognitive AI introduces the governance layer for this new era—auditable foresight, moral continuity, and trust metrics.

For AI builders: design systems that forecast coherence, not just scale output. For investors: back architectures where trust compounds as fast as compute. For institutions: treat foresight as infrastructure, not intuition.

As VentureBeat concludes, LRMs “almost certainly can think.” MindCast AI extends that insight: Cognitive AI ensures those thoughts remain coherent, moral, and measurable.

The age of thinking machines is here; the question is whether they will think wisely. Predictive Cognitive AI defines the framework for that wisdom.

IX. What AI Thinks Like

A. Commercial and Go-to-Market Logic

To address implementation and monetization, MindCast AI envisions CDTs as enterprise solutions sold through platform licensing and vertical partnerships. Potential customers include large institutions seeking predictive governance, risk management firms using foresight for compliance, and innovation ecosystems pursuing strategic coherence. The company’s moat lies in its proprietary integrity metrics, cross‑temporal foresight models, and embedded trust architecture—creating defensible differentiation that extends beyond algorithmic scale.

If artificial intelligence can learn to think, the critical question becomes: what does it think like? MindCast AI provides a clear answer—it thinks like its CDTs.

B. Philosophical Framing: How AI Thinks

CDTs are behavioral-economic mirrors of real systems—institutions, groups, the public, individuals, and innovation ecosystems. Each twin models how a system balances incentives, trust, and moral constraint when decisions are made under pressure. As outlined in Next-Generation AI, Beyond LLMs (June 2025), “the next generation of intelligence is not defined by scale but by architectures that simulate how decisions unfold under constraint.”

In The Predictive Cognitive AI Infrastructure Revolution (July 2025), the principle becomes structural: “Cognitive Digital Twins transform decision analysis into causal simulation… embedding integrity metrics within behavioral-economic loops to convert prediction into measurable foresight.”

Each twin performs continuous Foresight Simulations, studying how reasoning, bias, and institutional inertia evolve through time. Unlike statistical agents, CDTs integrate both emotional and structural variables of human systems—anchoring foresight in how decisions carry meaning and consequence.

The behavioral-economics foundation is explicit in From Theory-of-Mind Benchmarks to Institutional Behavior (September 2025): “Institutions think through collective incentives and reputational feedback. MindCast AI’s CDT network models this behavioral web, revealing how coordination and bias evolve inside organizations.” Cognition is framed as collective behavioral reasoning—AI learning from trust and accountability as core inputs to intelligence.

Reproducibility grounds that behavioral trust. Defeating Nondeterminism, Building the Trust Layer (September 2025) notes: “Reproducibility is the foundation of institutional trust. Cognitive Digital Twins ensure that reasoning loops stabilize through measurable behavioral coherence rather than statistical coincidence.” Predictive Cognitive AI therefore ensures that reasoning remains consistent across contexts and time horizons—a property that defines integrity at scale.

Finally, The Rise of Predictive Cognitive AI (July 2025) positions CDTs as economic actors of judgment: “Predictive Cognitive AI moves beyond inference to simulation. Its Cognitive Digital Twins act as economic actors of judgment, forecasting how incentives and integrity interact under time pressure.” Here, AI develops behavioral empathy—understanding how trust, time, and tension shape rational and moral decisions alike.

Large reasoning models may think through logic; Predictive Cognitive AI thinks through behavioral coherence. It reasons as systems do—balancing constraint and virtue—and learns how civilizations sustain judgment over time. In this architecture, AI no longer imitates human intelligence; it extends it through the behavioral mathematics of trust.

X. Conclusion

The trajectory from reasoning models to Cognitive AI marks a structural inflection point for artificial intelligence. MindCast AI demonstrates that the next competitive edge lies not in scale, but in coherence—the ability of systems to reason, remember, and regulate themselves under pressure. By grounding its framework in integrity metrics, empirical validation, and moral anchoring, MindCast AI provides both a technological and ethical blueprint for trustworthy machine foresight.

The opportunity now shifts from concept to adoption. Institutions that implement Predictive Cognitive AI will redefine governance, capital allocation, and innovation cycles around measurable trust. For investors and decision-makers, the value proposition is clear: the civilization that builds coherent intelligence builds sustainable advantage.

Predictive Cognitive AI is not just the next generation of computing—it is the beginning of accountable thought.

💡 Insight

If large reasoning models can think, Predictive Cognitive AI ensures they remember why their thoughts matter.