MCAI Market Vision: The Bottleneck Hierarchy in U.S. AI Data Centers

Predictive Cognitive AI and Data Center Energy, Networking, Cooling Constraints

See companion studies: VRFB’s Role in AI Energy Infrastructure, Perpetual Energy for Perpetual Intelligence — Aligning Infrastructure Permanence with the Age of AI (Aug 2025); Nvidia's Moat vs. AI Datacenter Infrastructure-Customized Competitors (Aug 2025); AI Datacenter Edge Computing, Ship the Workload Not the Power (Sep 2025).

Executive Summary

MindCast AI, a predictive cognitive AI firm, frames this study as a foresight simulation of U.S. data center strategy. The thesis is simple: the future of hyperscale AI will not be decided by who buys the most GPUs, but by who secures firm energy, masters network topologies, and engineers cooling that earns community acceptance. Energy functions as the systemic moat, networking as the scale governor, and cooling as the execution filter. The foresight simulation reveals which firms are advantaged across these three layers, and where structural fractures are most likely to occur.

I: The Bottleneck Hierarchy

The hierarchy of constraints in AI data centers reflects the sequence in which physics and infrastructure limit progress. Energy is the systemic determinant: without abundant, reliable power, capacity cannot expand. Networking follows as the scale constraint: bandwidth and topology define whether large training runs succeed. Cooling and density are the execution bottleneck: engineering choices and social acceptance determine how tightly compute can be packed into a facility.

MindCast AI uses this hierarchy to anticipate cascading effects, simulating how constraints in one layer amplify pressure in the next.
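One way to read the hierarchy is as a set of ceilings on deployable accelerators, where the lowest ceiling binds. The sketch below is illustrative only: the `Site` fields, the example numbers, and the simple lowest-ceiling rule are assumptions made for this example, not MindCast AI's simulation method.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """Hypothetical site description; every field is an illustrative assumption."""
    firm_power_mw: float       # contracted firm power
    kw_per_gpu_all_in: float   # GPU draw plus its share of cooling and overhead
    network_max_gpus: int      # largest cluster the fabric design supports
    cooled_rack_slots: int     # racks the cooling plant can serve
    gpus_per_rack: int


def layer_limits(site: Site) -> dict[str, int]:
    """GPU ceiling imposed by each layer, listed in hierarchy order."""
    return {
        "energy": int(site.firm_power_mw * 1000 / site.kw_per_gpu_all_in),
        "networking": site.network_max_gpus,
        "cooling": site.cooled_rack_slots * site.gpus_per_rack,
    }


def binding_constraint(site: Site) -> tuple[str, int]:
    """Return the layer with the lowest ceiling, and that ceiling.

    Ties resolve to the earlier layer in the hierarchy (energy first).
    """
    limits = layer_limits(site)
    layer = min(limits, key=limits.get)
    return layer, limits[layer]


# Hypothetical site: 300 MW firm power, 1.5 kW all-in per GPU,
# a fabric designed for 131,072 GPUs, and 3,000 cooled racks of 72 GPUs.
example = Site(300.0, 1.5, 131072, 3000, 72)
print(binding_constraint(example))
```

With these hypothetical inputs the fabric ceiling (131,072 GPUs) binds before energy (200,000) or cooling (216,000), which shows how a firm can be well supplied in one layer and still be capped by another.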

Contact mcai@mindcast-ai.com to partner with us on innovation and market foresight simulations.

II: Energy as the Macro Bottleneck

Energy supply has shifted from an operational cost to a structural advantage. Microsoft has signed a 20-year, 835 MW nuclear PPA tied to the restart of Three Mile Island Unit 1 and a fusion PPA with Helion targeting 2028. Amazon has locked in 1.92 GW from the Susquehanna nuclear plant and announced a $20B Pennsylvania campus. Google combines enhanced geothermal projects with Fervo in Nevada and an advanced nuclear agreement with Kairos and TVA. Meta has signed some of the largest renewable PPAs in the U.S. and is exploring nuclear siting options. Apple emphasizes renewable procurement and efficiency-driven design. Oracle and NVIDIA depend more on partner utilities and colocation.

Energy procurement dictates siting, pace, and credibility. Firms with secured firm power will outbuild rivals constrained by grid congestion. MindCast AI’s foresight simulation signals that long-term differentiation will be driven by energy commitments and siting strategy.
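To make the link between firm power and buildable capacity concrete, the following back-of-envelope sketch converts a contracted megawatt figure into a rough accelerator count. The 835 MW and 1.92 GW inputs come from the contracts cited above; the per-accelerator IT draw (1.5 kW) and the PUE (1.25) are placeholder assumptions chosen for illustration, not figures from any of the firms named.

```python
def supported_accelerators(firm_mw: float,
                           it_kw_per_accelerator: float,
                           pue: float) -> int:
    """Rough count of accelerators a firm-power contract could run.

    firm_mw: contracted firm power in megawatts.
    it_kw_per_accelerator: assumed IT draw per accelerator, including its
        share of CPU, memory, and networking (illustrative value).
    pue: assumed power usage effectiveness (total facility power / IT power).
    """
    if it_kw_per_accelerator <= 0:
        raise ValueError("it_kw_per_accelerator must be positive")
    if pue < 1.0:
        raise ValueError("pue cannot be below 1.0")
    it_power_kw = firm_mw * 1000 / pue  # power left for IT after facility overhead
    return int(it_power_kw // it_kw_per_accelerator)


# Contract sizes from the text; the 1.5 kW and 1.25 PUE values are assumptions.
for label, mw in [("835 MW PPA", 835), ("1.92 GW PPA", 1920)]:
    print(label, supported_accelerators(mw, 1.5, 1.25))
```

The absolute counts depend entirely on the assumed draw and PUE. What survives any choice of assumptions is the ratio: under identical facility designs, the larger contract supports proportionally more accelerators.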

III: Networking as the Meso Bottleneck

Once energy is secured, the next challenge is moving data between accelerators fast enough to train trillion-parameter models. Microsoft deploys InfiniBand at 400 Gb/s per GPU, enabling synchronized training at extreme scale. AWS relies on Ethernet-based Elastic Fabric Adapter with SRD transport, achieving 3.2 Tb/s per instance. Google uses optical circuit switching in TPU pods to dynamically rewire bandwidth. Oracle builds RoCE RDMA-based superclusters, claiming scale up to 131,072 GPUs. Meta emphasizes Ethernet-driven scalability through RoCE fabrics in its large GPU training clusters. NVIDIA defines the reference architecture with NVLink domains, Spectrum-X Ethernet, and Quantum InfiniBand.

Networking defines cluster size and efficiency more than raw GPU count. MindCast AI simulations show that design choices made today set the ceiling for model scale and resilience tomorrow.
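The bandwidth figures above can be related with simple arithmetic. The sketch below uses the standard bandwidth-only cost model for a ring all-reduce, in which each GPU sends and receives 2(N-1)/N times the payload, and ignores latency, congestion, and overlap with compute. The 8-GPU node size and the 100 GB gradient payload are assumptions for illustration; only the 400 Gb/s per-GPU figure comes from the text.

```python
def node_bandwidth_tbps(gpus_per_node: int, gbps_per_gpu: float) -> float:
    """Aggregate injection bandwidth of one node, in Tb/s."""
    return gpus_per_node * gbps_per_gpu / 1000


def ring_allreduce_seconds(payload_bytes: float,
                           n_gpus: int,
                           link_gbps: float) -> float:
    """Bandwidth-only lower bound on ring all-reduce time.

    Each GPU transfers 2 * (N - 1) / N times the payload over its link.
    Latency and protocol overhead are deliberately ignored.
    """
    if n_gpus < 2:
        return 0.0  # nothing to reduce across a single GPU
    bytes_per_second = link_gbps * 1e9 / 8  # convert Gb/s to bytes/s
    return 2 * (n_gpus - 1) / n_gpus * payload_bytes / bytes_per_second


# Assumed 8-GPU node at 400 Gb/s per GPU, reducing a hypothetical 100 GB payload.
print(node_bandwidth_tbps(8, 400))           # aggregate per-node bandwidth, Tb/s
print(ring_allreduce_seconds(100e9, 8, 400)) # seconds per all-reduce
```

Under the 8-GPU assumption, 400 Gb/s per GPU and 3.2 Tb/s per instance describe the same per-accelerator bandwidth. The model also shows why per-link bandwidth, not GPU count, sets synchronization time: the 2(N-1)/N factor approaches 2 as the cluster grows, so the payload-to-bandwidth ratio dominates.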

IV: Cooling and Density as the Micro Bottleneck

At the facility level, cooling and rack design determine how efficiently capital translates into capacity. Direct-to-chip liquid cooling is now baseline for Blackwell-generation (GB200) clusters, while 400 V DC power distribution and racks approaching 1 MW are the emerging design targets. Microsoft emphasizes zero-water cooling systems and 400 V DC racks. AWS integrates in-row heat exchangers into its liquid-cooled UltraServers. Google moves toward ±400 V DC disaggregation with widespread liquid cooling. Meta highlights sustainable cooling to align with regulators and communities. Apple focuses on high-efficiency, lower-power inference workloads.

Cooling rarely stops projects outright, but it defines rack economics and public acceptance. MindCast AI integrates these facility-level signals to forecast which designs can scale without regulatory or community pushback.
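Rack economics can be illustrated with a small calculation of how many racks a given IT load requires at different power densities. The 1 MW rack figure is taken from the text above; the 120 kW comparison density and the 100 MW hall size are assumptions chosen for illustration.

```python
import math


def racks_needed(it_load_mw: float, kw_per_rack: float) -> int:
    """Number of racks required to host a given IT load at a given density."""
    if kw_per_rack <= 0:
        raise ValueError("kw_per_rack must be positive")
    return math.ceil(it_load_mw * 1000 / kw_per_rack)


# Hypothetical 100 MW hall at two rack densities.
for kw in (120, 1000):
    print(f"{kw} kW racks: {racks_needed(100, kw)}")
```

Fewer, denser racks shrink floor area and cabling runs but concentrate heat. That is why density targets and cooling design have to be decided together.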

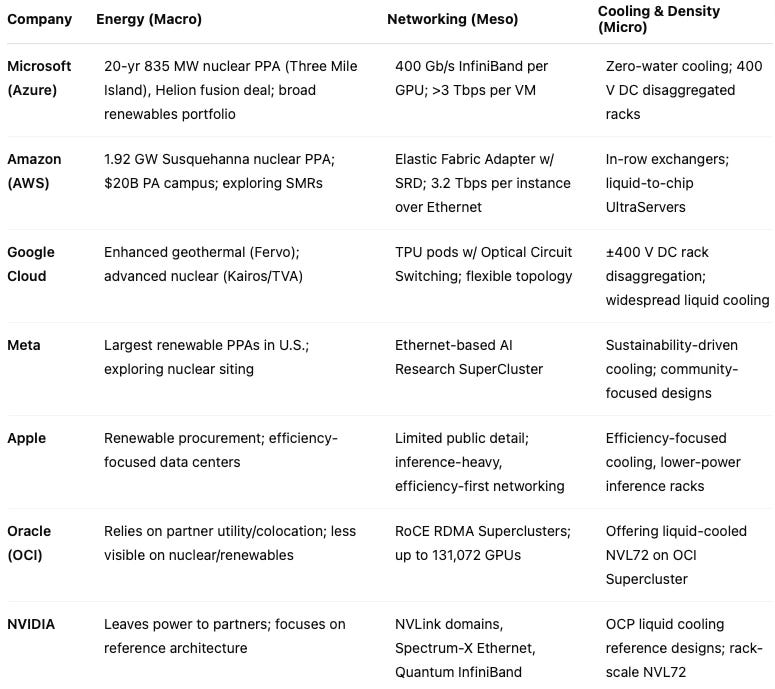

V: Comparative Matrix by Bottleneck

The comparative matrix distills how U.S. hyperscalers and vendors address the three layers of the bottleneck hierarchy. Arranging energy, networking, and cooling responses side by side makes clear where firms have secured durable advantages and where they depend on partners.

MindCast AI’s foresight simulation reveals clear divergence: Microsoft, Amazon, and Google lock in firm power; Meta and Apple emphasize renewables and efficiency; networking splits between InfiniBand, Ethernet, and optical switching; cooling converges on liquid-to-chip with different community strategies.

VI: Cross-Cutting Insights

The three bottlenecks reinforce one another. Energy scarcity magnifies networking costs by forcing clusters into suboptimal locations. Networking inefficiencies waste both power and cooling capacity, because GPUs that sit idle waiting on data still occupy powered, cooled racks. Capital alone cannot solve these problems; alignment across layers is required.

MindCast AI highlights systemic interdependence as the hidden driver of outcomes. The foresight simulation demonstrates that true competitive advantage lies in coordinated breakthroughs across all three layers.

VII: Strategic Takeaways

The bottleneck hierarchy provides a simple test: not how many GPUs a company can buy, but whether it has the energy, network, and cooling to use them at scale. Microsoft and Google are strongest on power diversification; AWS on scale and custom silicon; Meta on renewable commitments; Apple on efficiency; Oracle on niche clustering; and NVIDIA as the architectural reference.

Energy is the defining moat. Networking sets the ceiling of model scale. Cooling determines whether expansion proceeds without friction. MindCast AI frames these takeaways as foresight signals—indicators of where institutional strategies will converge or fracture.

VIII: Closing Perspective

The bottleneck hierarchy demonstrates how predictive cognitive AI transforms analysis into foresight. MindCast AI models not just current infrastructure but how constraints cascade and where fractures or breakthroughs will occur. Encoding energy, networking, and cooling into a layered causal structure allows outcomes to be anticipated long before conventional analysis detects them.

MindCast AI’s broader mission is to apply predictive cognitive intelligence across law, economics, and institutional behavior. In each domain, the system translates complexity into foresight-ready hierarchies, reveals hidden dependencies, and simulates long-term scenarios. The data center foresight simulation shows that the same architecture used to forecast litigation strategies or market shifts can also map the physical limits of AI infrastructure.

As AI reshapes global systems, foresight becomes the most valuable currency. MindCast AI delivers it—providing not just analysis of the present but a structured vision of the future that decision-makers can act on today.