MCAI Market Vision: Broadcom's Catalytic $10B OpenAI Deal

Forecasting the Custom AI Silicon Wave (2025–2030)

Executive Summary

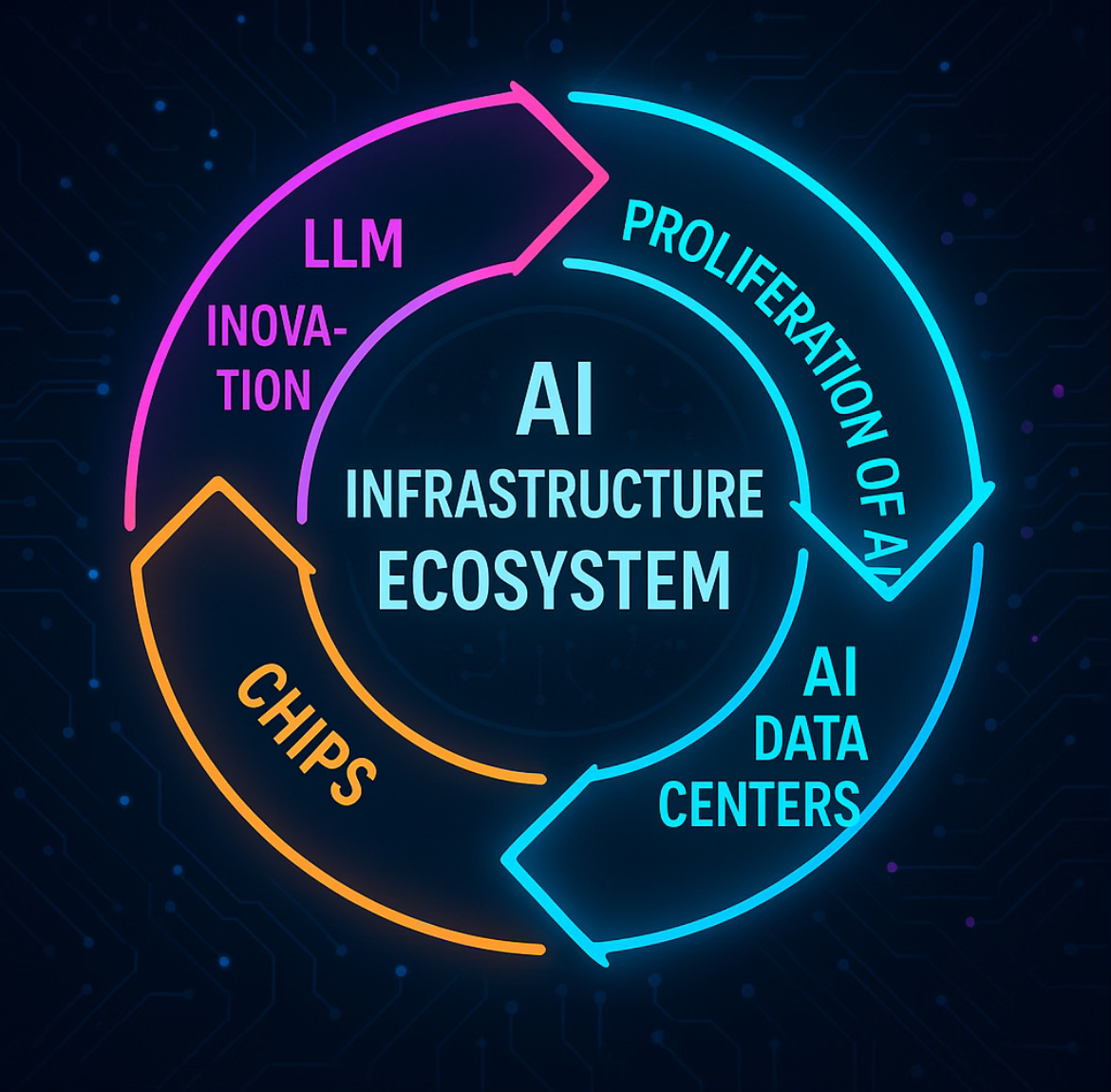

The Broadcom–OpenAI agreement marks one of the largest bespoke AI hardware deals in history, instantly shifting the balance of power in semiconductor markets. A $10 billion commitment from the leading generative AI lab signals that custom silicon has moved from speculative option to industrial necessity. MindCast AI’s foresight simulation examines how this deal becomes the opening surge in a broader wave of AI‑specific silicon adoption that will reshape global infrastructure and investment flows.

Broadcom’s $10 billion OpenAI order crystallizes the scale of demand for specialized AI chips and redefines the economics of large-scale inference. It also creates a template that hyperscalers, frontier labs, and vertical integrators are likely to replicate, accelerating the broader custom AI silicon wave.

The Catalyst Effect: Broadcom's success proves custom silicon economics work at scale, providing a reference model that other companies will adapt and replicate. The deal structure, technical approach, and financial outcomes create a template for similar partnerships across the AI ecosystem. Market validation removes execution uncertainty for both chip designers and AI companies considering custom silicon strategies.

Forward Deal Flow Forecast: We project 15-20 similar deals exceeding $1B each between 2026 and 2030, totaling $75-100B cumulatively and reaching an annual run-rate of $25-30B by 2030. Success patterns from the Broadcom-OpenAI partnership provide specific indicators for the timing, structure, and participants of the next wave of custom AI infrastructure deals.

I. MindCast AI Foresight Simulation: Methodology and Approach

This Market Vision exemplifies MindCast AI's approach to turning single catalytic events into systematic industry foresight through multi-horizon simulation modeling. The Broadcom-OpenAI deal serves as an ideal case study for showing how predictive cognitive AI can extract broader patterns from specific transactions and generate actionable forecasts across entire market ecosystems. Our methodology combines signal detection, flywheel modeling, and scenario design to bridge the gap between immediate news events and structural industry transformation.

Signal Detection and Pattern Recognition:

Identification of the Broadcom deal as a "Category 1 Catalyst": a transaction that validates new business models rather than merely scaling existing ones

Recognition of timing convergence between AI workload maturation, manufacturing capacity availability, and economic optimization pressures

Detection of structural shifts from general-purpose to workload-optimized silicon across multiple customer categories simultaneously

Multi-Horizon Foresight Integration:

Immediate (2025-2026): Direct replication deals following Broadcom's proven model

Medium-term (2026-2028): Partnership structure evolution and supply chain adaptation

Long-term (2028-2030): Market structure transformation and competitive response stabilization

Systems Impact: How the custom silicon wave reshapes competitive dynamics across the semiconductor industry

Flywheel Modeling Application:

Identification of self-reinforcing cycles: Successful deals → Reduced risk perception → Increased customer adoption → Supply chain investment → Lower costs → More deals

Quantification of compounding effects through manufacturing capacity expansion and design expertise accumulation

Recognition of constraint migration from demand uncertainty to supply chain capacity limitations
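The flywheel and the constraint migration it implies can be made concrete with a toy simulation. The sketch below is a minimal illustration under stated assumptions, not MindCast AI's simulation model: demand for new deals rises linearly with cumulative executed deals (a stand-in for falling risk perception), supply capacity grows at a fixed exogenous rate, and every parameter (`base_demand`, `adoption_gain`, `capacity0`, `capacity_growth`) is hypothetical and uncalibrated.

```python
from dataclasses import dataclass


@dataclass
class YearState:
    year: int
    demand: float    # deals customers would sign if capacity were unlimited
    capacity: float  # deals the supply chain can execute this year
    executed: float  # deals actually executed: min(demand, capacity)
    binding: str     # "demand" or "supply": which side limits deal flow


def simulate_flywheel(start_year=2025, years=6, base_demand=2.0,
                      adoption_gain=0.25, capacity0=3.0, capacity_growth=0.15):
    """Toy flywheel with a supply ceiling.

    Each executed deal lowers perceived risk, which raises next year's demand
    linearly in cumulative executed deals (adoption_gain). Capacity grows at a
    fixed exogenous rate. All parameter values are hypothetical.
    """
    cumulative = 0.0
    states = []
    for i in range(years):
        demand = base_demand * (1 + adoption_gain * cumulative)
        capacity = capacity0 * (1 + capacity_growth) ** i
        executed = min(demand, capacity)
        binding = "supply" if demand > capacity else "demand"
        states.append(YearState(start_year + i, demand, capacity, executed, binding))
        cumulative += executed  # feeds back into next year's demand
    return states


if __name__ == "__main__":
    for s in simulate_flywheel():
        print(f"{s.year}: demand={s.demand:.2f} capacity={s.capacity:.2f} "
              f"executed={s.executed:.2f} limited by {s.binding}")
```

With these illustrative defaults, deal flow is demand-limited in 2025 and 2026 and supply-limited from 2027 onward. That crossover year is an artifact of the chosen parameters; the point is the mechanism, in which a self-reinforcing demand loop eventually outruns capacity that grows at a steady rate.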

The analysis demonstrates MindCast AI's ability to transform headline-driven news into structured foresight that executives can use for strategic planning, investors can apply to portfolio construction, and policymakers can leverage for regulatory framework development. By extending from one $10B transaction to forecasting $75-100B in industry deal flow, the simulation illustrates how advanced AI can identify inflection points and project systematic adoption patterns across complex technology ecosystems.

Contact mcai@mindcast-ai.com to partner with us on AI market foresight simulations.

II. The Broadcom Catalyst: What Makes the Deal a Blueprint

Broadcom's OpenAI partnership establishes a replicable model that addresses systematic cost and performance challenges across AI infrastructure deployments. The deal validates specific economics around custom silicon development, manufacturing partnerships, and customer co-development that were previously theoretical. The validation creates a reference framework that other companies can adapt for their specific workload requirements and business models.

Key Validation Elements:

Custom silicon delivers 30-40% better cost-per-token than general-purpose alternatives for high-volume inference

18-month development cycles prove fast enough for AI model evolution timelines

Co-development partnerships reduce customer risk while enabling silicon optimization

Manufacturing partnerships with TSMC and others provide scalable production capacity

Blueprint Components:

Technical: Co-located design teams, workload-specific optimization, modular architectures

Business: Risk-sharing partnerships, volume commitments, integrated software stacks

Financial: Development cost amortization across multiple customers, pre-payment structures

The Broadcom model demonstrates that custom silicon partnerships can deliver superior economics while reducing deployment risk for AI companies. The proof of concept removes the biggest barriers to custom silicon adoption across the industry. The replicable nature of the approach ensures that similar deals will emerge as other companies recognize the cost and performance advantages demonstrated by the OpenAI partnership.

III. Market Catalyst Analysis: Why Now and What's Next

The timing of major custom silicon deals reflects the maturation of AI workloads from research experiments to production systems where cost optimization becomes paramount. Broadcom's success occurs precisely when AI companies face scaling economics that make custom solutions financially attractive rather than just technically interesting. The convergence of technical feasibility, economic necessity, and market validation creates conditions for systematic adoption across the AI ecosystem.

Market Maturation Drivers:

AI inference costs now represent 60-70% of total AI infrastructure spending

Model architectures stabilizing around transformer variants that can be optimized in silicon

Production workloads reaching scales where custom silicon development costs amortize effectively

Manufacturing capacity and design tools mature enough to support 12-18 month development cycles

Catalyst Timeline:

2024: Broadcom proves technical feasibility with initial hyperscaler partnerships

2025: OpenAI deal validates economics and removes market uncertainty

2026-2027: Second wave of deals across multiple customer categories

2028-2030: Custom silicon becomes standard practice for large-scale AI deployments

Economic Tipping Point: AI companies spending >$500M annually on inference hardware reach break-even for custom silicon investment, creating a clear addressable market of 20-30 companies by 2027.
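The tipping-point logic reduces to simple arithmetic: custom silicon pays off once annual savings, accumulated over an amortization window, cover the up-front development cost. A minimal sketch, assuming the 30-40% cost-per-token advantage from Section II translates directly into savings on annual inference-hardware spend, and using a hypothetical $450M non-recurring engineering (NRE) cost and a hypothetical three-year window; discounting, ramp-up time, and execution risk are ignored.

```python
def breakeven_annual_spend(nre_musd, savings_rate, amortization_years):
    """Annual inference-hardware spend (in $M) at which cumulative savings
    over the amortization window exactly repay the up-front custom silicon
    cost (NRE). Ignores discounting, ramp time, and execution risk.
    """
    if not 0 < savings_rate < 1:
        raise ValueError("savings_rate must be a fraction between 0 and 1")
    if amortization_years <= 0:
        raise ValueError("amortization_years must be positive")
    return nre_musd / (savings_rate * amortization_years)


if __name__ == "__main__":
    NRE_MUSD = 450.0  # hypothetical up-front design, masks, and software cost, $M
    YEARS = 3         # hypothetical amortization window
    for rate in (0.30, 0.40):  # cost-per-token advantage range cited in Section II
        spend = breakeven_annual_spend(NRE_MUSD, rate, YEARS)
        print(f"savings {rate:.0%}: break-even at ${spend:,.0f}M/yr")
```

Under these assumptions a 30% saving breaks even at $500M of annual spend and a 40% saving at $375M. The $500M match with the threshold above follows from the chosen NRE figure, not from independent data.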

The market catalyst effect extends beyond individual company decisions to create industry-wide momentum toward custom silicon adoption. Financial validation from early deals reduces perceived risks for subsequent partnerships while establishing supply chain relationships and design capabilities that accelerate future development cycles. The combination of economic pressure and proven solutions creates systematic adoption rather than isolated experiments.

IV. Partnership Structure Evolution: From Broadcom's Model to Industry Standard

The Broadcom-OpenAI deal establishes partnership frameworks that subsequent deals will adapt and refine based on specific customer requirements and market conditions. Understanding these structural innovations is crucial for predicting how future partnerships will be organized, financed, and executed across different industry segments. The evolution from Broadcom's pioneering approach to standardized industry practices will determine the accessibility and scalability of custom silicon solutions.

Broadcom's Original Structure:

Co-located design teams sharing customer workload data and performance requirements

Risk-sharing through development cost amortization and volume commitments

Integrated software stacks reducing customer deployment complexity

Manufacturing partnerships providing capacity guarantees and yield optimization

Emerging Structure Variants:

Consortium Model (Multi-Customer):

Shared development costs across 3-5 similar customers (e.g., frontier AI labs)

Common base architecture with customer-specific optimization layers

Reduced per-customer investment while maintaining customization benefits

Examples: AI safety consortium, healthcare privacy-preserving compute

Vertical Integration Model:

Customer takes equity stake in silicon partner or establishes joint venture

Shared IP ownership and exclusive access to certain optimizations

Higher commitment level but greater control over roadmap and pricing

Examples: Tesla-style partnerships where silicon becomes core competitive advantage

Platform Extension Model:

Incumbent platform companies (Nvidia, Intel, AMD) offer custom variants

Leverage existing software ecosystems while providing workload optimization

Lower switching costs for customers but potentially limited optimization depth

Market response aimed at keeping pure-play custom silicon vendors from capturing market share

The structural evolution reflects learning from early partnerships and adaptation to different customer risk profiles and business models. Standardization of partnership frameworks will reduce transaction costs and development timelines while enabling more companies to participate in custom silicon markets. The progression toward industry-standard approaches indicates that custom silicon will become a standard procurement option rather than an experimental technology partnership.
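The per-customer saving in the Consortium Model is also simple arithmetic: the shared base architecture is split across members, while each member still pays for its own optimization layer. The sketch assumes an even split and uses hypothetical figures ($600M base architecture, $100M per-customer layer); real consortia would more likely allocate by volume or negotiated share.

```python
def per_customer_cost(base_nre_musd, custom_layer_musd, n_customers):
    """Per-customer development cost (in $M) in a consortium: the common base
    architecture is split evenly, and each member pays for its own
    customer-specific optimization layer."""
    if n_customers < 1:
        raise ValueError("need at least one customer")
    return base_nre_musd / n_customers + custom_layer_musd


if __name__ == "__main__":
    BASE_NRE = 600.0      # hypothetical shared base architecture cost, $M
    CUSTOM_LAYER = 100.0  # hypothetical per-customer optimization layer, $M
    for n in (1, 3, 5):   # 1 = solo program; 3-5 = consortium size from Section IV
        cost = per_customer_cost(BASE_NRE, CUSTOM_LAYER, n)
        print(f"{n} customer(s): ${cost:.0f}M each")
```

With these illustrative numbers, per-customer cost falls from $700M for a solo program to $300M with three members and $220M with five, while each member keeps its customer-specific layer.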

V. Supply Chain and Ecosystem Implications

With partnership models now established as viable, the next challenge shifts from deal structure to execution: can the global supply chain keep pace with demand for bespoke silicon? The wave of custom silicon partnerships triggered by Broadcom's success will stress-test manufacturing and design ecosystems that were not built for simultaneous large-scale custom projects. Understanding these capacity constraints and infrastructure investments is essential for predicting which deals will succeed and when they can be executed effectively. The ecosystem implications extend beyond individual partnerships to reshape competitive dynamics across semiconductor supply chains.

Manufacturing Capacity Constraints:

TSMC advanced nodes (3nm, 2nm) expected to run near capacity through 2027

Custom silicon requires longer allocation lead times than standard product manufacturing

15-20 major deals could consume 30-40% of available advanced node capacity

New fab construction requires 3-4 years, creating near-term bottlenecks

Design and IP Ecosystem Expansion:

Current merchant silicon design teams sized for 2-3 major custom projects annually

Industry demand for 10+ simultaneous projects requires 3-5x team expansion

Specialized AI silicon design talent remains scarce, with 18-24 month hiring cycles

IP licensing and EDA tool capacity need to scale proportionally

Optical and Interconnect Dependencies:

Custom accelerators drive demand for high-bandwidth optical components (800G, 1.6T)

Current optical component manufacturing capacity insufficient for projected demand

Supply chain concentration in Asia creates geopolitical and logistics risks

New optical manufacturing requires 2-3 year capacity expansion cycles

Strategic Ecosystem Responses:

Foundry Expansion: TSMC, Samsung, Intel investing $100B+ in advanced node capacity through 2028

Design Service Scaling: Broadcom, Marvell, AMD expanding custom silicon design teams 200-300%

Optical Investment: Coherent, Lumentum, others raising capacity 150% through 2027

Geographic Diversification: Efforts to establish optical and packaging capacity outside Asia

The supply chain implications create both opportunities and constraints for custom silicon adoption. Companies that secure manufacturing capacity and design resources early will have competitive advantages in winning and executing custom silicon partnerships. However, industry-wide capacity expansion will eventually accommodate projected demand, making custom silicon partnerships more accessible and reducing development timelines.

VI. Competitive Response Patterns: How Incumbents Will React

Broadcom's success in custom silicon partnerships will trigger systematic competitive responses from established players who cannot afford to cede this growing market segment. Understanding these response patterns is crucial for predicting market structure evolution and identifying which custom silicon partnerships will face the most competitive pressure. The responses will range from direct competition to strategic partnerships that attempt to neutralize custom silicon advantages.

Nvidia's Platform Defense Strategy:

Expanded CUDA ecosystem with customer-specific optimization tools

Grace CPU integration providing system-level customization without full custom silicon

Acquisition of custom silicon design teams to offer "CUDA-compatible custom solutions"

Competitive pricing for high-volume customers to match custom silicon economics

Intel's Foundry + Design Integration:

Intel Foundry Services targeting custom AI silicon with integrated design support

Leveraging x86 ecosystem and software compatibility for easier customer migration

Geographic supply chain advantages for customers concerned about Asia dependency

Government and defense market positioning for secure custom silicon requirements

AMD's Accelerated Partnership Model:

EPYC + Instinct integration offering system-level optimization

Expanded ROCm ecosystem with customer co-development programs

Strategic partnerships with cloud providers for custom Instinct variants

Competitive response to prevent further market share erosion to custom solutions

New Entrant Strategies:

Cerebras, Graphcore, SambaNova: Positioning specialized architectures as "pre-customized" solutions

Startup Formation: New companies specifically targeting custom AI silicon design services

Private Equity Consolidation: Roll-up of smaller design teams to compete with established players

The competitive response intensity will determine how much market share custom silicon partnerships can ultimately capture from general-purpose solutions. Incumbents with strong software ecosystems and customer relationships have defensive advantages, but economic pressure from custom silicon will force strategic adaptations across the industry. The most successful responses will combine platform benefits with customization capabilities rather than defending pure general-purpose approaches.

VII. Comprehensive Deal Flow Forecast (2026-2030)

Based on the Broadcom catalyst model and market analysis, we project three distinct waves of custom silicon partnerships totaling $75-100B by 2030. Each wave reflects different economic drivers, technical requirements, and partnership structures that build on lessons learned from earlier deals. The forecast incorporates both demand-side pressures and supply-side capacity constraints that will determine actual deal timing and structure.

Wave 1: Hyperscaler Expansion (2026 H1-2027)

Microsoft-OpenAI Ecosystem: $8-12B custom inference accelerators for ChatGPT cost optimization

Timeline: 2026 H2 announcement, 2027 H2 deployment

Partners: Broadcom, Marvell, or AMD as lead designer

Economics: 35% cost reduction vs. GPU-based inference at scale

Probability: 85% - Strong economic drivers and proven partnership model

Amazon AWS: $6-8B next-generation Inferentia with merchant co-design elements

Timeline: 2026 H1 announcement, 2027 production

Partners: Internal teams + Broadcom networking integration

Rationale: Expand beyond internal workloads to third-party customer base

Probability: 75% - AWS has internal capability but merchant partnerships provide faster scaling

Google Cloud: $4-6B TPU complementary accelerators for non-Google software stacks

Timeline: 2026 H2-2027 H1 announcement

Partners: Merchant silicon partner for customer workload diversity

Market Impact: Validates hybrid internal/external silicon strategies

Probability: 65% - Google's internal preference may limit merchant partnerships

Wave 2: Frontier Lab Consortium (2027-2028)

Anthropic + AI Safety Consortium: $3-5B shared custom inference program

Structure: Multi-lab consortium sharing development costs and manufacturing capacity

Technical Focus: Constitutional AI and safety-optimized inference architectures

Rationale: Specialized AI safety features requiring custom silicon implementation

Probability: 70% - Economic benefits of shared development costs compelling

Meta AI Research: $4-7B low-power multimodal accelerators for AR/VR integration

Applications: On-device AI assistants, real-time language translation, content moderation

Innovation: Edge inference optimization with sub-10W power envelopes

Timeline: 2027 development, 2028-2029 integration with consumer devices

Probability: 80% - Critical for Meta's metaverse strategy and competitive positioning

xAI/X (formerly Twitter) Integration: $2-4B custom silicon for real-time social media AI

Applications: Content moderation, recommendation engines, real-time translation

Partnership Model: Musk's access to Tesla silicon expertise applied to social media scale

Timeline: 2027-2028 development and deployment

Probability: 60% - Dependent on xAI scaling and integration with X infrastructure

Wave 3: Vertical Industry Applications (2028-2030)

Tesla Autonomous Systems: $5-8B follow-on to Dojo for production vehicle deployment

Scale: Million+ vehicle deployment requiring cost-optimized inference silicon

Partnership Model: Tesla specifications with merchant manufacturing and support

Market Validation: Proves custom silicon economics extend beyond cloud deployments

Probability: 90% - Critical for Tesla's autonomous driving competitive advantage

Healthcare AI Consortium: $2-4B HIPAA-compliant inference accelerators

Participants: Major hospital systems, pharmaceutical companies, medical device manufacturers

Requirements: Privacy-preserving computation, regulatory compliance, edge deployment

Innovation: Custom silicon enabling federated learning and differential privacy

Probability: 55% - Regulatory complexity and fragmented customer base create challenges

Financial Services AI: $3-5B fraud detection and algorithmic trading accelerators

Participants: Goldman Sachs, JPMorgan, Citadel, Two Sigma consortium approach

Requirements: Ultra-low latency, regulatory compliance, security features

Applications: Real-time fraud detection, high-frequency trading, risk management

Probability: 70% - Strong economic incentives and existing technology investment culture

International and Government Programs

China Domestic Silicon: $10-15B across Alibaba, Baidu, ByteDance, Tencent

Driver: Export control restrictions creating necessity for domestic alternatives

Timeline: 2026-2028 aggressive development programs

Technical Approach: Domestic foundries (SMIC, others) with government support

Probability: 95% - Policy-driven necessity ensures execution

EU Sovereign AI: $2-3B consortium led by SiPearl and European partners

Focus: Government and defense applications requiring European supply chains

Timeline: 2027-2029 development with EU funding support

Applications: Sovereign AI capabilities, defense systems, industrial automation

Probability: 60% - Bureaucratic complexity may delay execution

Aggregate Forecast Summary

Total Deal Value 2026-2030: $75-100B across 15-20 major partnerships

Annual Run-Rate by 2030: $25-30B in custom silicon partnerships

Market Share Impact: Custom solutions capture 15-20% of total AI hardware spending

Geographic Distribution: 60% US, 25% China, 10% Europe, 5% Rest of World

The forecast reflects supply chain capacity constraints becoming the primary limiting factor by 2027-2028, with manufacturing allocation determining which deals can execute on schedule rather than customer demand limitations.
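As a rough cross-check, the named programs in this section can be totaled directly from their listed ranges and probabilities. The sketch uses range midpoints and treats each probability as an independent expected-value weight; both are simplifying assumptions, and partnerships not itemized above are excluded.

```python
# Named programs from Section VII: (low $B, high $B, probability of execution).
NAMED_DEALS = {
    "Microsoft-OpenAI Ecosystem": (8, 12, 0.85),
    "Amazon AWS": (6, 8, 0.75),
    "Google Cloud": (4, 6, 0.65),
    "Anthropic + AI Safety Consortium": (3, 5, 0.70),
    "Meta AI Research": (4, 7, 0.80),
    "xAI": (2, 4, 0.60),
    "Tesla Autonomous Systems": (5, 8, 0.90),
    "Healthcare AI Consortium": (2, 4, 0.55),
    "Financial Services AI": (3, 5, 0.70),
    "China Domestic Silicon": (10, 15, 0.95),
    "EU Sovereign AI": (2, 3, 0.60),
}


def summarize(deals):
    """Return unweighted low and high totals and the probability-weighted
    midpoint total, all in $B."""
    low = sum(lo for lo, _, _ in deals.values())
    high = sum(hi for _, hi, _ in deals.values())
    expected = sum((lo + hi) / 2 * p for lo, hi, p in deals.values())
    return low, high, expected


if __name__ == "__main__":
    low, high, expected = summarize(NAMED_DEALS)
    print(f"Named programs, unweighted: ${low}-{high}B")
    print(f"Named programs, probability-weighted midpoint: ${expected:.1f}B")
```

On these inputs the eleven named programs total $49-77B unweighted and about $49.7B probability-weighted, so the $75-100B aggregate implies additional partnerships beyond those itemized, consistent with the 15-20 partnership count.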

VIII. Investment and Strategic Implications

The custom silicon wave triggered by Broadcom’s deal will redirect capital, reshape valuation logic, and redefine competitive boundaries across AI infrastructure. Understanding these shifts is essential for anticipating who captures value and how leadership evolves between 2025 and 2030.

Investment Flow Redirection: Capital will migrate toward high‑margin design services, with fabless chipmakers commanding premium multiples. Foundry expansion is set to exceed $200B by 2028, while optical interconnect firms could see 40–60% demand growth. Early movers that lock in manufacturing capacity will become pivotal nodes in the new supply chain.

Valuation Framework Evolution: Custom silicon partnerships resemble multi‑year software contracts, giving investors visibility into recurring revenue streams. Intellectual property portfolios, co‑design capabilities, and time‑to‑market advantages will drive valuation premiums of 40–60%, a shift that MCAI foresight identified ahead of market consensus.

Market Structure Changes: Companies without the scale for billion‑dollar silicon investments risk marginalization. Industry dynamics are tilting from transactional chip sales toward long‑term equity‑based alliances and joint ventures. MCAI Market Vision forecasts these partnership architectures as structural features of the next AI hardware cycle, not anomalies.

For investors, operators, and policymakers, the pattern is clear: capital will concentrate around those who master integration of silicon, supply chains, and software ecosystems. MCAI’s predictive framework highlights how Broadcom’s breakthrough is less an isolated windfall than a systemic signal — a template others will be forced to follow.

Appendix: MindCast AI AI Infrastructure Series

Oracle AI Supercluster — Infrastructure Advantage in the Age of AI Sovereignty (Sept 2025): Oracle's landmark $300B partnership with OpenAI signals a fundamental transformation in AI infrastructure, where computational scale depends more heavily on energy, cooling, and interconnect capabilities than raw GPU procurement. Analysis demonstrates how Oracle can convert contractual arrangements into sustained competitive advantage through strategic investments in energy-secure AI superclusters. Market forecasting indicates that infrastructure specialization, rather than general-purpose cloud parity, will define Oracle's long-term market positioning. www.mindcast-ai.com/p/oracleopanai

AI Datacenter Edge Computing, Ship the Workload Not the Power — AI Datacenter Edge Computing as the Adaptive Outlet for Infrastructure Bottlenecks (Sept 2025): Forward-looking analysis demonstrates how hyperscalers confront grid limitations, cooling challenges, and community resistance that will constrain centralized scale-out strategies. Research forecasts a structural migration toward distributed edge data centers, where computational workloads relocate rather than requiring additional megawatts at centralized facilities. Edge computing emerges as an adaptive pressure release mechanism within the broader AI infrastructure build-out cycle. www.mindcast-ai.com/p/edgeaidatacenters

The Bottleneck Hierarchy in U.S. AI Data Centers — Predictive Cognitive AI and Data Center Energy, Networking, Cooling Constraints (Aug 2025): A comprehensive reframing of the AI infrastructure race reveals that scaling extends far beyond GPUs to encompass energy, cooling, and interconnect bottlenecks that will determine long-term competitiveness. The study introduces the "Bottleneck Hierarchy" as a systemic analytical framework for investors, operators, and regulators. Analysis anticipates a fundamental shift in value capture from compute chips to the physical infrastructure layers underlying AI operations. www.mindcast-ai.com/p/aidatacenters

VRFB's Role in AI Energy Infrastructure — Perpetual Energy for Perpetual Intelligence (Aug 2025): In-depth examination positions vanadium redox flow batteries (VRFBs) as scalable, long-duration energy storage solutions critical for stabilizing AI data center operations. By enabling continuous renewable power availability, VRFBs significantly reduce hyperscaler dependence on increasingly strained electrical grids. Strategic foresight positions VRFB adoption as fundamental infrastructure required for sustainable AI sector growth. www.mindcast-ai.com/p/vrfbai

Nvidia's Moat vs. AI Datacenter Infrastructure-Customized Competitors — How Infrastructure Bottlenecks Could Reshape the Future of AI Compute (Aug 2025): Strategic analysis challenges Nvidia's market dominance by examining competitor strategies focused on custom, bottleneck-aligned infrastructure approaches. Research illuminates how merchant silicon providers including Broadcom, Marvell, and Intel Foundry Services can erode CUDA's market grip through workload-specific chip co-design strategies. Competition in AI emerges not as a singular GPU race but as a comprehensive systemic contest encompassing networks, packaging, and energy infrastructure. www.mindcast-ai.com/p/nvidiachallenges