MCAI Cultural Innovation Vision: Introduction to Algorithmic Culture Trilogy

Identity, Trust, and Coordination Under AI Acceleration

I. The Trilogy Arc

Algorithmic engagement systems compress the tempo of identity formation, social trust calibration, and institutional response across the entire cultural system. The critical word is tempo. AI-driven recommendation engines do not merely increase the volume of content—they accelerate feedback loops, tighten reinforcement cycles, and outpace the adaptive capacity of individuals and institutions alike. A 2018 analysis of “Facebook harms teens” captured scale; the 2025 problem is that AI compresses the interval between stimulus and behavioral modification to hours rather than weeks, making traditional regulatory and parental response cycles structurally inadequate.

The problem extends far beyond youth safety. Every layer—youth, adults, institutions—adapts simultaneously, fails to adapt simultaneously, and generates externalities that cascade through the others. The dominant framing treats algorithmic harm as a child safety problem requiring parental vigilance and platform accountability. Political convenience drives that framing; analytical adequacy does not. Algorithmic mediation reshapes cognition, attention, trust, and social calibration at every level of the cultural stack. Youth function as the diagnostic surface—where harm manifests first and most visibly—but the pathology extends through adults who model the behaviors they regulate, and into institutions whose response velocity cannot match technological acceleration.

Installment I: Growing Up Algorithmic (forthcoming) examines youth as the early-warning system. Adolescents absorb algorithmic distortion first because their identity architectures remain under construction, their impulse regulation systems lack full development, and their social calibration mechanisms optimize for peer sensitivity. The data here diagnoses what algorithmic compression does to developing systems. The analysis reveals that youth outcomes depend heavily on adult coherence and institutional response—they do not stand alone.

Installment II: Living Algorithmic (forthcoming) examines adults as the transmission layer. Adults occupy a paradoxical position: they control formal gatekeeping (parental controls, school policies, platform regulation) while modeling the behaviors they regulate and experiencing their own algorithmic reshaping. The central question asks whether adults amplify youth resilience or transmit their own fragmentation. Evidence suggests the latter dominates: adults cannot transmit coherence they do not possess, trust they do not feel, or attention discipline they do not practice.

Installment III: Design Accountability or Fragmentation (forthcoming) examines institutions as the coordination architecture. Courts, legislatures, platforms, and regulatory bodies constitute the formal mechanisms through which society might respond. The question asks whether coordination architecture can adapt faster than algorithmic systems can destabilize it—or whether fragmented, constitutionally contested, partisan-captured responses will produce more harm than the underlying problem. The legal pivot from content moderation to design accountability marks the critical juncture.

The trilogy’s core insight holds that these three layers form a causal chain with feedback loops rather than independent domains requiring separate analysis. Youth harm generates political pressure, which drives institutional response (Installment III). Adult incoherence undermines intervention effectiveness, which worsens youth outcomes (Installment I). Institutional trust collapse reduces adult confidence, which fragments cultural transmission (Installment II). Legal fragmentation creates compliance arbitrage, which advantages platforms, which maintains engagement mechanics, which sustains harm (Installment I).
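The chain described above can be sketched as a small directed graph. This Python sketch is illustrative only: the node labels are shorthand chosen here for the stages named in the paragraph, and `CAUSAL_EDGES` and `downstream` are hypothetical names, not part of any MCAI model. It lists every stage reachable from a starting point, which shows how legal fragmentation cascades into youth harm and then into institutional response.

```python
from collections import deque

# Edges paraphrase the four chains in the paragraph above.
# Node labels are shorthand assumptions, not terms from an MCAI model.
CAUSAL_EDGES = {
    "youth harm": ["political pressure"],
    "political pressure": ["institutional response"],
    "adult incoherence": ["weaker interventions"],
    "weaker interventions": ["youth harm"],
    "institutional trust collapse": ["lower adult confidence"],
    "lower adult confidence": ["fragmented cultural transmission"],
    "legal fragmentation": ["compliance arbitrage"],
    "compliance arbitrage": ["platform advantage"],
    "platform advantage": ["engagement mechanics persist"],
    "engagement mechanics persist": ["youth harm"],
}

def downstream(start, edges=CAUSAL_EDGES):
    """Return every node reachable from `start`, in breadth-first order."""
    seen, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order

for stage in downstream("legal fragmentation"):
    print(stage)
```

Starting from "adult incoherence" instead traces the second chain into the same youth harm node, which is the sense in which the layers share outcomes rather than operate independently.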

Single-layer interventions will fail. Parental controls without platform design changes produce workarounds. Platform design changes without adult modeling coherence produce credibility gaps. Legal mandates without constitutional durability produce fragmentation. The trilogy maps the full system to identify intervention points with cascading positive effects.

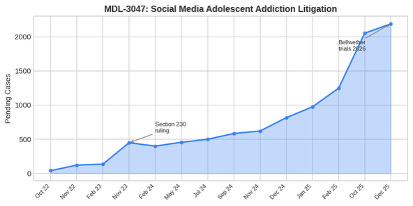

MDL-3047 litigation grew from 39 cases at formation (October 2022) to 2,191 pending cases (December 2025). The judicial system attempts to address what legislative and regulatory systems have not—Installment III made concrete. Outcomes will depend on what courts learn about youth harm (Installment I) and whether adult coherence can translate legal victories into cultural change (Installment II).

CORE FORESIGHT

Design-specific liability, driven by litigation rather than legislation, will partially stabilize youth outcomes by 2028—but only for families with sufficient adult coherence. The design-versus-content distinction emerging in MDL-3047 will prove judicially workable; platforms will modify engagement mechanics pre-emptively to manage liability exposure. These modifications will help youth in the “managed engagement” trajectory class shift toward resilient adaptation. However, fragmentation will persist elsewhere: low-coherence families will see limited benefit from design changes they cannot reinforce; partisan-captured trust will produce differential compliance; and state-level regulatory patchwork will consolidate market power in large platforms while doing little for users in non-compliant jurisdictions. The feedback loop between litigation, design change, and outcome improvement operates too slowly to stabilize outcomes for the current adolescent cohort.

Contact mcai@mindcast-ai.com to partner with us on Cultural Innovation foresight simulations.

II. Installment I: Growing Up Algorithmic

Youth as Early-Warning System

Primary audience: Public health researchers, pediatricians, school administrators, parents, and educators seeking evidence-based understanding of youth digital harm patterns.

Youth serve as the entry point to the trilogy, not its subject. Adolescents function as the diagnostic surface where algorithmic stress first becomes visible—not because the problem belongs uniquely to them, but because their developmental stage makes them most sensitive to perturbation. Data in Installment I establishes baseline harm distribution, identifies trajectory classes distinguishing resilient adaptation from spiraling harm, and reveals upstream dependencies on adult coherence (Installment II) and institutional response (Installment III).

II.A. Position in the Trilogy

Identity architectures still forming show greater susceptibility to compression. Impulse regulation systems still maturing show greater vulnerability to variable-ratio reinforcement. Social calibration mechanisms optimized for peer sensitivity show greater capture by algorithmic amplification. Youth outcomes partially depend on the adult coherence environment in which development occurs and the institutional responses that shape platform behavior. Youth represent where harm surfaces, but causes and solutions extend through the entire cultural stack.

II.B. Evidence Base

II.B.1. Platform Saturation

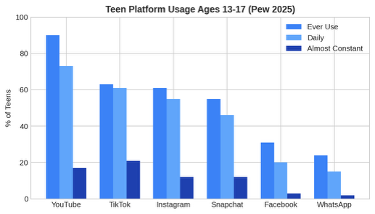

Pew Research Center (December 2024, April 2025) documents near-universal platform penetration among teens ages 13-17. YouTube leads at 90% usage, with 73% daily use and 17% “almost constant” use. TikTok follows at 63% usage but leads in engagement intensity: 21% report almost constant use—the highest rate of any platform. Instagram (61%), Snapchat (55%), and Facebook (31%) complete the major platform roster. WhatsApp grew from 17% (2022) to 24% (2025)—the only platform showing significant growth.

The aggregate metric demands attention: 36% of teens use at least one platform “almost constantly.” Ambient immersion has replaced occasional engagement. The attention architecture of adolescence now revolves around algorithmic feeds.

Demographic disparities shape intervention design. Black teens show significantly higher “almost constant” use: 35% for YouTube (vs. 8% White), 28% for TikTok (vs. 8% White), 22% for Instagram (vs. 6% White). Hispanic teens fall between these groups. Differential access to alternative activities, differential targeting by recommendation algorithms, and differential community adoption patterns likely drive these disparities. Interventions producing disparate compliance burdens will exacerbate existing inequities.

II.B.2. Causal Direction

The UCSF longitudinal study (Nagata et al., JAMA Network Open, May 2025) resolves a critical methodological debate. Researchers tracked 11,876 children from ages 9-10 through ages 12-13 and established directional causality: increased social media use predicts later depressive symptoms, but depressive symptoms do not predict increased social media use. Temporal precedence with controlled confounds delivers causation, not merely correlation.

Effect magnitude proves substantial. Over three years, average daily social media use rose from 7 minutes to 73 minutes (10x increase), while depressive symptoms increased 35% from baseline. Controls for baseline mental health, socioeconomic factors, and family environment did not eliminate the relationship. The “depressed kids seek social media” counter-narrative fails empirically in this dataset.
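The arithmetic behind the usage figures can be checked directly. The following is a minimal Python sketch using only the 7-minute and 73-minute values and the three-year span reported above; the variable and function names are illustrative.

```python
def fold_change(before, after):
    """Ratio of the later value to the earlier one."""
    return after / before

minutes_start, minutes_end, years = 7, 73, 3

fold = fold_change(minutes_start, minutes_end)
added_per_year = (minutes_end - minutes_start) / years

print(f"fold change: {fold:.1f}x")
print(f"average added per year: {added_per_year:.0f} minutes per day")
```

The ratio is slightly above ten, so "10x" is a rounded figure.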

Connection to Installment II: The UCSF study tracks children through the age range where parental oversight transitions to adolescent autonomy. The 7-to-73-minute trajectory reflects parental decisions about device access, household screen norms, and modeling behaviors—not platform design alone. Installment II’s analysis of adult transmission proves essential: changing platform design without changing adult behavior produces limited effects.

II.B.3. Youth Self-Perception

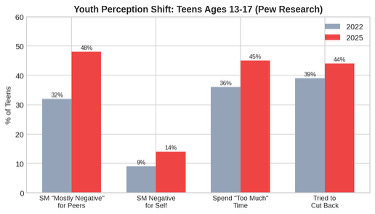

Pew Research (April 2025) documents a striking shift in teen self-awareness. The share believing social media has a “mostly negative” effect on people their age rose from 32% (2022) to 48% (2025)—a 16-percentage-point increase in three years. Teens increasingly recognize the problem yet continue the behaviors they critique.

Revealed preference under constraint explains the pattern, not ignorance. When asked about their own experience, 45% say they spend “too much time” on social media, and 44% report having tried to cut back. Platform design defeats self-regulation. Variable-ratio reinforcement, notification interrupts, infinite scroll, and social comparison mechanics create engagement that persists despite conscious resistance. Teens understand the problem; they cannot escape it.

Connection to Installment III: The gap between teen awareness (48% see harm) and teen behavior (36% almost constant use) defines precisely the gap institutional intervention must close. Teen self-regulation fails despite awareness. Adult enforcement fails due to coherence problems (Installment II). Only design changes or legal mandates can alter the equilibrium. The design-versus-content legal distinction (Installment III) proves decisive: it determines whether courts can reach the engagement mechanics that defeat self-regulation.

II.B.4. Gender Disparity

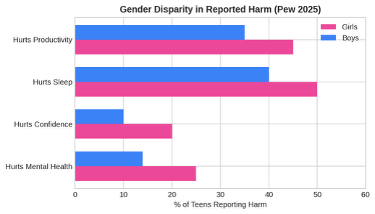

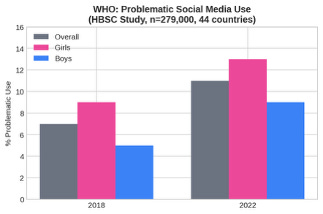

Teen girls report significantly higher harm rates across every metric measured. Mental health impact: 25% of girls vs. 14% of boys report social media hurts their mental health. Confidence: 20% vs. 10%. Sleep: 50% vs. 40%. Productivity: 45% vs. 35%. The WHO HBSC study confirms the pattern globally: 13% of girls show problematic social media use versus 9% of boys.

Multiple mechanisms likely contribute. Girls show higher rates of social comparison, appearance-based feedback seeking, and relational aggression exposure on visual platforms like Instagram. Algorithmic amplification of idealized images and social hierarchies interacts with developmental sensitivities around identity and peer acceptance. Interventions ignoring gender-differentiated harm patterns will underperform.

Connection to Installment II: Gender disparity in youth harm mirrors gender patterns in adult social media use. Adult women show higher Instagram engagement and higher rates of appearance-related social comparison. Mothers’ social media behaviors may disproportionately model the patterns daughters then absorb. Transmission operates through gendered channels, not generic ones. Interventions targeting adult modeling may require gender-specific components.

II.B.5. Global Validation

The WHO Health Behaviour in School-aged Children study provides the largest cross-national dataset: 279,117 adolescents across 44 countries. Problematic social media use rose from 7% (2018) to 11% (2022)—a 57% relative increase in four years. The pattern holds across geographies, regulatory regimes, and cultural contexts. Platform design drives the phenomenon, not American cultural factors.
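Because the paragraph above mixes percentage shares with a relative increase, it helps to keep the two measures apart. The following Python sketch uses only the WHO figures quoted above; the function names are illustrative.

```python
def point_change(before_pct, after_pct):
    """Difference between two shares, in percentage points."""
    return after_pct - before_pct

def relative_change(before_pct, after_pct):
    """Change expressed as a percentage of the starting share."""
    return (after_pct - before_pct) / before_pct * 100

before, after = 7, 11  # problematic use, 2018 and 2022

print(f"percentage-point change: {point_change(before, after)}")
print(f"relative change: {relative_change(before, after):.0f}%")
```

A rise of 4 percentage points on a base of 7% is what yields the 57% relative increase.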

Connection to Installment III: Global consistency of harm patterns undermines platform claims that problems reflect local cultural factors or inadequate parenting. The same engagement architectures produce the same outcomes across 44 countries with radically different educational systems, family structures, and regulatory environments. The platforms represent the common cause—strengthening the case for design accountability.

II.C. Analytical Framework

The Chicago School framework applies behavioral precision to youth outcomes through three analytic lenses.

Coase Function: Transaction costs determine whether youth, parents, or platforms bear the harm mitigation burden. Currently, platforms externalize costs to families while monetizing engagement. Parental monitoring carries high cost and low efficacy (teens evade; parents lack technical knowledge). Design changes would internalize costs to platforms but require a legal mandate. Absent legal intervention, the low-transaction-cost party (platforms) will not voluntarily bear costs it can externalize. See Chicago School Accelerated, Part I: Coase and Why Transaction Costs ≠ Coordination Costs (December 2025).

Becker Function: Teens rationally respond to incentive structures. Platforms offer variable-ratio reinforcement (social validation, entertainment, connection) at near-zero marginal cost. Alternative activities (sports, arts, in-person socializing) require coordination, scheduling, and often money. The rational teen maximizes reward per unit of effort, and algorithmic feeds dominate that calculus. Interventions restricting access without improving alternatives will generate substitution to unregulated platforms or workarounds. See Chicago School Accelerated, Part II: Becker and the Economics of Incentive Exploitation (December 2025).

Posner Function: Legal learning velocity determines how quickly courts develop workable standards. The design-versus-content distinction is crystallizing through MDL-3047 and state litigation. If courts establish that engagement mechanics (notifications, infinite scroll, autoplay, algorithmic amplification) can trigger liability separate from user-generated content, platforms will modify designs pre-emptively. See Chicago School Accelerated, Part III: Posner and the Economics of Efficient Liability Allocation (December 2025).

II.D. Predictions

Four predictions emerge from Installment I’s analysis, each with explicit falsification criteria.

Prediction I-1: Trajectory Divergence. Youth outcomes will increasingly diverge into three trajectory classes: (a) resilient adaptation (15-20%), characterized by high self-regulation and alternative activity engagement; (b) managed engagement (50-60%), characterized by awareness of harm but continued high use; (c) spiraling harm (20-25%), characterized by problematic use, mental health decline, and self-reinforcing feedback loops. The middle category will prove unstable: most of its members will drift toward (a) or (c) depending on the adult and institutional environment, so the distribution bifurcates over time.
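The three class ranges in Prediction I-1 are only coherent if some combination of shares inside them totals 100%. The following Python sketch checks that, using the ranges stated in the prediction; `CLASS_RANGES` and `shares_can_total_100` are illustrative names.

```python
# Share ranges (low %, high %) as stated in Prediction I-1.
CLASS_RANGES = {
    "resilient adaptation": (15, 20),
    "managed engagement": (50, 60),
    "spiraling harm": (20, 25),
}

def shares_can_total_100(ranges):
    """Return (sum of lows, sum of highs, whether 100 lies between them)."""
    low = sum(lo for lo, _ in ranges.values())
    high = sum(hi for _, hi in ranges.values())
    return low, high, low <= 100 <= high

low, high, feasible = shares_can_total_100(CLASS_RANGES)
print(f"lows sum to {low}%, highs sum to {high}%, feasible: {feasible}")
```

The lows sum to 85% and the highs to 105%, so the ranges are mutually consistent but cannot all sit at their lower or upper bounds at once.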

Prediction I-2: Gender Gap Widening. Gender disparity in harm will increase through 2027 absent design intervention. Visual platforms optimized for appearance-based engagement will continue disproportionately affecting girls. The gap will narrow only if platforms modify recommendation algorithms to reduce social comparison content—modifications they will not make voluntarily because such content drives engagement.

Prediction I-3: Self-Regulation Ceiling. Teen self-regulation efforts will plateau. The share reporting attempts to cut back (44%) will not significantly increase because platform design, not awareness, represents the binding constraint. Teens who want to cut back but cannot already appear in the count; additional awareness campaigns will not move the needle. Only design changes or external constraints will shift the distribution.

Prediction I-4: Litigation Driver. Youth harm data will prove decisive in bellwether trials (Summer 2026). UCSF causal-direction findings and WHO global consistency will survive Daubert challenges and establish the evidentiary foundation for design accountability. Platforms will settle significant numbers of individual cases to prevent jury verdicts that could anchor future damages.

II.E. Falsification Criteria

Prediction I-1 fails if trajectory distribution remains stable (no bifurcation) or if the middle category proves durable. Prediction I-2 fails if gender gap narrows without platform design changes. Prediction I-3 fails if self-regulation rates increase significantly (above 50%) through awareness interventions alone. Prediction I-4 fails if courts exclude UCSF and WHO data or if platforms do not settle to avoid jury verdicts.
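The falsification criteria above are specific enough to check mechanically once data arrives. As a minimal sketch, the following Python function encodes only the Prediction I-3 criterion. The function name, its parameters, and the boolean flag standing in for "awareness interventions alone" are illustrative assumptions; the 50% threshold comes from the text.

```python
def prediction_i3_fails(cut_back_share_pct, design_or_external_constraint):
    """Return True if Prediction I-3 would count as falsified.

    cut_back_share_pct: share of teens reporting attempts to cut back.
    design_or_external_constraint: True if design changes or external
        constraints occurred in the period (assumed to be known to the
        analyst; the source does not say how this would be measured).
    """
    return cut_back_share_pct > 50 and not design_or_external_constraint

print(prediction_i3_fails(44, False))  # current reported share
print(prediction_i3_fails(52, False))  # rise through awareness alone
print(prediction_i3_fails(52, True))   # rise alongside design changes
```

Only the second case falsifies the prediction: the share crosses 50% with no design change or external constraint to explain it.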

III. Installment II: Living Algorithmic

Adults as Transmission Layer

Primary audience: Cultural commentators, organizational leaders, HR executives, religious and civic institutions, and anyone responsible for transmitting norms across generations.

Adults represent a parallel layer of the same problem, not the solution to a youth problem—and they carry the additional burden of transmission responsibility. Installment II asks whether adults amplify youth resilience or transmit their own fragmentation. The answer determines whether Installment I’s predictions about youth trajectories skew toward adaptation or harm, and whether Installment III’s institutional responses translate into cultural change.

III.A. Position in the Trilogy

Adults experience their own algorithmic reshaping: attention fragmentation, trust recalibration, identity compression. Adults also occupy the formal positions from which cultural transmission occurs: parents, teachers, coaches, employers, legislators. The question becomes whether adults can transmit coherence they do not possess.

Evidence suggests they cannot. Adults use the same platforms, exhibit the same engagement patterns, and experience the same cognitive effects as the youth they nominally regulate. Institutions deploy AI systems while restricting student use. Parents scroll during dinner while lecturing about screen time. Legislators demonstrate profound misunderstanding of the technologies they regulate. The credibility gap undermines every formal intervention. Youth perceive incoherence correctly; they do not fail to listen.

III.B. Evidence Base

III.B.1. Platform Parity

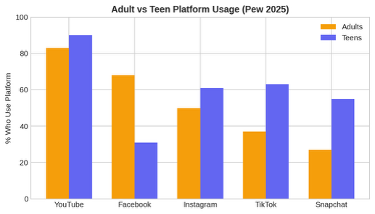

Pew Research (November 2025) documents adult use of comparable engagement architectures. YouTube: 83% of adults vs. 90% of teens. Facebook: 68% of adults vs. 31% of teens (Facebook skews older). Instagram: 50% of adults vs. 61% of teens. TikTok: 37% of adults vs. 63% of teens. Snapchat: 27% of adults vs. 55% of teens.

Engagement mechanics function identically across age groups. Adults experience the same infinite scroll, the same notification interrupts, the same algorithmic curation. A parent lecturing a teen about TikTok while scrolling Instagram transmits no coherent message about attention management—the parent demonstrates the same vulnerability to the same design patterns. The credibility problem reflects functional incoherence, not moral hypocrisy.

Connection to Installment I: The UCSF study tracks children ages 9-13—the period of maximum parental influence on media habits. Parental modeling during that window partially determines whether children enter adolescence with self-regulation capacity or algorithmic dependency. The 7-to-73-minute trajectory reflects household media environments shaped by adult behavior, not platform design alone.

III.B.2. The Credibility Gap

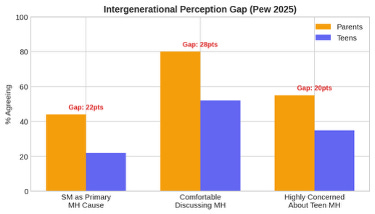

Pew data reveals profound asymmetries in parent-teen perceptions. Among parents concerned about teen mental health, 44% cite social media as the primary cause. Among similarly concerned teens, only 22% agree—a 22-percentage-point gap. Parents believe they understand the problem; teens believe parents do not understand their experience.

The communication gap extends further. Eighty percent of parents report being comfortable discussing mental health with their teens, but only 52% of teens report the same comfort, a 28-percentage-point gap. Parents believe communication channels remain open; teens experience them as closed or performative. Credibility failure drives the gap, not effort failure. Teens perceive that adults do not understand their digital environment, model problematic behaviors themselves, and offer guidance disconnected from platform realities.

Connection to Installment III: The credibility gap explains why parental control solutions underperform. Teens who do not trust parental understanding of their digital experience will evade controls rather than internalize limits. Legal mandates relying on parental consent (like age verification requiring parental approval) assume a trust relationship the data shows to be compromised. Effective interventions must either restore adult credibility or operate independently of it.

III.B.3. Institutional Trust Collapse and Partisan Capture

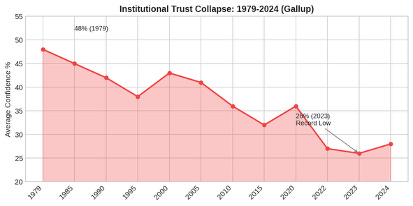

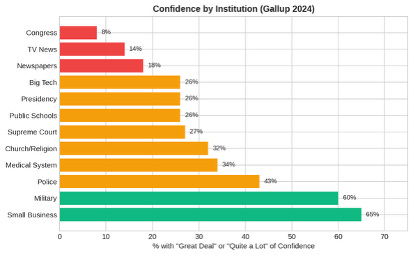

Gallup has tracked confidence in institutions since 1973. Average confidence fell from 48% (1979) to 26% (2023)—nearly halved over four decades, with 2023 marking a record low. Only two institutions command majority confidence: small business (65%) and the military (60%). The institutions most relevant to youth protection fare poorly: public schools (26%), big tech (26%), the presidency (26%), criminal justice (21%), TV news (14%), Congress (8%).

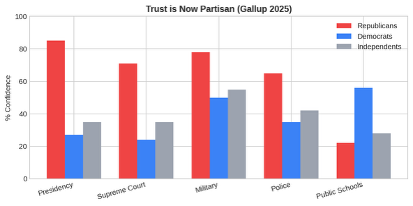

Gallup 2025 data reveals a structural transformation: trust has become entirely contingent on party control. Republicans show 85% confidence in the presidency versus 27% for Democrats—a 58-point gap. The Supreme Court shows 71% Republican confidence versus 24% Democratic—a 47-point gap. When a party controls an institution, its adherents trust it; otherwise they do not. Stable institutional legitimacy independent of political outcomes no longer exists.
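The partisan gaps quoted above can be recomputed and averaged, which is useful because Prediction II-3 later refers to average gaps exceeding 40 points. The following Python sketch uses only the two institutions and four confidence figures given in the paragraph; the variable names are illustrative.

```python
# Confidence by party (%), as quoted from the Gallup 2025 data above.
confidence = {
    "presidency": {"Republican": 85, "Democrat": 27},
    "Supreme Court": {"Republican": 71, "Democrat": 24},
}

gaps = {
    institution: abs(by_party["Republican"] - by_party["Democrat"])
    for institution, by_party in confidence.items()
}
average_gap = sum(gaps.values()) / len(gaps)

for institution, gap in gaps.items():
    print(f"{institution}: {gap}-point gap")
print(f"average gap: {average_gap} points")
```

With only two institutions this average is a rough indicator, not the full measure the prediction would need.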

Adults cannot transmit trust they do not feel. When half the adult population distrusts whatever institutions the other half trusts, coherent cultural response becomes nearly impossible. Youth absorb this fragmentation: they learn that institutional authority is contingent, contested, and tribal rather than stable, legitimate, and shared.

Connection to Installments I and III: Youth trajectory bifurcation (Prediction I-1) will correlate with household partisan environment—families with higher baseline institutional trust will produce more resilient youth. Institutional trust levels also predict compliance with institutional mandates (Installment III): parents who distrust government will resist age verification; citizens who distrust courts will not change behavior in response to verdicts. The trust substrate constrains institutional effectiveness regardless of legal merit.

III.C. Analytical Framework

The Chicago School framework applies behavioral precision to adult transmission dynamics through three analytic lenses.

Coase Function: Coordination costs determine whether coherent cultural transmission occurs. High-coherence families (consistent messaging, modeled behavior, trusted communication) achieve coordination at low cost. Low-coherence families face high transaction costs for every intervention: arguments about screen time, evasion of controls, credibility challenges. Families below a coherence threshold will not achieve effective coordination regardless of tool availability.

Becker Function: Adults rationally allocate attention based on reward structures. Platform engagement offers immediate, variable-ratio reinforcement. Parenting offers delayed, uncertain returns. The rational adult underinvests in attention-intensive parenting and overinvests in attention-capturing scrolling. Interventions relying on adult attention allocation without changing reward structures will underperform. Incentive compatibility, not knowledge, represents the binding constraint.

Posner Function: Adult behavior change responds to legal and social signals. Litigation producing verdicts that change platform design (Installment III) reduces the need for adult-mediated enforcement. When platforms modify engagement mechanics to reduce liability exposure, adults can transmit the new default rather than fight the old one. Legal developments may therefore reduce the burden on adult coherence.

III.D. Predictions

Four predictions emerge from Installment II’s analysis, each with explicit falsification criteria.

Prediction II-1: Coherence as Multiplier. Adult coherence will prove the strongest predictor of youth trajectory outcomes, exceeding platform exposure, school intervention, or peer effects. Identical legal or design interventions applied in high-coherence versus low-coherence family environments will produce divergent outcomes, with effects two to three times larger in high-coherence environments. Improving adult coherence will amplify the effectiveness of any intervention more than the intervention itself.

Prediction II-2: Credibility Gap Persistence. The parent-teen perception gap (22-28 points) will not narrow through communication programs or digital literacy initiatives. The gap reflects structural incoherence (adults model what they regulate against), not communication failure. Only changes in adult behavior—reduced personal platform engagement, consistent household media policies—will close the gap.

Prediction II-3: Partisan Trust Divergence. Institutional trust will continue to diverge by party, with average gaps exceeding 40 points on politically salient institutions by 2028. Divergence will produce differential compliance with platform regulation: Democratic households will comply with regulations passed under Democratic administrations; Republican households will comply with regulations passed under Republican administrations. Bipartisan legislation will face implementation headwinds from both sides.

Prediction II-4: Adult Harm Recognition. By 2027, surveys will show majority adult recognition of personal algorithmic harm (attention fragmentation, doomscrolling, social comparison), paralleling the youth perception shift documented in Installment I. Recognition will not produce behavior change without design intervention—adults will exhibit the same awareness-behavior gap as teens. Shared recognition may, however, create political constituency for design regulation.

III.E. Falsification Criteria

Prediction II-1 fails if interventions produce equivalent outcomes regardless of family coherence levels. Prediction II-2 fails if communication programs significantly narrow the parent-teen gap without adult behavior change. Prediction II-3 fails if partisan trust gaps narrow or if compliance patterns do not correlate with partisan alignment. Prediction II-4 fails if adult harm recognition does not increase or if recognition produces significant behavior change without design intervention.

IV. Installment III: Design Accountability or Fragmentation

Institutional Coordination or Collapse

Primary audience: Judges, legislators, platform policy teams, regulatory agency staff, litigation strategists, and constitutional law scholars tracking the design accountability doctrine.

Institutions constitute the formal coordination architecture through which society responds to collective challenges: courts interpret law and allocate liability, legislatures write rules, regulators implement mandates, and platforms design systems in response to incentives. Installment III asks whether coordination architecture can adapt faster than algorithmic engagement systems can destabilize it. The outcomes will determine whether Installment I’s youth harm trends reverse or intensify, and whether institutional intervention can compensate for Installment II’s adult coherence failures.

IV.A. Position in the Trilogy

Evidence presents mixed signals. On one hand, the legal system develops new frameworks: the design-versus-content distinction emerging in MDL-3047 may establish that engagement mechanics can trigger liability separate from user-generated content. State legislatures pass laws at unprecedented rates: 300+ bills across 45+ states in 2025 alone. Federal legislation achieved bipartisan supermajority support in the Senate.

On the other hand, constitutional challenges block laws in multiple states. Federal legislation stalls in the House. Partisan capture of institutional trust (Installment II) undermines implementation. Regulatory fragmentation creates arbitrage opportunities that advantage incumbent platforms. Installment III forecasts which pathways lead to cultural stabilization and which accelerate fragmentation.

IV.B. Evidence Base

IV.B.1. State Legislative Fragmentation

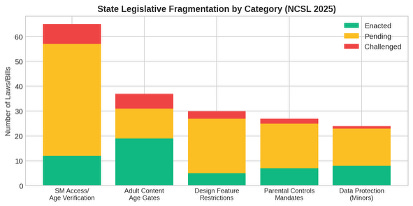

The National Conference of State Legislatures documents over 300 bills pending across 45+ states in 2025. Social media access and age verification accounts for the most activity, with 12 enacted laws, 45 pending, and 8 challenged. Adult content age verification shows 19 enacted, 12 pending, 6 challenged. Design feature restrictions: 5 enacted, 22 pending, 3 challenged. Parental control mandates: 7 enacted, 18 pending, 2 challenged.
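The category counts above are easier to compare side by side. The following Python sketch tabulates them exactly as quoted; `BILLS` and the other names are illustrative. The source does not say whether "challenged" laws are also counted under "enacted", so the column totals are computed separately and not combined into one grand total.

```python
# (enacted, pending, challenged) per category, as quoted above.
BILLS = {
    "Social media access / age verification": (12, 45, 8),
    "Adult content age verification": (19, 12, 6),
    "Design feature restrictions": (5, 22, 3),
    "Parental control mandates": (7, 18, 2),
}

print(f"{'Category':<42}{'Enacted':>8}{'Pending':>8}{'Challenged':>11}")
for name, (enacted, pending, challenged) in BILLS.items():
    print(f"{name:<42}{enacted:>8}{pending:>8}{challenged:>11}")

# Column totals; columns may overlap, so they are not summed together.
totals = [sum(column) for column in zip(*BILLS.values())]
print(f"{'Total':<42}{totals[0]:>8}{totals[1]:>8}{totals[2]:>11}")

most_enacted = max(BILLS, key=lambda name: BILLS[name][0])
most_pending = max(BILLS, key=lambda name: BILLS[name][1])
print(f"most enacted: {most_enacted}")
print(f"most pending: {most_pending}")
```

Adult content age verification has the most enacted laws, while social media access and age verification has the most pending bills.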

Proliferation creates compliance complexity that advantages large platforms. Meta, Google, and ByteDance maintain legal teams that can navigate a 50-state patchwork. Smaller platforms cannot. The Electronic Frontier Foundation documents that Bluesky and Dreamwidth blocked Mississippi users entirely rather than risk liability under that state’s age verification law. Fragmented regulation produces market consolidation as a side effect.

Connection to Installment I: State fragmentation affects youth outcomes through differential exposure. Teens in states with enacted laws face different platform experiences than teens in states with blocked laws. Age verification causing platform blocking may push teens toward less-regulated platforms or VPNs—potentially concentrating harm rather than reducing it.

IV.B.2. NetChoice Litigation Campaign

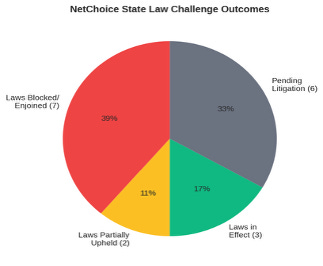

NetChoice, the tech industry group representing Meta, Google, TikTok, and others, has challenged youth protection laws in 18+ states. Results through December 2025: 7 laws permanently blocked or enjoined (Arkansas, Ohio, Georgia, Utah, parts of Texas), 2 laws partially upheld (California SB 976), 3 laws in effect (Florida, Mississippi, Louisiana), and 6 in pending litigation.
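The outcome counts above can be tallied to confirm they match the 18 challenges cited and to show each outcome's share. The following Python sketch uses only the four counts quoted in the paragraph; the names are illustrative.

```python
# Outcome counts through December 2025, as quoted above.
OUTCOMES = {
    "blocked or enjoined": 7,
    "partially upheld": 2,
    "in effect": 3,
    "pending litigation": 6,
}

total = sum(OUTCOMES.values())
print(f"total laws tallied: {total}")
for outcome, count in OUTCOMES.items():
    print(f"{outcome}: {count} ({count / total:.0%})")
```

The four counts sum to 18, and blocked or enjoined laws are the largest single outcome at roughly two in five.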

The pattern reveals constitutional vulnerabilities. Broad age verification requirements trigger strict scrutiny as prior restraints on speech access. Laws requiring parental consent for all social media use face challenges as overbroad. But design-specific provisions—like California’s “addictive feed” ban—survive intermediate scrutiny. The Ninth Circuit (September 2025) upheld provisions requiring parental consent for algorithmic feeds while striking provisions restricting “likes” as content-based regulation.

Connection to Installment II: NetChoice arguments rely partly on parental rights framing: parents should decide children’s media access, not government. The framing resonates because it aligns with adult autonomy preferences. But it assumes the parental coherence that Installment II shows to be compromised. Parents who cannot effectively regulate—because they model the same behaviors and lack technical knowledge—transform the parental rights argument into a vector for regulatory arbitrage rather than a genuine autonomy protection.

IV.B.3. The Design-Versus-Content Pivot

The most significant legal development emerges in the distinction between platform design and user-generated content. Section 230 of the Communications Decency Act immunizes platforms from liability for content posted by users. The open question: does Section 230 also immunize platforms from liability for design choices that amplify, recommend, and sequence that content?

November 2023: In MDL-3047, Judge Rogers rules that Section 230 does not bar negligence claims based on platform design features. Engagement mechanics (notifications, infinite scroll, autoplay, algorithmic recommendation) constitute platform choices, not user content.

July 2024: The Supreme Court in Moody v. NetChoice recognizes platforms as “editors” with First Amendment protection for content curation decisions. The Court does not categorically bar regulation—it remands for proper facial analysis, leaving design accountability theories alive.

August 2025: The Supreme Court allows Mississippi’s parental consent law (HB 1126) to remain in effect pending appeal, the first indication that some state regulations may survive.

September 2025: The Ninth Circuit upholds California’s “addictive feed” ban under intermediate scrutiny. The court accepts that restricting algorithmic feeds for minors constitutes content-neutral regulation of platform conduct, not speech restriction. The “likes” provision fails as content-based, demonstrating the distinction’s granularity.

Summer 2026: MDL-3047 bellwether trials begin. Six school districts will test whether design features (notifications, infinite scroll, autoplay) can trigger liability for youth mental health harm separate from any specific content.

Connection to Installment I: The design-versus-content distinction matters for youth because engagement mechanics that defeat self-regulation (Installment I) constitute design choices. Notifications interrupt attention; infinite scroll defeats satiation; autoplay prevents natural stopping points; algorithmic recommendation amplifies social comparison content. If courts establish that these mechanics can trigger liability, platforms are likely to modify them pre-emptively, and youth self-regulation attempts will face fewer headwinds.

IV.B.4. Federal Legislative Stalemate

Federal legislation has achieved bipartisan support but not enactment. The Kids Online Safety Act (KOSA) passed the Senate 91-3 in 2024, a supermajority crossing party lines. The December 2025 House version removed the “duty of care” provision, the core accountability mechanism that would have required platforms to prevent harm, not merely disclose it. Senator Blumenthal called the removal “an abdication of responsibility.”

COPPA 2.0 (expanding children’s privacy protections to teens) passed the Senate alongside KOSA but remains in House committee. The Kids Off Social Media Act (KOSMA), which would ban under-13 users entirely, sits in Senate committee. The only youth protection measure to become law: the TAKE IT DOWN Act (May 2025), requiring platforms to remove non-consensual intimate images within 48 hours—a narrow provision addressing a specific harm.

Connection to Installment II: Federal stalemate reflects the partisan trust dynamics documented in Installment II. When half of adults distrust whatever institutions the other half trusts, legislative compromise becomes difficult. The 91-3 Senate vote shows bipartisan consensus remains possible, but House fragmentation shows that enactment still faces headwinds.

IV.C. Analytical Framework

The Chicago School framework applies behavioral precision to institutional coordination dynamics through three analytic lenses.

Coase Function: Transaction costs determine whether coordination occurs at federal, state, or market level. Federal coordination carries high cost (legislative gridlock, constitutional constraints) but produces uniform rules. State coordination carries lower cost but produces fragmentation. Market coordination (platform self-regulation) carries lowest cost but produces inadequate outcomes. Coordination settles at the level where marginal benefits exceed marginal transaction costs. At present that level is litigation, which explains why the MDL-3047 docket and state AG suits constitute the primary action.

Becker Function: Platforms rationally respond to liability exposure. Pre-litigation, expected cost of design changes exceeds expected cost of harm externalization—so platforms maintain engagement mechanics. Post-verdict, expected costs shift. A bellwether verdict establishing design accountability would change the calculus: platforms would modify designs to reduce liability exposure even without regulatory mandate.
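The Becker logic above reduces to an expected-cost comparison, which a minimal Python sketch can make concrete. The names `LiabilityScenario`, `expected_liability`, and `platform_modifies` are hypothetical, and every number is an illustrative placeholder in arbitrary units rather than an estimate drawn from the sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LiabilityScenario:
    """Hypothetical inputs; none of these figures come from the source."""
    p_adverse_verdict: float  # probability a court imposes design liability
    expected_damages: float   # damages and settlement exposure if liable
    redesign_cost: float      # engineering cost plus lost engagement revenue


def expected_liability(s: LiabilityScenario) -> float:
    """Expected cost of keeping current engagement mechanics unchanged."""
    return s.p_adverse_verdict * s.expected_damages


def platform_modifies(s: LiabilityScenario) -> bool:
    """Becker-style rule: redesign only when redesign is the cheaper option."""
    return s.redesign_cost < expected_liability(s)


# Illustrative placeholders: a verdict raises the perceived probability of
# liability while damages and redesign cost stay fixed.
pre_litigation = LiabilityScenario(0.05, 1_000.0, 200.0)
post_verdict = LiabilityScenario(0.60, 1_000.0, 200.0)

print(platform_modifies(pre_litigation))  # False: 200 is not below 50
print(platform_modifies(post_verdict))    # True: 200 is below 600
```

In this sketch the only parameter that moves is the probability of an adverse verdict, which mirrors the claim that a bellwether verdict can change platform behavior without any regulatory mandate.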

Posner Function: Legal learning velocity tracks how quickly courts develop workable standards. The design-versus-content distinction crystallizes through iterative motion practice. Each ruling refines the boundary: “addictive feeds” are regulable; “likes” are content-based. By the Summer 2026 bellwethers, courts will have substantial precedent.

IV.D. Predictions

Five predictions emerge from Installment III’s analysis, each with explicit falsification criteria.

Prediction III-1: Design Accountability Crystallizes. The design-versus-content distinction will prove judicially workable and will reshape platform architecture within 36 months. MDL-3047 bellwether trials will establish that engagement mechanics—separate from user-generated content—can trigger liability. Platforms will modify notification systems, autoplay defaults, and recommendation algorithms in response to litigation risk before regulatory mandates crystallize.

Prediction III-2: Federal Stalemate Persists. No comprehensive federal youth protection legislation will be enacted before 2028. KOSA will either fail or pass in weakened form without duty of care. Federal action will be reactive—responding to state court verdicts and platform changes—rather than proactive.

Prediction III-3: Fragmentation Produces Consolidation. The patchwork of state laws will produce market consolidation as a side effect. Platforms with resources to comply (Meta, Google, ByteDance) will maintain market position. Smaller platforms and new entrants will face prohibitive compliance costs. By 2028, youth social media use will concentrate more heavily in large platforms than in 2025.

Prediction III-4: Pre-emptive Modification. Major platforms will announce voluntary design changes—notification limits, default time restrictions, algorithmic transparency for minors—within 18 months, framed as safety initiatives but driven by litigation risk management. Changes will be real but insufficient: platforms will preserve core engagement mechanics (infinite scroll, variable-ratio reinforcement) while modifying peripheral features.

Prediction III-5: Evasion Cascades. Age verification regimes requiring ID upload or biometric scanning will generate evasion cascades. Teens will use VPNs, borrowed credentials, or platform-specific workarounds. Verification burden will fall disproportionately on legitimate users while motivated users circumvent.

IV.E. Falsification Criteria

Prediction III-1 fails if courts reject the design-versus-content distinction or if platforms do not modify architecture in response to litigation within the 36-month window. Prediction III-2 fails if comprehensive federal legislation with duty of care is enacted before 2028. Prediction III-3 fails if the concentration of youth social media use in large platforms decreases rather than increases between 2025 and 2028. Prediction III-4 fails if major platforms announce no voluntary design changes within 18 months, or if the changes they make produce significant (>10%) improvement in Installment I youth metrics. Prediction III-5 fails if age verification produces durable compliance without significant evasion.
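One way to keep these criteria auditable is to record each falsifying condition and score it as evidence arrives. The sketch below is a hypothetical bookkeeping aid, not part of the trilogy's method: `FALSIFIERS`, `evaluate`, and `iii4_falsified` are invented names, the observations are placeholders rather than real outcomes, and the III-4 check assumes a harm metric where lower values are better.

```python
from typing import Dict, Mapping, Optional

# Falsifying condition for each prediction, paraphrased from Section IV.E.
FALSIFIERS: Dict[str, str] = {
    "III-1": "courts reject the distinction or platforms do not modify design",
    "III-2": "federal law with duty of care enacted before 2028",
    "III-3": "market concentration decreases rather than increases",
    "III-4": "voluntary changes improve youth metrics by more than 10%",
    "III-5": "age verification yields durable compliance without evasion",
}


def evaluate(observed: Mapping[str, Optional[bool]]) -> Dict[str, str]:
    """Label each prediction 'falsified', 'standing', or 'undetermined'.

    observed[pid] is True when the falsifying condition has occurred, False
    when the window closed without it, and None or absent when unknown.
    """
    report: Dict[str, str] = {}
    for pid in FALSIFIERS:
        flag = observed.get(pid)
        if flag is None:
            report[pid] = "undetermined"
        else:
            report[pid] = "falsified" if flag else "standing"
    return report


def iii4_falsified(baseline: float, followup: float,
                   threshold: float = 0.10) -> bool:
    """True if the harm metric fell by more than `threshold` (relative)."""
    improvement = (baseline - followup) / baseline
    return improvement > threshold


# Placeholder observations, not real outcomes.
report = evaluate({"III-2": True, "III-5": False})
print(report["III-1"], report["III-2"], report["III-5"])
# undetermined falsified standing

print(iii4_falsified(20.0, 17.0))  # True: a 15% relative improvement
print(iii4_falsified(20.0, 19.0))  # False: a 5% relative improvement
```

Keeping "undetermined" as a separate state matters here because most of the evaluation windows have not yet closed.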

V. Integrated Predictions

Installment-specific predictions interact to produce system-level outcomes that no single-layer analysis can capture. Section V synthesizes across the three layers to forecast the most likely trajectories, identify key interaction effects, and map alternative scenarios with their strategic implications.

V.A. Central Prediction: Partial Stabilization Through Litigation

The most likely trajectory produces partial cultural stabilization driven by litigation rather than legislation or voluntary action. MDL-3047 bellwether verdicts (2026) will establish design accountability for engagement mechanics. Platforms will modify designs pre-emptively to manage liability exposure. Modifications will reduce harm at the margins—particularly for the “managed engagement” trajectory class in Installment I—but will not eliminate problematic use patterns for the most vulnerable.

Stabilization will be partial because: (1) platforms will preserve core engagement mechanics while modifying peripheral features; (2) adult coherence failures (Installment II) will continue to undermine intervention effectiveness; (3) institutional fragmentation (Installment III) will prevent uniform implementation; (4) new platforms and features will outpace regulatory response.

V.B. Key Interaction Effects

Youth-Adult Interaction: Adult coherence (Prediction II-1) will prove more predictive of youth outcomes than platform exposure level. Identical design interventions will produce divergent results based on family environment. Installment III legal victories will have differential effects—helping youth in high-coherence families more than youth in low-coherence families, potentially widening outcome disparities even as average harm decreases.

Adult-Institution Interaction: Partisan trust capture (Prediction II-3) will constrain institutional effectiveness regardless of legal merit. Families distrusting the institutions implementing regulations will find ways to opt out or evade. Successful litigation (Prediction III-1) will face implementation headwinds in households with low institutional trust.

Institution-Youth Feedback: Youth harm data (Installment I) will drive litigation success (Installment III), which will modify platform design, which will affect youth outcomes. This corrective feedback loop provides the primary mechanism for improvement. The loop operates slowly: litigation takes years, design changes take months to implement and longer to affect outcomes, and measurement lags further. The feedback may not stabilize before a generation of adolescents passes through the system.

V.C. Alternative Scenarios

Optimistic Scenario: Rapid Legal Crystallization. Bellwether verdicts produce clear design accountability standards. Platforms implement substantial changes (not compliance theater). Adult harm recognition (Prediction II-4) creates political constituency for enforcement. Federal legislation passes with duty of care intact. Outcome: significant reduction in problematic use (Installment I) by 2028; narrowing of intergenerational perception gap (Installment II); regulatory convergence reduces fragmentation (Installment III).

Pessimistic Scenario: Constitutional Collapse. The Supreme Court takes a NetChoice appeal and rules broadly that platform design choices constitute protected editorial discretion. State laws collapse across the board. MDL-3047 claims face a First Amendment bar. Platforms face no liability exposure and no incentive to change. Outcome: continued harm acceleration (Installment I); adult coherence failures compound (Installment II); institutional response capacity exhausted (Installment III).

Base Case: Muddled Middle. Courts draw narrow lines that allow some design regulation but not comprehensive reform. Platforms make incremental changes. Some states have enforceable laws; others face injunctions. Federal legislation remains stalled. Outcome: marginal improvement in aggregate metrics but continued bifurcation (Installment I); persistent credibility gap (Installment II); ongoing legal uncertainty (Installment III). Current evidence supports this trajectory as most likely.

V.D. Strategic Implications

For Policymakers: Focus on design-specific, protocol-based interventions that survive constitutional scrutiny. Avoid broad age-gating that triggers strict scrutiny and produces evasion. Coordinate across states to reduce compliance arbitrage. Accept that litigation will drive more change than legislation in the near term.

For Platforms: Anticipate design accountability verdicts and modify engagement mechanics pre-emptively. Companies moving first will define the new equilibrium and shape regulatory expectations. Resistance through litigation delay represents a short-term strategy with long-term reputation costs.

For Parents and Educators: Recognize that institutional intervention will be slow and partial. Family-level coherence represents the controllable variable. Model the attention behaviors you want to transmit. Acknowledge algorithmic effects on your own cognition before attempting to regulate children’s. The credibility gap closes through demonstrated behavior, not communicated concern.

For Investors: The regulatory crystallization timeline spans 24-36 months. Platforms with engagement mechanics most vulnerable to design accountability (notification-heavy, infinite-scroll-dependent, minor-concentrated user bases) face elevated risk. Platforms pre-emptively modifying designs may incur short-term engagement costs but reduce long-term liability exposure.

Appendix. Sources

Primary sources span peer-reviewed research, government data, legislative records, and court filings. All predictions derive from publicly available evidence subject to independent verification.

Pew Research Center. Teens, Social Media and Mental Health. April 2025.

Pew Research Center. Teens, Social Media and AI Chatbots 2025. December 2025.

Pew Research Center. Americans’ Social Media Use 2025. November 2025.

WHO Regional Office for Europe. Teens, Screens and Mental Health (HBSC Study). September 2024.

Nagata JM et al. Social Media Use and Depressive Symptoms During Early Adolescence. JAMA Network Open. May 2025.

Gallup. Confidence in Institutions. 2022-2025.

Gallup. U.S. Trust in Government Depends Upon Party Control. November 2025.

NCSL. Social Media and Children 2025 Legislation.

MDL-3047 Court Records. In re: Social Media Adolescent Addiction. N.D. Cal.

NetChoice v. Bonta. 9th Cir. September 2025.

Moody v. NetChoice. 603 U.S. ___ (2024).

Electronic Frontier Foundation. Age Verification Resource Hub. December 2025.