MCAI Economics Vision: Chicago School Accelerated — Integrated Application, AI Copyright Liability Migration

When Behavioral Incapacity Forces Risk Upstream

For the law and behavioral economics framing behind this foresight simulation, see MCAI Economics Vision: Chicago School Accelerated — Integrated Application, AI Hallucinations, AI Copyright, and Crypto ATMs (Dec 2025).

Executive Summary

AI copyright litigation concerns where residual risk must land once licensing coordination fails and users cannot protect themselves. Even if courts permit broad model training under fair-use doctrine, liability will migrate upstream because foundation model developers (OpenAI, Anthropic, Meta) and platform integrators (Microsoft, Google) control the only scalable prevention surfaces: retrieval design, excerpt throttling, attribution systems, logging, auditability, and recall.

The completed Coase–Becker–Posner arc from the Chicago School Accelerated series structures the analysis. Coase explains why coordination failed, Becker explains why exploitation persists, and Posner explains where liability must land. Each layer builds on the prior; none suffices alone. See Chicago School Accelerated — The Integrated Framework (Dec 2025).

Courts cannot stabilize downstream liability even if they want to. Downstream allocation fails because users cannot reliably avoid harm ex ante and therefore cannot generate deterrence or learning through private precautions. Installment I of the Chicago Accelerated Liability series, Crypto ATM Liability Migration, exhibits the same causal geometry: downstream actors cannot behaviorally adjust, upstream actors control architecture, and liability reallocation becomes the only mechanism capable of producing deterrence.

The thesis does not depend on how courts resolve fair use in isolation. Behavioral incapacity and architectural control force liability migration regardless of doctrinal entry point.

The Capacity Theorem

Classical efficiency analysis asks which actor can prevent harm at the lowest cost. Behavioral economics requires a prior inquiry: which actor can prevent harm at all once cognitive limits bind. The Capacity Theorem extends efficiency analysis by introducing behavioral incapacity as a binding constraint.

Structural non-avoiders cannot serve as efficient risk bearers regardless of cost comparisons. Allocating risk to a non-avoider produces deadweight loss because that party cannot adjust behavior in response to price signals. In AI copyright contexts, users cannot reliably discount, verify, or avoid AI-generated substitution harms due to automation bias, disclaimer habituation, and verification friction.

Capacity Rule. If a party lacks the behavioral ability to avoid harm ex ante, that party cannot serve as an efficient risk bearer as a matter of law, regardless of nominal price sensitivity.

Courts already reallocate duty where reliance and control diverge—product liability, negligent design, and informed consent doctrines all rest on the same logic. AI copyright presents an identical structural condition: users rely on outputs they cannot evaluate, while AI developers control the architecture that produces harm.

Once behavioral incapacity binds, efficiency requires liability to follow prevention capacity rather than nominal price. The Capacity Theorem anchors every downstream prediction in the simulation.
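The two-step logic of the Capacity Rule can be sketched in code: first screen out parties that cannot avoid harm at all, then apply the classical lowest-cost comparison to whoever remains. This is a minimal illustration, not part of the simulation itself; the `Party` type, the `efficient_risk_bearer` function, and the cost figures are hypothetical placeholders chosen only to show that a nominally cheaper non-avoider never wins the comparison.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Party:
    name: str
    can_avoid: bool          # behavioral capacity to avoid harm ex ante
    prevention_cost: float   # nominal prevention cost (illustrative units)


def efficient_risk_bearer(parties: list[Party]) -> Optional[Party]:
    """Apply the capacity screen first, then the lowest-cost comparison."""
    # Step 1 (behavioral prior inquiry): drop structural non-avoiders.
    capable = [p for p in parties if p.can_avoid]
    if not capable:
        return None  # no party can avoid the harm at all
    # Step 2 (classical efficiency): cheapest avoider among capable parties.
    return min(capable, key=lambda p: p.prevention_cost)


# Hypothetical figures: the user looks cheaper on paper but cannot avoid harm.
parties = [
    Party("end user", can_avoid=False, prevention_cost=1.0),
    Party("AI developer", can_avoid=True, prevention_cost=100.0),
]
bearer = efficient_risk_bearer(parties)
print(bearer.name)  # AI developer
```

The ordering of the two steps is the point: the cost comparison never sees the non-avoider, so nominal price sensitivity cannot override incapacity.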

I. Why Artificial Intelligence Copyright Disputes Are Structurally Hard

AI copyright disputes resist resolution because they fragment legal analysis across multiple acts while concealing substitution pathways. Doctrine designed for end-user copying cannot cleanly map onto probabilistic pattern extraction and recomposition. The litigation center of gravity has shifted from metaphysical debates about transformation to observable economic substitution.

A. Layered Legal Acts and Substitution Pathways

Modern AI systems fragment copyright analysis into distinct acts across the stack. Model developers ingest large corpora to train systems, store and transform works through embeddings and retrieval mechanisms, and deploy outputs that summarize, excerpt, or reproduce protected material. Each act triggers different legal theories and evidentiary burdens.

Plaintiffs pursue layered claims that attack training, intermediate use, and output simultaneously. Fragmented legal acts obscure substitution pathways, but substitution evidence—once shown—collapses doctrinal distinctions and forces courts to allocate responsibility across layers.

Structural fragmentation prevents resolution through a single doctrinal holding. Substitution evidence forces cross-layer allocation.

B. Speech Versus Product Classification

Speech doctrines allocate risk downstream based on listener autonomy. Product-safety doctrines allocate risk upstream based on asymmetric control and latent defects. Behavioral evidence demonstrates that users of AI outputs lack the interpretive autonomy that speech frameworks assume.

Consumer-facing AI outputs function as products in the efficiency-relevant sense: they deliver informational services where users cannot independently evaluate accuracy, provenance, or licensing status. The classification turns on functional reliance, not expressive content. A search-synthesis response that substitutes for reading an article operates as a functional product regardless of whether it also constitutes expression.

Product-style duties better align liability with prevention capacity. Consumer-facing AI systems resemble defect-prone products because users cannot evaluate risk ex ante, cannot observe provenance, and cannot audit truth or licensing at reasonable cost.

Efficiency favors product-style duties for consumer-facing systems regardless of expressive content. Classification as functional product sets the direction of liability migration.

AI copyright disputes are structurally hard because they fracture doctrine and conceal substitution pathways while undermining speech-based risk allocation. Structural forces push courts toward architectural responsibility.

II. Parties and Institutions Modeled

The simulation models actors across the AI copyright ecosystem to identify how asymmetric control and asymmetric learning speeds produce predictable allocation outcomes. Foundation model developers, search-synthesis operators, and platform integrators differ in architectural control; governance institutions differ in adaptation speed. Control variation explains exposure divergence, while throughput variation explains timing.

A. AI Developers and Platform Integrators

Three actor categories exercise different degrees of architectural control over training, retrieval, and output surfaces:

Foundation model developers (OpenAI, Anthropic, Meta) control training corpora and model behavior

Search-and-synthesis operators (Perplexity, AI search features) combine retrieval with generation

Platform integrators (Microsoft, Google) distribute AI through existing products at massive scale

Control variation explains divergence in liability exposure. Search-and-synthesis operators face the highest substitution risk because their outputs directly compete with source material. Platform integrators amplify exposure through scale, distribution, and user habituation.

Control surface area, not intent, differentiates exposure across AI actors.

B. Content Owners and Governance Institutions

Content owners span premium journalism, book publishers, music rights holders, and visual licensors. Governance institutions include the Copyright Office, consumer protection agencies, state attorneys general, courts, and legislatures.

Governance institutions differ sharply in coordination capacity and adaptation speed. State enforcement moves faster than federal legislation; platform integrators adapt faster than courts; insurers price risk before appellate doctrine stabilizes. Divergence in throughput shapes timing rather than direction of outcomes.

Institutional throughput determines when change occurs, not whether it occurs.

The modeled ecosystem contains asymmetric control and asymmetric learning speeds. Asymmetries drive predictable allocation outcomes regardless of doctrinal contingency.

III. Integrated Cognitive Digital Twin Simulation Results

The Cognitive Digital Twin (CDT) simulation integrates five analytical lenses from the Chicago School Accelerated framework. Each lens operates sequentially rather than independently, producing a single causal trajectory. Coordination failure enables incentive exploitation; incentive exploitation produces behavioral patterns that efficiency analysis can allocate; causal validation confirms which pathways bear evidentiary weight; institutional dynamics determine timing.

A. Coordination Failure Analysis

Licensing coordination collapses where open distribution and synthesis architectures bypass market gates. News and music possess clearer substitution channels and stronger rights infrastructure than books. Visual content occupies an intermediate position due to partial licensing maturity.

Coordination failure explains why private ordering did not precede litigation. Absent focal points and shared licensing standards, rational actors defected rather than negotiated.

Coordination collapse is the necessary precondition for every downstream dispute.

B. Incentive Re-Optimization Analysis

Once coordination fails, incentives reward scraping, synthesis, and rapid deployment. Feature-level compliance becomes preferable to exit because output controls cost less than retraining. Litigation persists because expected returns exceed expected penalties until liability crystallizes.

Observed behavior aligns with rational exploitation rather than negligence or confusion. AI developers continue expansion despite legal uncertainty because the payoff gradient rewards growth over caution.

Incentive structure explains persistence of disputed conduct despite litigation risk.

C. Efficient Liability Allocation Analysis

The Hand formula clarifies allocation by comparing prevention costs to expected harm. Annual prevention costs for major AI developers reach approximately $250–450 million across filtering, attribution, licensing, and audit systems. Identifiable spend categories include engineering headcount for retrieval and excerpt controls, licensing outlays for high-risk domains, compliance tooling for provenance workflows, and audit infrastructure for logs and replay.

Annual expected harm from substitution, licensing displacement, litigation, and reputational damage reaches approximately $2.5–5.0 billion. Observable signals include revenue displacement claims, subscription losses, settlement pressure, litigation reserves, and brand-risk costs that insurers and distribution partners increasingly price.

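Across the stated ranges, expected harm exceeds prevention costs by roughly six to twenty times (about 5.6 at the least favorable pairing of $2.5 billion against $450 million, and 20 at the most favorable pairing of $5.0 billion against $250 million). Foundation model developers, search-synthesis operators, and platform integrators are therefore the lowest-cost avoiders and, given behavioral non-avoidance downstream, the only capable avoiders.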
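The comparison can be checked directly from the two ranges stated above. The sketch below computes the widest span of harm-to-cost ratios those ranges allow and applies the Hand test in its most conservative form (highest prevention cost against lowest expected harm). The function names are illustrative, and the sketch assumes the article's expected-harm figures already represent probability times loss.

```python
# Ranges taken from the text above, in US dollars per year.
PREVENTION_COST = (250e6, 450e6)   # low and high estimates of burden B
EXPECTED_HARM = (2.5e9, 5.0e9)     # low and high estimates of P x L


def ratio_bounds(harm, cost):
    """Return the smallest and largest harm-to-cost ratios the ranges allow."""
    lowest = harm[0] / cost[1]    # least harm against most expensive prevention
    highest = harm[1] / cost[0]   # most harm against cheapest prevention
    return lowest, highest


def precaution_justified(harm, cost):
    """Hand test, conservative form: B < P x L even at the worst pairing."""
    return cost[1] < harm[0]


low, high = ratio_bounds(EXPECTED_HARM, PREVENTION_COST)
print(f"{low:.1f}x to {high:.1f}x")                            # 5.6x to 20.0x
print(precaution_justified(EXPECTED_HARM, PREVENTION_COST))    # True
```

Pairing like with like (low harm with low cost, high harm with high cost) gives ratios of roughly 10 to 11, inside that span. The directional result holds at every pairing, which is what the allocation argument relies on.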

Efficiency requires upstream allocation once behavioral non-avoidance binds.

D. Causal Pathway Validation

Causal Signal Integrity (CSI) analysis confirms that output-layer substitution, lyrics recall, visual memorization, and hallucinated attribution form load-bearing pathways. Training-only claims without output evidence require additional discovery to establish causation.

Logging gaps, prompt–response replayability, and attribution hallucinations provide the clearest causal chain between architecture and market harm. Plaintiffs can connect system design choices to specific substitution events through output analysis.

Output behavior provides the strongest causal anchor for enforcement and the lowest-friction discovery pathway.

E. Institutional Adaptation and Disclosure Dynamics

State enforcement and agency guidance move faster than legislation. Platform integrators adapt faster than courts. Firms prefer process narratives over verifiable provenance until discovery compels disclosure.

Insurers move faster than appellate doctrine. Underwriting exclusions and coverage conditions will require output controls, attribution practices, and logging standards before courts converge on a stable test. Market practices therefore stabilize before doctrine converges.

Institutional speed differentials explain why contracts, underwriting, and settlements precede statutes and appellate clarity.

The integrated simulation produces a single prediction: liability migrates upstream first through output duties, then propagates backward toward training controls.

IV. Structural Isomorphism with Crypto ATM Liability Migration

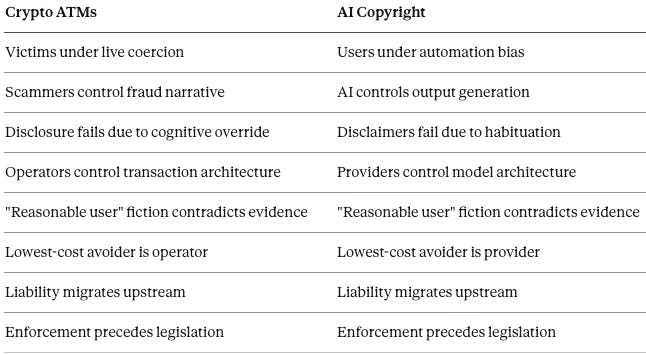

Cross-domain validation strengthens confidence that AI copyright outcomes reflect structural forces rather than contingent doctrinal choices. Installment I of the Chicago Accelerated Liability series—Crypto ATM Liability Migration—exhibits identical causal geometry despite different facts. Both domains demonstrate that behavioral incapacity downstream forces liability upstream regardless of the specific harm mechanism.

Different facts. Identical causal structure.

When downstream actors cannot behaviorally adjust, law, regulation, and insurance converge on upstream architectural control. The framework identifies recurring causal geometry rather than domain-specific narratives.

Isomorphism across installments validates the structural nature of the prediction. AI copyright liability migration follows the same trajectory as crypto ATM liability migration because both domains satisfy the Capacity Theorem’s conditions.

V. Forecasts and Allocation Rules

Predictions flow directly from the integrated simulation. Scenario variation affects speed but not direction. Allocation rules follow from efficiency analysis once behavioral capacity supplies the principled boundary.

A. Scenario Outlook

The most likely equilibrium permits some training while imposing strict output obligations. A secondary equilibrium consolidates standardized licensing and revenue sharing. A lower-probability scenario involves injunction-driven redesign of search-and-synthesis products.

Timing varies by institution, but direction remains stable across scenarios. Platform integrators will move first because scale gives them the largest aggregate exposure and they possess the highest adaptation velocity.

Scenario variation affects speed, not destination.

B. Rules for Efficient Allocation

Efficient allocation requires strict liability for consumer-facing output substitution, paired with safe harbors for verified licensing and attribution. Disclaimer defenses fail where users lack avoidance capacity. Enterprise deployments justify narrower defenses due to institutional oversight and controlled workflows.

The framework predicts architectural duties and cost internalization, not categorical bans on training or output. AI developers who build licensing infrastructure, attribution systems, and audit capacity can continue operating under safe harbor protection. Innovation survives; externalization does not.

Behavioral capacity supplies the principled boundary for liability rules.
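The allocation rules in this subsection can be read as a short decision procedure. The sketch below encodes one such reading. The `Deployment` fields and outcome labels are hypothetical, the safe harbor is assumed to require both verified licensing and verified attribution, and enterprise deployments are left as a separate assessment because the text specifies only that their defenses differ in scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    consumer_facing: bool
    output_substitutes_for_source: bool
    verified_licensing: bool
    verified_attribution: bool
    relies_on_disclaimer: bool  # recorded, but never changes a consumer outcome


def allocation(d: Deployment) -> str:
    """One reading of the allocation rules; labels are illustrative."""
    if not d.output_substitutes_for_source:
        return "outside the substitution rule"
    if not d.consumer_facing:
        # Enterprise scope is not specified in enough detail to model here.
        return "enterprise: defenses assessed separately"
    # Assumption: the safe harbor requires licensing AND attribution.
    if d.verified_licensing and d.verified_attribution:
        return "safe harbor"
    # Disclaimers are deliberately ignored: users lack avoidance capacity.
    return "strict liability"


with_disclaimer = Deployment(True, True, False, False, relies_on_disclaimer=True)
with_safe_harbor = Deployment(True, True, True, True, relies_on_disclaimer=False)
print(allocation(with_disclaimer))   # strict liability
print(allocation(with_safe_harbor))  # safe harbor
```

The disclaimer flag is carried in the data but never consulted on the consumer-facing branch, which mirrors the claim that disclaimer defenses fail where users lack avoidance capacity.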

C. Registered Predictions

Five predictions carry explicit timelines and falsification conditions. Failure of a majority would require framework revision.

Registered predictions separate foresight from narrative by specifying conditions under which the framework fails.

Predictable rules emerge once efficiency, behavior, and architectural control align. Explicit predictions anchor the framework to observable outcomes within defined time horizons.

VI. Conclusion

Coordination failure explains why disputes emerged. Incentive exploitation explains why disputes persist. Behavioral incapacity explains where liability must land. AI copyright outcomes will converge on upstream allocation regardless of doctrinal entry point because downstream liability cannot produce deterrence or learning.

Foundation model developers (OpenAI, Anthropic, Meta) and platform integrators (Microsoft, Google) control the scalable prevention surfaces: retrieval design, excerpt throttling, attribution systems, logging, and recall. Courts, regulators, and insurers will converge on that architectural fact and assign responsibility accordingly.

The question is no longer whether liability shifts upstream, but how quickly, through which mechanisms, and at what transition cost.

Appendix: Methodology and Limitations

Core Metrics

Causal Signal Integrity (CSI): Composite score evaluating whether a causal chain is load-bearing. Calculated as CSI = (ALI + CMF + RIS) / DoC², where Action–Language Integrity (ALI) measures alignment between stated rationale and observed conduct, Cognitive–Motor Fidelity (CMF) measures reliability of behavioral prediction, Resonance Integrity Score (RIS) measures consistency across repeated observations, and Degree of Confounding (DoC) measures the strength of alternative explanations.

Control Surface Area: Measures the scope of architectural control an actor exercises over harm-producing systems.

Warn-and-Shift Failure Index: Measures the probability that disclaimer-based risk shifting will fail to produce behavioral adjustment.
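Of the three metrics, only CSI is given an explicit formula, so it is the only one that can be sketched directly. The function below implements that formula as written. The input scales are not specified in this appendix, so the example values are arbitrary placeholders, and the requirement that DoC be positive is an added assumption to avoid division by zero.

```python
def causal_signal_integrity(ali: float, cmf: float, ris: float, doc: float) -> float:
    """CSI = (ALI + CMF + RIS) / DoC**2, as defined in Core Metrics."""
    if doc <= 0:
        # Assumption: DoC must be strictly positive for the ratio to be defined.
        raise ValueError("DoC must be positive")
    return (ali + cmf + ris) / doc ** 2


# Arbitrary placeholder inputs, chosen only to show how the score behaves.
baseline = causal_signal_integrity(1.0, 1.0, 1.0, doc=1.0)
confounded = causal_signal_integrity(1.0, 1.0, 1.0, doc=2.0)
print(baseline)    # 3.0
print(confounded)  # 0.75
```

Because DoC enters squared, doubling the degree of confounding cuts the score to a quarter, so confounding is penalized more heavily than any single integrity component is rewarded.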

Limitations

Predictions are time-bound and falsifiable; failure of registered predictions would require framework revision. Consumer–enterprise distinctions affect scope rather than direction of liability migration. Hand formula estimates are order-of-magnitude illustrations; the directional conclusion survives wide parameter variation.