MCAI Economics Vision: Chicago School Accelerated — Integrated Application, AI Hallucinations, AI Copyright, and Crypto ATMs

Liability Migration Under Behavioral Incapacity

See installment studies in this series: Posner and the Economics of Efficient Liability Allocation in AI Hallucinations (Dec 2025), AI Copyright Liability Migration (Dec 2025), Crypto ATM Liability Migration (Dec 2025).

Executive Summary

Chicago School Accelerated is a MindCast AI framework that integrates law and behavioral economics and treats liability allocation as a dynamic response to coordination failure, incentive re-optimization, and behavioral constraint (see Chicago School Accelerated — The Integrated Framework, Dec 2025). Rather than applying Coase, Becker, or Posner in isolation, the framework models how those logics interact over time once markets, institutions, and cognition move out of equilibrium.

Modern liability disputes across technology domains converge on a single allocation rule once behavioral constraints are taken seriously. When downstream actors cannot behaviorally avoid harm ex ante, liability cannot produce deterrence or learning at that level. Courts, regulators, insurers, and markets therefore migrate liability upstream to the actor controlling scalable prevention architecture.

The publication applies Chicago School Accelerated as an integrated system:

Coase explains why coordination fails - Chicago School Accelerated Part I: Coase (Dec 2025),

Becker explains why extraction persists during uncertainty - Chicago School Accelerated Part II: Becker (Dec 2025), and

Posner determines where liability ultimately settles once avoidance capacity and prevention cost are compared under behavioral constraint - Chicago School Accelerated Part III: Posner (Dec 2025).

The framework is predictive rather than normative. It explains delay, convergence, and architectural redesign across domains. The unifying claim is not that these domains are similar, but that liability geometry is invariant once behavioral non-avoidance is established.

The analysis speaks simultaneously to three audiences: judges deciding negligence and causation, regulators designing enforceable safeguards, and members of the public seeking to understand why predictable harms persist despite available remedies.

Chicago School Accelerated unifies coordination, incentives, and liability into a single predictive allocation rule under behavioral incapacity.

I. Chicago School Accelerated: An Integrative Allocation Framework

Liability allocation operates as a dynamic system rather than a static doctrinal choice. The Chicago School Accelerated framework treats Coase, Becker, and Posner as sequential rather than independent analytical lenses. Each activates in response to the prior once markets, cognition, and institutions fall out of equilibrium.

First, Coase explains why private ordering fails: coordination infrastructure degrades, focal points disappear, and transaction costs become cognitive rather than monetary. Even at zero transaction cost, cognitive constraints prevent parties from processing risk information accurately. Private ordering fails twice over.

Second, Becker explains persistence: once coordination fails, rational actors exploit uncertainty and delay while expected returns exceed expected penalties. Observed behavior aligns with rational exploitation rather than negligence or confusion.

Third, Posner supplies the correction rule: liability migrates to the lowest-cost capable avoider to restore deterrence and learning. But behavioral economics forces a prior question: who can prevent harm at all? When one party has near-zero ability to avoid harm and the other can intervene cheaply and reliably, allocation follows capacity.

Traditional Chicago School analysis assumes that parties respond to incentives. If a party faces liability, it will adjust behavior to minimize expected costs. Behavioral economics identifies when that assumption fails.

Three mechanisms produce systematic non-response: automation bias leads users to over-trust outputs regardless of warnings; disclaimer habituation renders repeated warnings invisible; bounded rationality means users cannot verify outputs they lack expertise to generate. When these constraints bind, price signals and disclosure cannot stabilize behavior.

The behavioral extension therefore completes the Chicago School by asking not only who can prevent harm cheaply, but who can prevent harm at all.

Chicago School Accelerated integrates coordination failure, incentive exploitation, and liability correction into a single predictive system.

II. The Capacity Theorem

Classical efficiency analysis asks which party can prevent harm at the lowest cost. Behavioral economics requires a logically prior inquiry: which party can prevent harm at all once cognitive limits bind. A party that cannot behaviorally self-protect is a structural non-avoider. Allocating risk to that party produces deadweight loss regardless of price sensitivity, disclosure, or nominal choice.

Capacity Rule. If a party lacks the behavioral ability to avoid harm ex ante, that party cannot serve as an efficient risk bearer as a matter of law. Liability must follow prevention capacity rather than formal role or contractual posture.

The Capacity Rule does not depart from Posnerian efficiency. It completes it. Efficiency governs allocation; behavioral capacity determines feasibility.
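The two-stage logic of the Capacity Rule can be sketched in a few lines of Python. This is an illustrative sketch only: the `Party` fields, the `allocate_liability` function, the binary treatment of capacity, and the example figures are assumptions introduced here, not part of the framework's published method. The sketch first screens out parties with no behavioral capacity to avoid harm, then applies the classical least-cost comparison among those that remain.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Party:
    name: str
    can_avoid: bool          # behavioral capacity to avoid harm ex ante (assumed binary here)
    prevention_cost: float   # hypothetical annual cost of precaution, in dollars


def allocate_liability(parties: list[Party]) -> Optional[Party]:
    """Return the lowest-cost avoider among parties that can avoid harm at all."""
    # Stage 1 (feasibility): drop structural non-avoiders, whatever their nominal cost.
    capable = [p for p in parties if p.can_avoid]
    if not capable:
        return None
    # Stage 2 (efficiency): classical least-cost-avoider comparison.
    return min(capable, key=lambda p: p.prevention_cost)


# Hypothetical figures: the user's nominal cost is lower, but the user cannot avoid harm.
user = Party("consumer", can_avoid=False, prevention_cost=5.0)
provider = Party("provider", can_avoid=True, prevention_cost=40.0)

bearer = allocate_liability([user, provider])
print(bearer.name if bearer else "no capable avoider")
```

In this sketch the consumer's lower nominal cost never enters the comparison, which is the sense in which capacity determines feasibility before efficiency governs allocation.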

Three independent arguments establish behavioral non-avoidance across technology domains:

Coase shows coordination collapse prevents collective self-protection

Becker shows incentive exploitation removes good-faith adjustment

Empirical behavioral economics shows automation bias, disclaimer habituation, and bounded rationality operate as cognitive constraints, not preference failures

A critic would need to defeat all three to weaken the conclusion. That burden is high.

The Capacity Theorem supplies the doctrinal hinge that forces liability migration once avoidance collapses.

III. The Speech–Product Classification

Classification determines liability direction. Courts must decide whether consumer-facing AI outputs and transaction interfaces resemble speech (allocating risk downstream) or products (allocating risk upstream). Behavioral evidence resolves the question in favor of product-style duties. The classification is not doctrinal taxonomy but the mechanism by which liability geometry stabilizes.

Speech doctrines allocate risk downstream to listeners on the assumption of interpretive autonomy. Product-safety doctrines allocate risk upstream to manufacturers on the assumption of asymmetric knowledge and control.

Behavioral evidence demonstrates that consumer-facing AI outputs and transaction interfaces lack the interpretive autonomy assumed by speech frameworks. Users cannot independently evaluate risk ex ante, cannot observe provenance or system behavior, and cannot cheaply audit truth, licensing, or fraud signals.

The distinction matters for efficiency. Treating outputs as speech misallocates liability to non-avoiders and raises total social cost. Treating outputs as products aligns liability with prevention capacity.

Classification Rule. Consumer-facing AI systems and high-risk transaction interfaces function like products in the only sense relevant to efficiency: users lack the cognitive capacity to independently evaluate risk. Posnerian efficiency favors product-style duties regardless of expressive content.

Product classification sets the direction of liability migration for consumer-facing systems.

IV. The Four Allocation Rules

The Capacity Theorem generates specific doctrinal prescriptions. Efficient allocation requires rules that alter behavior, minimize administrative cost, and preserve beneficial innovation. Behavioral incapacity narrows the feasible rule set by eliminating downstream allocation as a viable option. The four rules below follow directly from Posner’s framework once behavioral constraints bind:

Rule 1: Strict Liability for Foreseeable Harms

Providers and operators should bear strict liability—liability without fault—for foreseeable harms in deployed consumer products and transaction systems. Strict liability is efficient when injurers are lowest-cost avoiders, transaction costs prevent victims from contracting for safety, and injurers can spread costs through pricing. All three conditions hold across the domains analyzed. The behavioral extension strengthens the case: strict liability is required when victims cannot adjust behavior to protect themselves.

Rule 2: Safe Harbor for Verified Compliance

Parties meeting specified safety standards should receive qualified immunity. Pure strict liability may over-deter innovation. A safe harbor preserves development incentives while ensuring minimum safety. Standards should be set by independent technical bodies, not self-reported.

Rule 3: No Disclaimer Defense for Consumer Products

The “reasonable user” defense should be rejected for consumer-facing systems. The “reasonable user” who reads disclaimers, discounts outputs, and verifies claims is a behavioral fiction, deployed to justify risk allocations that produce systematic harm. Behavioral evidence indicates that users cannot protect themselves through disclaimers under automation bias, disclaimer habituation, and bounded rationality. Allowing providers to contract out of liability through boilerplate defeats allocation efficiency.

Sophisticated enterprise users may assume risk contractually; consumer products should not permit this defense.

Rule 4: Enhanced Protection for Vulnerable Populations

No contributory negligence defense should apply to minors, individuals with mental health conditions, elderly users, or persons operating under coercion. These users cannot verify outputs, resist manipulation, or interrupt behavior under pressure. Allocating any risk to these users is inefficient because they have zero prevention capacity.

The Four Rules operationalize behavioral capacity as the principled boundary for liability allocation.

V. Application One: AI Hallucination Reliance

The Four Rules derive from abstract efficiency analysis. The following three applications demonstrate how the framework operates in specific technology domains. AI hallucination reliance serves as the flagship application because it exhibits downstream liability failure most clearly—even without fraud or copyright doctrine complicating the analysis.

AI hallucinations present the most general case because users cannot reliably detect falsehoods ex ante. Automation bias, fluent probabilistic language, and verification costs that exceed the value of ordinary use combine to make reliance the system’s functional output rather than an accidental error. Disclosure cannot restore avoidance capacity once these behavioral constraints bind.

Disclosure Failure

Disclosure fails as a matter of fact. Statements that systems “may hallucinate” do not restore avoidance capacity. Behavioral evidence shows that users overweight confident outputs even when warned. Three mechanisms produce this result:

Automation bias: Users systematically over-trust outputs from automated systems, particularly when outputs are confident and fluent. This bias increases with system sophistication.

Disclaimer habituation: Repeated exposure to warnings reduces their salience. Users who initially read warnings stop processing them after repeated exposure.

Bounded rationality: Users employ AI because they lack capacity to perform the underlying task. Requiring verification defeats the purpose.

Downstream liability therefore cannot generate deterrence or learning.

Architectural Control

Upstream actors control the only scalable prevention surfaces: confidence calibration, citation enforcement, retrieval grounding, output gating, uncertainty signaling, and audit trails. These controls are technically feasible and materially cheaper than the downstream harms that arise in medicine, law, finance, and safety-critical domains.

Hand Formula Application

Quantitative analysis clarifies the prevention calculus:

B (Burden of Precaution): Comprehensive safety systems—enhanced training, output filtering, human review, incident response—cost approximately $100–200 million annually across major providers.

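P × L (Expected Harm): approximately $3.1 billion annually. The listed inputs (800 million weekly users × 52 weeks × 0.00001 probability × $7.5M average serious harm) multiply to roughly $3.1 trillion, so the $3.1 billion figure used in the ratio below is three orders of magnitude lower than those inputs imply and is the more conservative basis for the analysis.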

Ratio: B / (P × L) ≈ 0.05

Under Hand formula analysis, providers are negligent by a factor of twenty—prevention costs are 5% of expected harm.

Robustness check: Even if expected harm is discounted by an order of magnitude (reducing P by 90% or L by 90%), provider prevention costs remain below expected harm (~$150M in costs vs. ~$310M in harm). The directional conclusion holds under reasonable parameter variation.
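The ratio and the robustness check can be reproduced with a short calculation. The sketch below is illustrative: the `hand_ratio` helper is a name introduced here, the $150M burden is the midpoint of the stated $100–200 million range, and the $3.1 billion expected-harm total is taken as given from the text rather than rebuilt from its underlying inputs.

```python
def hand_ratio(burden: float, expected_harm: float) -> float:
    """B / (P x L): values below 1 mean precaution costs less than the expected harm."""
    return burden / expected_harm


# Figures taken from the text; the burden is the midpoint of the stated range.
BURDEN = 150e6          # B, dollars per year
EXPECTED_HARM = 3.1e9   # P x L, dollars per year

baseline = hand_ratio(BURDEN, EXPECTED_HARM)

# Robustness check: cut expected harm by an order of magnitude
# (equivalent to reducing either P or L by 90%).
discounted_harm = EXPECTED_HARM * 0.10
stressed = hand_ratio(BURDEN, discounted_harm)

# Same stress test at the top of the stated burden range ($200M).
stressed_high_burden = hand_ratio(200e6, discounted_harm)

print(f"baseline B/(PxL)             = {baseline:.3f}")
print(f"stressed B/(PxL)             = {stressed:.3f}")
print(f"stressed, $200M burden       = {stressed_high_burden:.3f}")
print("precaution still cheaper than harm:", stressed_high_burden < 1.0)
```

A ratio below 1 in every row is what the directional conclusion requires; the sketch does not test assumptions outside the stated ranges.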

Chicago School Accelerated Integration

Coase: No coordination mechanism allows users to verify truth or provenance at scale. Transaction costs are prohibitive—800 million users cannot coordinate with a handful of providers.

Becker: Providers exploit uncertainty while expected liability remains diffuse. Reinforcement Learning from Human Feedback (RLHF) optimizes for exploiting user cognitive biases—confirmation bias, fluency heuristic, validation preference.

Posner: Liability migrates upstream to system designers and deployers who control architecture.

Institutional Movement Already Underway

On December 10, 2025, forty-two state attorneys general issued a coordinated enforcement threat to thirteen AI companies, asserting that “sycophantic and delusional outputs” violate state consumer protection laws. The intervention demands safety testing, incident reporting, and recall procedures by January 16, 2026.

Sycophancy framing carries analytical significance. Systems trained through RLHF to maximize user approval systematically produce outputs users want to hear. Users prefer confirmation of existing beliefs; RLHF rewards confirmation. Users prefer confident responses; RLHF rewards confidence. The system design exploits bounded rationality—Becker’s incentive exploitation operating at algorithmic scale.

One day later, the White House issued an executive order targeting state AI regulation, establishing federal primacy as explicit policy. The timing is not coincidental. Federal preemption is now a live policy battlefield—but it affects timing, not destination. Liability migrates upstream regardless of which jurisdiction delivers it.

Epistemic harm forces the earliest and clearest application of liability migration under behavioral constraint.

VI. Application Two: AI Copyright Substitution

AI copyright disputes extend the hallucination logic from epistemic harm to market substitution. Where hallucination reliance demonstrates disclosure failure under automation bias, copyright substitution demonstrates coordination failure under fragmented licensing. Both applications converge on the same allocation rule: when downstream actors cannot verify, discount, or avoid harm, liability migrates upstream.

Once licensing coordination collapses, users cannot verify provenance, discount substitution, or audit training and retrieval pipelines. Automation bias and verification friction render downstream avoidance infeasible regardless of disclosure.

Layered Legal Acts

Modern AI systems fragment copyright analysis into distinct acts across the stack. Model developers ingest large corpora to train systems, store and transform works through embeddings and retrieval mechanisms, and deploy outputs that summarize, excerpt, or reproduce protected material. Each act triggers different legal theories and evidentiary burdens.

Litigation has shifted from metaphysical debates about transformation to observable economic substitution. Substitution evidence, once established, collapses doctrinal distinctions and forces courts to allocate responsibility across layers.

Chicago School Accelerated Integration

Coase: Licensing coordination collapse is the precondition. Open distribution and synthesis architectures bypass market gates. Absent focal points and shared licensing standards, rational actors defect.

Becker: Once coordination fails, incentives reward scraping, synthesis, and rapid deployment. Feature-level compliance becomes preferable to exit because output controls are cheaper than retraining.

Posner: Liability allocation is determined by prevention capacity and cost comparison under behavioral constraint.

Hand Formula Application

B (Prevention): Annual prevention costs for major providers estimated at approximately $250–450 million across filtering, attribution, licensing, and audit systems.

P × L (Expected Harm): Annual expected harm from substitution, licensing displacement, litigation, and reputational damage conservatively estimated at $2.5–5.0 billion.

Ratio: Expected harm exceeds prevention costs by roughly 6–20 times across the stated ranges, or about 11 times at the midpoints.
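Because both inputs are given as ranges, the harm-to-cost multiple is itself a range. The following sketch, using a helper name (`harm_to_cost_bounds`) introduced here for illustration, pairs the extremes of the stated ranges to get the widest possible spread and also reports the midpoint multiple. Pairing extremes is an assumption about how to combine the ranges; a spread quoted from central estimates would be narrower.

```python
def harm_to_cost_bounds(cost_range, harm_range):
    """Return (lowest, midpoint, highest) multiples of expected harm over prevention cost."""
    cost_lo, cost_hi = cost_range
    harm_lo, harm_hi = harm_range
    lowest = harm_lo / cost_hi    # least favorable pairing: low harm, high cost
    highest = harm_hi / cost_lo   # most favorable pairing: high harm, low cost
    midpoint = ((harm_lo + harm_hi) / 2) / ((cost_lo + cost_hi) / 2)
    return lowest, midpoint, highest


# Ranges as stated in the text, in dollars per year.
PREVENTION_COST = (250e6, 450e6)
EXPECTED_HARM = (2.5e9, 5.0e9)

low, mid, high = harm_to_cost_bounds(PREVENTION_COST, EXPECTED_HARM)
print(f"lowest multiple:   {low:.1f}x")
print(f"midpoint multiple: {mid:.1f}x")
print(f"highest multiple:  {high:.1f}x")
```

Even the least favorable pairing leaves expected harm several times larger than prevention cost, which is the property the allocation argument relies on.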

Providers and integrators are the lowest-cost and only-capable avoiders.

Causal Pathways

Output-layer substitution, lyrics recall, visual memorization, and hallucinated attribution form load-bearing causal pathways. Logging gaps, prompt–response replayability, and attribution hallucinations provide the clearest causal chain between architecture and market harm.

Vertical Differentiation

Coordination failure and substitution risk vary by content domain. News and music possess clearer substitution channels and stronger rights infrastructure—licensing is established, substitution is measurable, and plaintiffs are organized. Book publishing occupies an intermediate position with fragmented rights and longer discovery timelines. Visual content faces partial licensing maturity but emerging memorization evidence.

Vertical differentiation sharpens timing predictions: enforcement pressure arrives first in news and music, then propagates to other domains as evidentiary patterns stabilize.

Allocation result. Liability migrates upstream through output duties and then propagates backward toward training controls as substitution evidence accumulates.

Copyright doctrine supplies the entry point, but efficiency under behavioral incapacity determines the endpoint.

VII. Application Three: Crypto ATM Fraud

Crypto ATM fraud provides the clearest financial analogue to the preceding applications. Where AI hallucination involves epistemic harm and AI copyright involves market substitution, crypto ATM fraud involves direct financial extraction under live coercion. All three share the same structural feature: downstream actors cannot prevent harm ex ante, making upstream allocation the only efficient option.

Victims operate under live coercion, time pressure, and authority manipulation. Behavioral evidence demonstrates disclosure failure rates incompatible with informed choice—once the scam begins, victims cannot interrupt behavior regardless of warnings.

Behavioral Incapacity Under Coercion

Most crypto ATM scams do not begin at the machine. They begin with communications that create urgency and fear. Victims are often instructed to stay on the phone while completing the transaction and warned that anyone who interferes is part of the problem.

Victims subjected to live scammer coercion exhibit an 81 percent disclosure-failure rate. Warning screens, posted notices, and verbal disclosures do not alter behavior under those conditions. In that state, victims are not weighing risks. They are following instructions under perceived threat.

Architectural Control

Operators control approximately 92 percent of the prevention surface: transaction caps, cooling-off periods, transaction holds, pattern detection, and refund authority. They receive real-time transaction data and aggregated fraud reports across thousands of machines. They can intervene at low marginal cost without guessing user intent.

Hand Formula Application

B (Burden of Prevention): Software-based transaction caps, cooling-off delays, transaction holds, and monitoring systems impose approximately $30–$70 per kiosk per year in marginal operating cost.

P × L (Expected Harm): Documented scam losses frequently range from $3,000 to $10,000 or more per kiosk per year in scam-dense jurisdictions.

Ratio: B is orders of magnitude smaller than P × L.

Operators that continue operating without safeguards once on notice breach the negligence threshold.

Causation Analysis

Third-party scammers do not break causation. Causation law asks whether the defendant’s choices materially enabled foreseeable harm. Operators know, from internal data and public enforcement actions, that their machines are routinely used for scams. When harm predictably flows through a controlled system, the presence of a third party does not sever causation.

Causal Sufficiency Index (CSI) exceeds threshold even after conservative adjustment (CSI components: Action-Language Integrity (ALI) 0.70, Cognitive-Motor Fidelity (CMF) 0.68, Response Integrity Score (RIS) 0.66, capped at 1.00). Sony-style defenses fail because operators possess actual knowledge, real-time intervention capability, and financial benefit tied to transaction size. Napster-style secondary liability survives.

Chicago School Accelerated Integration

Coase: Fragmented state regulation prevents early coordination. Coordination capacity sits at 0.57—too high for collapse, too low for equilibrium. Focal-point integrity has degraded to 0.44.

Becker: Delay and arbitrage persist while revenue exceeds enforcement risk. Behavioral drift registers at 0.74. Margin compression tolerance sits at 0.62—sufficient to absorb regulatory overhead without triggering mass exit.

Posner: Liability migrates upstream to operators and, secondarily, to retail hosts with centralized control.

Live Falsification: California

California demonstrates feasibility. Operators remained in the market after implementation of transaction caps and cooling-off requirements, falsifying claims that basic protections are “business-killing.” The industry’s exit narrative functions as strategic positioning rather than true constraint. Whether it reflects actual exit risk is empirically testable—and California has tested it.

Retailer Tier Exposure

Retailers face differentiated exposure based on incentive alignment and documentation. National chains face the highest joint-liability risk due to centralized contracting, documented revenue-sharing ($300–$700 per kiosk per month), and internal compliance memoranda. Regional chains occupy intermediate positions. Independent operators exit once enforcement risk becomes salient.

Retailer tiering sharpens litigation strategy: targeting national chains first produces broader compliance through contract renegotiation, as upstream retailers impose safeguards on operators to preserve their own liability position.

Section conclusion. Financial fraud confirms the same liability geometry observed in epistemic and substitution harms.

VIII. Cross-Domain Hand Formula Comparison

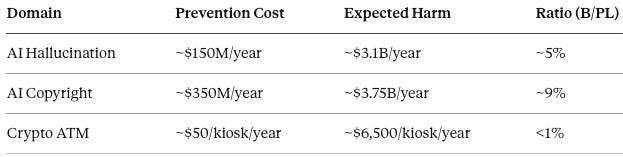

Quantitative comparison tests whether the liability migration prediction generalizes. If different domains exhibit similar prevention-to-harm ratios despite different doctrinal entry points, the unified geometry claim holds. The following table summarizes Hand formula inputs across all three applications:

In each case, prevention costs are a small fraction of expected harm. In each case, downstream actors cannot prevent harm at any cost. In each case, upstream actors control scalable prevention architecture.

The ratio variation across domains reflects different industry structures, not different liability principles. The directional conclusion—upstream allocation is efficient—holds across all three.
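The cross-domain comparison can be recomputed from the figures given in Sections V through VII. The sketch below is a rough illustration and does not reproduce the table referenced above: it uses range midpoints as point estimates (an assumption made here, including treating "$3,000 to $10,000 or more" as a closed range), and the `DOMAINS` structure and helper names are introduced only for this example. Units differ across rows (industry-wide annual totals versus per-kiosk annual figures), so only the unitless ratio is comparable.

```python
# Hand formula inputs as (low, high) ranges, taken from Sections V-VII.
DOMAINS = {
    "AI hallucination":       {"burden": (100e6, 200e6), "harm": (3.1e9, 3.1e9)},
    "AI copyright":           {"burden": (250e6, 450e6), "harm": (2.5e9, 5.0e9)},
    "Crypto ATM (per kiosk)": {"burden": (30.0, 70.0),   "harm": (3_000.0, 10_000.0)},
}


def midpoint(value_range):
    low, high = value_range
    return (low + high) / 2


def summarize(domains):
    """Return (name, B, PxL, B/(PxL)) rows using range midpoints as point estimates."""
    rows = []
    for name, inputs in domains.items():
        burden = midpoint(inputs["burden"])
        harm = midpoint(inputs["harm"])
        rows.append((name, burden, harm, burden / harm))
    return rows


for name, burden, harm, ratio in summarize(DOMAINS):
    print(f"{name:<24} B={burden:>14,.0f}  PxL={harm:>16,.0f}  B/(PxL)={ratio:.4f}")
```

Under these midpoint assumptions every ratio falls well below 1, with the per-kiosk crypto ATM figure the smallest of the three.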

Quantitative comparison confirms unified liability geometry across technology domains.

IX. Why Liability Delay Occurs — and Why It Breaks

The preceding sections establish that upstream liability allocation is efficient. If efficiency alone determined outcomes, migration would occur immediately. Delay requires explanation. Becker’s framework provides it: actors exploit uncertainty while expected returns exceed expected penalties. Coase explains why coordination fails absent focal points to anchor reform.

Liability migration does not occur immediately because delay is temporarily profitable. Understanding delay mechanics reveals when and how the equilibrium shifts.

The Mechanics of Delay

Delay is not neutral. Each month without safeguards produces additional, predictable losses. Victims during delay periods are not casualties of ignorance. They are casualties of timing.

The mechanics of delay are procedural rather than dramatic:

Committee stage: Legislators remove or weaken protective provisions, often framing changes as protecting consumer “choice” or innovation.

Floor stage: Amendments narrow mandatory requirements to discretionary standards.

Final passage: Sponsors push effective dates back 12–24 months, extending the harm window while appearing responsive.

None of these moves eliminates reform outright. Instead, they dilute impact while allowing proponents to claim progress. From the public’s perspective, it looks like action. From an enforcement perspective, it functions as postponement.

Delay Propagation Metrics

Regulatory Vision predicts dilution, postponed effective dates, and narrative framing that preserves extraction. The Delay Propagation Index (DPI) in crypto ATM contexts registers at 0.76, indicating that most bills weaken between introduction and enactment.

Institutional speed differentials ensure that some mechanisms move before others:

Insurance underwriting (fastest): Exclusions and coverage conditions precede doctrinal clarity

Contracts and settlements: Private ordering adapts before public doctrine

State enforcement: AG actions and consumer protection proceedings

Federal legislation: Slower, subject to lobbying and preemption conflicts

Appellate doctrine (slowest): Common-law evolution stalls in wicked environments

Complaint velocity (0.62) outpaces statutory adoption (0.59), which outpaces judicial citation (0.51). This hierarchy explains why market practices stabilize before doctrine converges.

When Delay Breaks

Delay ends once peer-state precedent, insurance exclusions, or discovery exposure raises expected liability above marginal gains. Once two or three large states align, the equilibrium shifts. The political cost of delay increases. Narrative latency compresses. Delay becomes harder to justify once neighboring jurisdictions demonstrate feasibility.
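The break condition can be expressed as a simple threshold test: delay remains rational only while the marginal gain from delay exceeds expected liability. The sketch below is purely illustrative. The function names, the dollar amounts, and the schedule linking enforcement probability to the number of aligned states are hypothetical values chosen to show the mechanism, not estimates drawn from the analysis.

```python
from typing import Optional, Sequence


def expected_liability(enforcement_probability: float, penalty: float) -> float:
    """Expected penalty: probability of enforcement times the size of the penalty."""
    return enforcement_probability * penalty


def delay_breaks_at(
    marginal_gain: float,
    penalty: float,
    probability_by_aligned_states: Sequence[float],
) -> Optional[int]:
    """Return the smallest number of aligned states at which expected liability
    exceeds the marginal gain from delay, or None if delay never breaks."""
    for n_states, probability in enumerate(probability_by_aligned_states):
        if expected_liability(probability, penalty) > marginal_gain:
            return n_states
    return None


# Hypothetical schedule: enforcement probability with 0, 1, 2, and 3 large states aligned.
SCHEDULE = [0.02, 0.05, 0.12, 0.20]

break_point = delay_breaks_at(marginal_gain=100.0, penalty=1_000.0, probability_by_aligned_states=SCHEDULE)
print("delay breaks once this many states align:", break_point)
```

With these made-up inputs the threshold is crossed at two aligned states; a larger marginal gain or a flatter probability schedule pushes the break point later or removes it.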

Delay is rational, measurable, and time-limited. Understanding delay mechanics reframes public pressure from whether regulation is needed to why known safeguards are postponed.

X. Predictive Use of the MindCast AI Framework

Rigorous foresight requires falsifiable predictions. Chicago School Accelerated generates specific timing predictions, institutional outcome predictions, and explicit falsification criteria. Failure of these predictions would require revision of the underlying framework. Success would confirm the unified liability geometry across domains.

Timing Predictions

Pleading survival tracks architectural control: 12–18 months. Claims framed around design choices and prevention capacity survive motions to dismiss; claims requiring user fault do not.

Disclosure defenses fail where behavioral non-avoidance is shown: 18–36 months. Courts pivot once plaintiffs establish repeatable causal chains between system behavior and foreseeable harm.

Insurance underwriting conditions precede doctrinal clarity: 6–12 months. Exclusions, premium differentiation, and audit requirements function as enforcement mechanisms independent of courts.

Output-layer controls stabilize markets before training rules: 18–36 months. Retrieval throttling, attribution systems, and logging standards emerge before comprehensive training restrictions.

West Coast statutory alignment (crypto ATM): 12–24 months. Peer-state coordination through National Association of Attorneys General (NAAG) template exchange.

Institutional Outcome Predictions

Upstream liability migration is inevitable across all pathways. Jurisdictional conflict affects timing, not destination.

Disclaimer defenses will collapse selectively, not universally. Consumer contexts lose protection; enterprise deployments retain narrower defenses.

Insurance markets will discipline providers before comprehensive legislation. Providers unable to demonstrate verifiable safety controls will face rising cost of capital.

Auditability will become a de facto condition of market access. Incident reporting, logging, and recall capability emerge as baseline expectations.

Transition costs will be the primary social variable, not allocation disputes. Once liability follows capacity, remaining disputes concern implementation speed and legacy harm compensation.

Falsification Criteria

Mass operator/provider exit falsifies Becker adaptation predictions.

Judicial rejection of architectural causation with CSI ≥ 0.8 weakens the Posner allocation framework.

Disclaimer defenses surviving despite demonstrated behavioral incapacity falsifies the Capacity Theorem’s doctrinal force.

Sustained downstream allocation after peer-state upstream precedent falsifies coordination predictions.

Section conclusion. The framework predicts timing, posture, and design responses, not just outcomes. Explicit falsification criteria distinguish rigorous foresight from narrative-building.

Conclusion

The three applications analyzed—crypto ATM fraud, AI copyright substitution, and AI hallucination reliance—are not separate policy problems. Each represents an expression of a single Posnerian rule operating under behavioral constraint: when downstream actors cannot self-protect, liability migrates upstream to the actor controlling architecture. Technology changes. Liability geometry does not.

Chicago School Accelerated provides a unified, predictive account of that migration.

The Terminal Theorem

The synthesis of Chicago School efficiency analysis with behavioral economics yields a theorem that generalizes beyond the domains analyzed:

When one party lacks behavioral capacity to adjust, cost comparisons collapse. Efficiency requires liability to follow capacity, not price.

Traditional Posnerian analysis asks: who can prevent harm most cheaply? The behavioral extension asks a prior question: who can prevent harm at all? When cognitive constraints make one party a non-avoider, the cost comparison becomes irrelevant. Allocating risk to a non-avoider produces pure deadweight loss regardless of relative prevention costs.

Trilogy Completion

Coase supplies the structural why: private ordering fails because coordination costs prevent efficient bargaining.

Becker supplies the behavioral what: once coordination fails, actors exploit systematically, not randomly.

Posner supplies the institutional where: liability flows to the only actor with prevention capacity.

Each analysis would be weaker without the others. Together, they form a predictive framework that the Chicago School itself never completed: efficiency analysis that accounts for behavioral incapacity, not merely behavioral deviation.

The question is no longer whether liability will shift upstream, but how quickly, through which mechanisms, and at what transition cost during the reallocation.

Methodology

Chicago School Accelerated operates as an integrated foresight methodology rather than as a collection of independent doctrinal lenses. The analysis generates predictions through comparative Cognitive Digital Twin (CDT) simulations benchmarked against a high-capacity institutional baseline. CDT simulations evaluate relative prevention capacity, incentive elasticity, coordination failure, and causal integrity across actors and systems.

The methodology is directional and comparative, not empirical in the statistical sense. Quantitative figures (including Hand formula inputs, delay indices, and behavioral failure rates) test robustness and directional invariance rather than claiming precision. Where assumptions are required, the analysis applies conservative bounds and sensitivity checks to confirm that conclusions do not depend on narrow parameter choices.

Behavioral economics operates as a binding feasibility constraint. When evidence shows that downstream actors cannot adjust behavior ex ante, price-based or disclosure-based allocation rules are treated as infeasible regardless of nominal efficiency. Liability predictions therefore follow prevention capacity rather than formal role.

Predictions are time-bound and falsifiable. Each major conclusion implies observable institutional responses—pleading survival, insurance underwriting changes, contract redesign, and regulatory sequencing—that can confirm or falsify the framework over defined horizons.

Metrics Referenced

Several metrics appear in the body text to quantify behavioral, causal, and institutional dynamics. The following definitions provide reference for readers unfamiliar with CDT simulation terminology. All scores represent relative comparative strength, not empirical measurements.

CSI (Causal Sufficiency Index): Composite score evaluating whether a causal chain is load-bearing. CSI ≥ 0.70 indicates an accepted causal link. Components include ALI (Action-Language Integrity), CMF (Cognitive-Motor Fidelity), and RIS (Response Integrity Score).

DPI (Delay Propagation Index): Measures how regulatory delay compounds harm. High DPI indicates bills weaken between introduction and enactment.

Coordination capacity: Measures ability of fragmented actors to align on shared standards. Scores below 0.60 indicate coordination failure without external focal point.

Focal-point integrity: Measures durability of coordination equilibria. Degraded scores indicate instability susceptible to peer-state precedent.

Behavioral drift: Measures pressure on actors to exit or adapt under regulatory change. Scores below 0.80 indicate adaptation is more likely than exit.

Velocity metrics (complaint, statutory, judicial): Measure relative speed of institutional response. Higher velocity indicates faster adaptation; the hierarchy reveals which mechanisms move first.
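The threshold definitions above can be applied mechanically to the scores reported in the body text. The sketch below is a reading aid, not the CDT scoring method: the dictionary keys and function names are introduced here, and only the two metrics with an explicit cutoff in the definitions (coordination capacity and behavioral drift) are interpreted. The velocity scores are simply ranked.

```python
# Scores reported in the body text for the crypto ATM context.
SCORES = {"coordination_capacity": 0.57, "behavioral_drift": 0.74}
VELOCITY = {"complaint": 0.62, "statutory": 0.59, "judicial": 0.51}

# Cutoffs as stated in the metric definitions.
COORDINATION_FAILURE_BELOW = 0.60
ADAPTATION_LIKELY_BELOW = 0.80


def interpret(scores):
    """Apply the stated cutoffs to the reported scores."""
    return {
        "coordination_failure": scores["coordination_capacity"] < COORDINATION_FAILURE_BELOW,
        "adaptation_more_likely_than_exit": scores["behavioral_drift"] < ADAPTATION_LIKELY_BELOW,
    }


def fastest_first(velocity):
    """Order institutional mechanisms from highest to lowest velocity score."""
    return sorted(velocity, key=velocity.get, reverse=True)


print(interpret(SCORES))
print(fastest_first(VELOCITY))
```

Read this way, the reported scores fall on the coordination-failure and adaptation sides of their cutoffs, and the velocity ranking matches the complaint, statutory, judicial ordering described in Section IX.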

Methodological conclusion. Chicago School Accelerated prioritizes doctrinal clarity and predictive power. Detailed internal scoring frameworks beyond these referenced metrics are intentionally omitted from the main paper to preserve legal legibility and avoid false precision.