MCAI Investor Vision: AI Investment Institutions and Public Trust

From Archetypes to Credibility, Extending Wealth Management Futures

See also MCAI Investor Vision: Executive Summary of AI Investment Series (Sep 2025) and companion studies: Archetypes in AI Investment, How Wealth Institutions Translate Their DNA Into AI Bets (Sep 2025), Legacy, Institutional Innovation, and the Future of Capital Stewardship; How Wealth Institutions Adapt, Converge, and Preserve Trust Under Stress (Sep 2025), and The Investor Guide to AI Investment Allocation (Sep 2025).

Prologue

A tidal wave of capital is flowing into AI. Global banks, sovereign funds, family offices, and advisory networks are investing billions, even as headlines warn of an AI bubble. The reality is stark: 95% of corporate AI projects fail. Investors must navigate not only hype and volatility, but also the profound question of trust—who among the institutions claiming AI leadership can actually deliver? The prologue sets the stage for why trust, more than scale, determines survival in this era.

This vision statement extends Wealth Management Futures (Sept 2025) from firm-level archetypes to institutional credibility. If the earlier study asked how firms will allocate in an AI-driven future, this companion asks: which institutions can survive the bubble, steward trust, and endure across generations? In doing so, it also connects directly to the theme of Legacy Innovation: how credibility is preserved and compounded across cycles, shaping institutions that endure much like families and societies preserve their legacies.

Contact mcai@mindcast-ai.com to partner with us on AI-driven investment analysis.

I. Why Public Trust Defines Institutional Advantage

Public trust has always been a decisive factor in market stability, but in the AI era it becomes existential. As investors pour capital into complex, opaque technologies, confidence rests less on traditional balance sheets and more on foresight integrity. Understanding why trust functions as survival capital requires looking beyond financial returns to the signals that institutions project and how those signals are received. This section frames trust not as a soft variable but as a measurable asset that shapes long-term advantage.

Trust, not scale, is the ultimate currency of the AI economy. Billions in assets under management mean little if investors lose confidence in foresight quality. Once trust erodes, recovery is rare and costly, because credibility cannot be engineered overnight. A bank may tout its capital reserves, or a sovereign fund its global reach, but neither matters if markets conclude their forecasts are hollow.

Institutions that demonstrate transparency, align narrative with reality, and anchor credibility across time gain structural advantage. Those that cannot will see both investors and markets turn away, regardless of performance history. The advantage of trust compounds across decades, making it a form of capital more enduring than any quarterly return. As noted in Economists on AI (Sept 2025), the wider economic debate on productivity and bubbles underscores why trust is the scarce variable in AI markets.

Robert Shiller has long observed that investors often move on perception as much as fundamentals. Daniel Kahneman and Richard Thaler highlight how behavioral biases shape trust and allocation decisions, while Joseph Stiglitz emphasizes the role of information asymmetry in undermining institutional credibility. Hyman Minsky’s financial instability hypothesis frames why bubbles form and burst, and Kenneth Rogoff and Carmen Reinhart provide historical perspective on how crises erode trust in institutions.

Wealth management firms in particular must balance public perception—which shapes inflows, confidence, and allocation choices—with the underlying market dynamics that ultimately determine outcomes. This tension between perception and reality is precisely where institutional trust is forged, and it sets the stage for how different types of institutions will be tested under AI pressure.

Trust is not a byproduct of performance but the foundation of institutional survival. Firms that integrate foresight integrity into their operations will compound credibility over time, while those that neglect it will find capital flight swift and unforgiving. This section establishes why trust is the decisive axis around which all future institutional advantage will turn.

II. Wealth Management Firms and the AI Buzz Economy

The rise of AI has created a buzz economy—an environment where narrative momentum often outpaces underlying fundamentals. Wealth management firms are caught in the middle, pressured to respond to client enthusiasm for AI-driven opportunities while maintaining professional integrity. This section examines how firms can navigate hype responsibly, safeguarding credibility even when capital flows demand risky bets. The focus here is on balancing short-term appetite with long-term trust.

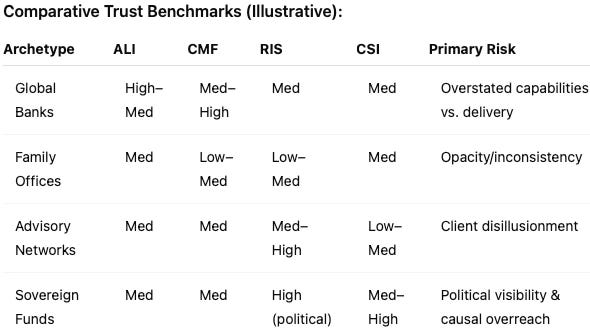

Wealth management firms must treat the AI buzz economy with caution and discipline. They can acknowledge the hype to engage clients, but should filter opportunities through foresight integrity metrics such as Action Language Integrity (ALI), Cognitive Motor Fidelity (CMF), Resonance Integrity Score (RIS), and Causal Signal Integrity (CSI). Rather than chasing every AI announcement, firms build credibility by positioning themselves as stewards who distinguish durable projects from noise. This approach reassures clients that their advisors neither dismiss AI’s potential nor blindly follow the buzz, but interpret developments responsibly to align perception with long-term market dynamics.

These firms must also manage a difficult paradox: they will attract significant inflows of capital from clients eager to ride the buzz, even when internal analysis shows the underlying opportunities are weak. The challenge is to balance investor appetite for hype-driven exposure with the duty to preserve trust. Mishandling this tension risks reputational damage if clients later discover their advisors knowingly chased hollow opportunities. Firms that set clear standards—explaining why certain buzz investments fail foresight tests—will strengthen credibility, while those that indulge the mania risk eroding it irreparably.

This paradox is a practical manifestation of the perception-versus-fundamentals dilemma introduced in Section I, and it provides the bridge into analyzing how institutional archetypes are tested under AI pressure.

The buzz economy offers short-term rewards but long-term risks. Wealth management firms that hold the line on foresight integrity will differentiate themselves as trusted stewards, while those that give in to hype will undermine the very credibility that sustains their business. The section underscores why cautious engagement with AI narratives is the only sustainable strategy.

III. Institutional Archetypes Under AI Pressure

Institutions differ in scale, structure, and strategy, but all face the same ultimate test: whether they can sustain public trust under AI-driven volatility. This section analyzes four archetypes—global banks, family offices, advisory networks, and sovereign funds—showing how each is uniquely exposed. The purpose is not simply to categorize but to reveal fault lines where credibility may fracture. By examining specific examples, we can see how trust functions differently across the investment landscape.

Global Banks (e.g., Goldman Sachs, BlackRock, JPMorgan): Scale offers immense resources, but foresight integrity, not balance sheet size, will determine survival. Goldman’s heavy AI positioning gives it confidence, yet trust depends on translating strategy into durable outcomes. BlackRock and JPMorgan emphasize AI in risk and trading, but their credibility hinges on proving results beyond narrative. Investors will watch whether their project portfolios beat the 95% failure rate or merely add to it.

Family Offices (e.g., Soros Fund Management, Walton Enterprises): Agility allows them to move quickly into AI opportunities, but absent a collective trust framework, they remain fragile. Soros exemplifies opportunistic scale, while multi-generational offices like Walton’s highlight the need to anchor innovation with credibility across time. The family office model thrives on discretion, but without shared standards of foresight integrity, trust deficits multiply.

Advisory Networks (e.g., Equitable Advisors, Edward Jones): Positioned as mid-tier stewards, their survival depends on transparent AI adoption. Equitable Advisors can use trust-focused AI integration to differentiate, while Edward Jones risks losing relevance if it fails to modernize for a retail client base. For these networks, the challenge is translating abstract AI concepts into client-facing credibility that withstands scrutiny.

Public Funds & Sovereigns (e.g., Norges Bank Investment Management, Temasek, CalPERS): Their legitimacy rests on public trust, not quarterly reports. Norway’s sovereign fund and Singapore’s Temasek have global visibility, but credibility deficits here would destabilize more than portfolios. U.S. pension giants like CalPERS carry political exposure, amplifying the trust premium in every AI bet. The sheer visibility of these funds means a failed AI allocation erodes not only returns but also public legitimacy.

Each archetype demonstrates both unique strengths and distinct vulnerabilities. The common denominator is that trust, once broken, cascades into lasting damage regardless of institutional scale. This section shows that the question is not whether AI will pressure these firms, but how each archetype will withstand the trust test.

IV. Measuring Trust with Predictive Cognitive AI

Investors and institutions alike need a systematic way to separate hype from durable foresight. This section introduces MindCast AI’s trust metrics—Action Language Integrity (ALI), Cognitive Motor Fidelity (CMF), Resonance Integrity Score (RIS), and Causal Signal Integrity (CSI)—as a structural alternative to traditional reporting. By applying these tools across archetypes, institutions can be evaluated on their ability to deliver foresight integrity. The goal is to make trust measurable rather than rhetorical.

Action Language Integrity (ALI): clarity and honesty in institutional signaling.

Cognitive Motor Fidelity (CMF): consistency between stated strategy and execution.

Resonance Integrity Score (RIS): trust alignment across stakeholders and time.

Causal Signal Integrity (CSI): reliability of inferred causal links and forecasts.

Together, these metrics offer a structural alternative to performance reporting. They reveal whether an institution’s foresight is not only precise but also trustworthy, adaptive, and durable.
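As a rough illustration of how the four signals could be held side by side for one institution, the sketch below uses a small Python record. The 0-to-1 scale, the class name TrustSignals, and the weakest() helper are assumptions made for this example; the study does not publish a scale or formula for ALI, CMF, RIS, or CSI, and the numbers are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TrustSignals:
    """Four foresight-integrity signals for one institution.

    Assumption: each signal is a relative score in [0, 1]. The source
    text does not define a scale or a formula for any of them.
    """

    ali: float  # Action Language Integrity
    cmf: float  # Cognitive Motor Fidelity
    ris: float  # Resonance Integrity Score
    csi: float  # Causal Signal Integrity

    def __post_init__(self) -> None:
        # Reject values outside the assumed [0, 1] range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be in [0, 1], got {value}")

    def weakest(self) -> str:
        """Return the name of the lowest-scoring signal."""
        return min(fields(self), key=lambda f: getattr(self, f.name)).name


# Hypothetical values for illustration only; not drawn from any real institution.
example = TrustSignals(ali=0.8, cmf=0.4, ris=0.7, csi=0.6)
print(example.weakest())  # prints "cmf"
```

Keeping the four signals separate, rather than collapsing them into one composite number, matches the way the examples that follow apply a different metric to each archetype.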

For example:

Goldman Sachs’ public communications about its AI strategy can be evaluated through ALI: does the language used reflect clarity and honesty, or marketing gloss?

Family offices can be assessed through CMF: do they consistently execute on the agile strategies they proclaim, or is there a gap between intention and action?

Advisory networks like Equitable Advisors can be tested with RIS: do their clients’ trust levels align with the firm’s stated direction on AI adoption?

Sovereign funds such as Temasek can be measured through CSI: are their causal forecasts about AI-driven growth structurally reliable, or overstated narratives?

BlackRock’s narratives about AI-enhanced risk models can be stress-tested against ALI and CMF: do their communications match execution? CalPERS’ public positioning can be measured by RIS: does it align with stakeholder trust across political cycles?

MindCast AI created Cognitive Digital Twins (CDTs) of these institutions to simulate their strategies under pressure. Running foresight simulations of wealth management firms and scoring them on ALI, CMF, RIS, and CSI lets the analysis benchmark credibility through modeled representations of institutional behavior, not only conceptually.

CDT Foresight Scenarios (5–10 year outlook):

Scenario A — 2026–2030 Correction & Regulation Tightening: Rapid AI revenue disappointments trigger a market correction and stricter disclosure rules. Implications: Global Banks with high ALI and CMF sustain trust; weak-CMF banks face credibility shocks. Family Offices with thin governance see RIS fall as LPs demand transparency. Advisory Networks that translated AI to client outcomes maintain RIS; narrative-heavy shops suffer churn. Sovereign Funds with overstated causal theses see CSI downgraded and face public scrutiny.

Scenario B — 2025–2032 Productivity Lift & Soft-Landing: AI gains arrive gradually; winners compound trust via consistent execution. Implications: Global Banks that kept ALI high (clear roadmap, honest misses) attract strategic mandates. Family Offices with documented CMF (repeatable playbooks) become preferred co-investors. Advisory Networks with measurable client outcomes see RIS rise. Sovereign Funds whose causal chains survived stress tests gain CSI leadership and policy influence.

Note: Scores are relative, derived from CDT stress tests, and intended as directional signals, not ratings.

As explored in MindCast AI InvestorVision Modeling (Sept 2025, www.mindcast-ai.com/p/mindcast-ai-investorvision-modeling), these metrics provide a practical lens for applying foresight integrity tests to real institutional behavior. These tools allow investors to distinguish institutions worth trusting from those merely surfing hype.

Predictive cognitive metrics transform trust from a subjective perception into an auditable standard. When applied across archetypes, they expose both strengths and weaknesses that traditional reporting misses. This section highlights how investors can rely on structured foresight signals rather than market noise.

V. The Public Trust Dilemma

Even when metrics exist, institutions face structural tensions that complicate trust. This section frames those dilemmas as recurring conflicts between transparency and opacity, narrative and reality, and legacy and innovation. These tensions are not peripheral but central to whether institutions can endure. Recognizing them allows investors to interpret institutional behavior through a more critical lens.

Transparency vs. Opacity: Markets reward clear foresight, yet legacy models still prize secrecy. Investors increasingly prefer firms that share the logic of their forecasts rather than hide behind proprietary opacity.

Narrative vs. Reality: Media framing accelerates trust cycles, while 95% of corporate AI projects fail beneath the surface. Institutions that over-promise and under-deliver will lose legitimacy faster than they lose capital. As argued in Realpolitik AI (Sept 2025, www.mindcast-ai.com/p/realpolitikai), the perception of power and narrative control often determines whether institutions can sustain trust in the face of failure.

Legacy vs. Innovation: Institutions that connect past credibility with future commitments through mechanisms like MindCast AI’s Legacy Retrieval Pulse secure multi-generational legitimacy. Those that sever the link between track record and innovation risk hollowing out their own authority.

Institutions that ignore these dilemmas risk systemic obsolescence, while those that confront them transparently can translate foresight into durable trust.

The trust dilemma is not a temporary challenge but a structural condition of the AI economy. Institutions that reconcile these tensions will earn lasting legitimacy, while those that evade them will see their authority erode. This section clarifies why foresight metrics must be paired with institutional courage.

Biggest Risks to Public Trust by Archetype

While dilemmas define the general landscape, each archetype faces its own distinct risks. This subsection breaks those risks out clearly, showing where credibility is most fragile. By doing so, it links foresight metrics to practical vulnerabilities across the investment ecosystem.

Global Banks: Their biggest risk is overstating AI capabilities in public communications. Credibility collapses if bold forecasts are not matched by execution.

Family Offices: Their risk is opacity and inconsistency. Secrecy undermines trust if agility is not backed by coherent and consistent action.

Advisory Networks: Their vulnerability is client disillusionment. If firms like Equitable Advisors appear to push AI narratives without transparent adoption, client trust may erode quickly.

Public Funds & Sovereigns: Their greatest exposure lies in political visibility. Failed AI allocations can erode not only financial returns but also citizen legitimacy, amplifying systemic consequences.

Mini-Case (Anonymized, 2024–2027): Advisory Network “A”

Set-up: Firm A launched an “AI portfolio” with aggressive marketing (ALI high on volume, low on honesty about limits).

Stress: 2026 correction exposed gaps between claims and delivery (CMF weak; product pipelines slipped).

Outcome: Client surveys showed falling confidence (RIS ↓), while back-tests invalidated several causal theses (CSI ↓). After adopting a foresight checklist (clear roadmap, execution gating, causal validation), ALI improved (fewer promises, more disclosure), CMF recovered (quarterly execution sprints), RIS stabilized (transparent client reporting), and CSI rose (third-party validation).
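The mini-case pairs each signal with the step that repaired it, and that pairing can be sketched as a simple lookup. The remediation text below is taken from the case narrative; the function name remediation_plan, the numeric readings, and the rule "flag any signal that fell between two readings" are assumptions for illustration, not MindCast AI's method.

```python
# Remediation step per signal, as described in the mini-case narrative.
REMEDIATION = {
    "ALI": "fewer promises, more disclosure",
    "CMF": "quarterly execution sprints",
    "RIS": "transparent client reporting",
    "CSI": "third-party validation",
}


def remediation_plan(before: dict[str, float], after: dict[str, float]) -> list[str]:
    """List the remediation step for every signal that declined.

    Assumption: a signal "declined" if its later reading is strictly
    lower than its earlier one; the source gives no numeric threshold.
    """
    plan = []
    for metric, step in REMEDIATION.items():
        if after[metric] < before[metric]:
            plan.append(f"{metric}: {step}")
    return plan


# Hypothetical relative readings for Firm A before and after the 2026 correction.
before = {"ALI": 0.5, "CMF": 0.6, "RIS": 0.7, "CSI": 0.6}
after = {"ALI": 0.5, "CMF": 0.4, "RIS": 0.5, "CSI": 0.4}

for line in remediation_plan(before, after):
    print(line)
```

With these made-up readings the plan lists CMF, RIS, and CSI, the three signals the case describes as weakening under stress. ALI is left out only because its hypothetical reading did not change.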

The archetype-specific risks highlight how different institutions can lose credibility in different ways, but the outcome is always the same: erosion of trust. By surfacing these vulnerabilities, investors can apply foresight metrics with greater precision. This subsection reinforces that losing trust is the fastest path to systemic fragility.

VI. Investor Takeaway

Investors ultimately seek clarity about where to place capital, and this section distills the study into a practical signal. The key insight is that trust is not a vague impression but a measurable, structural factor that should guide allocation decisions. This framing makes explicit what earlier sections implied: foresight integrity is capital.

For investors, the question is no longer which firms can forecast but which institutions can be trusted when forecasts collide with reality. In an AI economy defined by bubbles and project failures, capital should follow institutions that prove foresight integrity. Institutions that cannot demonstrate it will struggle to attract and retain capital.

Trust, not assets under management, is the decisive survival capital of the AI era.

As Shiller reminds us, perception drives markets as powerfully as fundamentals. For investors, anchoring allocations to institutions that can manage both perception and market dynamics is no longer optional—it is essential. This echoes the paradox outlined in Section II: firms may attract capital chasing buzz, but only those that manage the tension between hype and foresight will endure.

The investor takeaway is straightforward: demand proof of foresight integrity. Capital placed with institutions that demonstrate trust metrics will compound; capital placed with those chasing hype will dissipate. The logic of allocation in the AI economy is therefore a logic of credibility.