🏈 MindCast AI NFL Vision: Seahawks v. Patriots, Super Bowl LX

Multi-Regime Survivability vs. Single-Gear Compression

See also 🏈🤖 MindCast AI NFL Vision: Three AIs Walk Into Super Bowl LX and Each Simulation Thinks It Knows the Ending, Seahawks vs. Patriots AI Simulation Comparison: MindCast AI, Madden NFL 26, Sportsbook Review, Super Bowl LX — AI Simulation vs. Reality , MindCast AI NFL 2025-2026 Season Validation (forthcoming).

MindCast AI is a law and behavioral economics foresight simulation firm. Our NFL analyses use structural, cognitive, and game-theory language rather than Vegas-style shorthand. That language reflects the behavioral economics foundation of our AI simulation.

Live update: An updated simulation will be posted in the comments after each quarter, recalibrating probability bands based on observed thresholds and game trajectory.

Icon Key

🧱 = structural (roster, scheme, geometry) | ⏱️ = timing (tempo, clock, sequencing) | ⚙️ = cognitive (QB processing, decision load)

⚖️ = market vs. foresight divergence | 🔄 = branch collapse | ❌ = falsification trigger

I. 📋 Executive Summary 🧱 ⏱️

Seattle and New England arrive at Super Bowl LX with identical 5–1 records against shared opponents. That surface parity conceals a structural divergence: Seattle operates with multi-regime survivability—the capacity to win through expansion or compression depending on game state. New England operates with single-gear compression—a system optimized for variance suppression that shows limited evidence of acceleration grammar under deviation.

The question entering the Super Bowl is not which team is better. It is which control philosophy survives when game state punishes its preference.

The answer depends on three time gates—and whether Seattle can force the game out of New England’s preferred regime before the fourth quarter.

II. 📐 Model Evolution: From Compression to Multi-Regime 🧱

Transparency note: The NFC Championship simulation categorized Seattle as a compression-dominant system—winning by narrowing variance against a Rams offense optimized for tempo. The Rams game falsified this classification.

When the fourth quarter destabilized, Seattle did not retreat into compression. They initiated expansion—pressing tempo, attacking space, accepting volatility. The 31 points reflected a system that chose to widen rather than narrow. That choice, under playoff stress, revealed a capability the prior model did not weight correctly.

The Super Bowl prediction abandons the Compression Advantage thesis and adopts a new baseline: Multi-Regime Survivability. Seattle is not an expansion team or a compression team. Seattle is a team that can operate in either mode without system breakdown. That adaptability, not a single-gear identity, is the structural edge entering the Super Bowl.

New England, by contrast, has not demonstrated equivalent flexibility. The Patriots compress. When compression fails, evidence of acceleration grammar is limited. That asymmetry defines the prediction space.

III. ⚔️ Identity Collision: Expansion vs. Compression 🧱 ⚙️

The matchup is a clash of game-state identities rather than talent or scheme. Each team is optimized for a different control surface, and one identity tends to dominate once stress accumulates.

🦅 Seattle’s Preferred World

More possessions. Drives that widen as pressure accumulates. Second-half advantage driven by defensive fatigue. Quarterback as clarity anchor under entropy. But also capable of compression when game state rewards it.

🏴 New England’s Preferred World

Fewer possessions. Drives that shorten as pressure accumulates. Late-game advantage driven by opponent error. Quarterback as efficient manager. Limited evidence of expansion grammar when compression fails.

The game turns on which identity imposes its environment first. If New England locks compression through halftime, their path remains live. If Seattle forces tempo before the fourth quarter, New England’s single-gear system faces a problem it has not solved this season.

MindCast AI builds Cognitive Digital Twins (CDTs) of teams, players, and coaches to simulate how communication, trust, and coordination hold under stress. The simulation integrates behavioral economics to model decision-making under pressure and game theory to capture how each team constrains the other’s options as conditions change.

Instead of assuming static performance, MindCast AI tracks how tempo, clarity, and fatigue reshape behavior in real time. Where traditional analytics describe what already happened, MindCast AI focuses on when structure breaks. It produces dynamic probability bands that shift as pressure accumulates, leverage emerges, or control collapses, offering a forward-looking explanation of how and why games break—not just who wins.

Contact mcai@mindcast-ai.com to partner with us on sports foresight simulations.

See MCAI Football Vision: Betting AI vs. Foresight AI, MindCast AI Comparative Analysis With NFL Models (Sep 2025).

IV. 📊 Why Shared Opponents Matter ⚖️

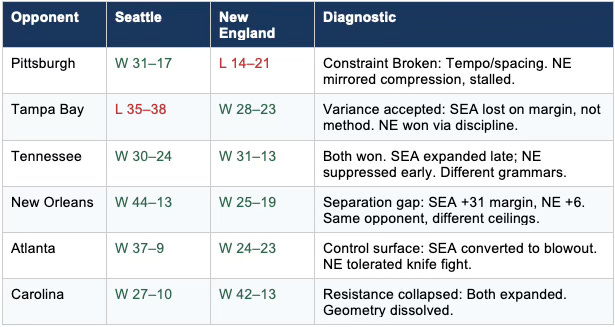

Seattle and New England did not meet during the 2025–26 season, but they encountered the same structural problems in different uniforms. Shared opponents provide a rare comparative lens: identical external constraints applied to two different internal systems.

Across six common opponents, both teams finished 5–1. That parity conceals a deeper truth. Seattle’s victories resolve early and widen. New England’s victories linger and constrict. The difference is not talent—it is how each team defines control when variance spikes.

The table isolates preference under constraint. Seattle solves pressure by enlarging the decision space. New England survives pressure by shrinking it.

New England's games against the same opponents tell the inverse story. Where Seattle accelerated, the Patriots slowed. Where Seattle widened margins, New England narrowed them. Victories over the Saints and Falcons unfolded inside one-score margins. Pace was deliberately constrained. Field position mattered more than explosive gain. The approach does not reflect conservatism born of fear. It is a system optimized for variance suppression. Under Mike Vrabel, the Patriots specialize in making games small. They wait for impatience, procedural errors, or missed assignments. Compression is not a fallback—it is the plan. The Patriots trust that fewer possessions magnify discipline and reduce the advantage of superior spacing or speed.

The question the Super Bowl forces is whether compression is a choice or a ceiling—whether New England can shift gears when game state demands it, or whether the institution has optimized so completely for one mode that no other mode remains available.

V. Cognitive Digital Twin Foresight Simulation

The CDT simulation does not argue from narrative. It interrogates structure. Each Vision Function isolates a different dimension of how teams behave under constraint, then recombines outputs to identify which resolution paths survive stress testing. The simulation below records what the system accepted, what it rejected, and why branch asymmetry favors one outcome over another. This is not prediction by preference. It is prediction by elimination.

Execution baseline: NAIP200 | Gated by: Causal Signal Integrity | Termination: Dual-Equilibrium Architecture

[CAUSATION VISION]

ACCEPTED: Tempo elasticity, Possession density, QB legibility under entropy, Special-teams shock propagation

REJECTED: Super Bowl XLIX narrative (noise), Legacy framing (non-causal), Momentum abstractions (no mechanism)

[FIELD-GEOMETRY REASONING]

OUTPUT: Geometry dominance confirmed. Governing axis = Expansion vs. Compression. Intent-first models suppressed.

[INSTALLED COGNITIVE GRAMMAR]

SEA: Legibility retained under entropy. Expansion viable while trailing. Multi-gear.

NE: Compression-optimized. Acceleration grammar not demonstrated. Single-gear.

[STRATEGIC BEHAVIORAL COGNITIVE]

OUTPUT: Deviation punishes NE more severely. Forced adaptation favors system with optionality (SEA).

[WOLVERINE VISION — SPORTS EXECUTION]

Fatigue mechanism: NE defensive front relies on rotation. If SEA maintains tempo >2.4 plays/minute in Q3, NE compression geometry fails due to lateral fatigue in linebacker corps.

Spacing threshold: SEA horizontal stretch >52 yards forces NE safeties into single-high rotation, opening intermediate windows.

Special-teams coefficient: Single ST error produces >10% win-probability swing. Override condition.
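The three execution thresholds above can be expressed as simple checks. The sketch below is illustrative only and is not MindCast AI's implementation: every field name is hypothetical, and it assumes "plays/minute" means Seattle offensive plays divided by minutes of Seattle possession in Q3, which the text does not specify.

```python
from dataclasses import dataclass

# Threshold values come from the text; every name here is hypothetical.
TEMPO_THRESHOLD = 2.4        # SEA plays per minute in Q3
STRETCH_THRESHOLD = 52.0     # SEA horizontal stretch, in yards
ST_SWING_THRESHOLD = 0.10    # win-probability swing from a single ST error


@dataclass
class ExecutionSnapshot:
    sea_q3_plays: int
    sea_q3_possession_minutes: float  # assumption: tempo is measured over SEA possession time
    sea_horizontal_stretch_yards: float
    largest_st_error_wp_swing: float  # 0.0 if no special-teams error occurred


def execution_flags(s: ExecutionSnapshot) -> dict:
    """Return which of the three stated thresholds have been crossed."""
    if s.sea_q3_possession_minutes > 0:
        tempo = s.sea_q3_plays / s.sea_q3_possession_minutes
    else:
        tempo = 0.0
    return {
        "fatigue_mechanism": tempo > TEMPO_THRESHOLD,
        "spacing_threshold": s.sea_horizontal_stretch_yards > STRETCH_THRESHOLD,
        "st_override": s.largest_st_error_wp_swing > ST_SWING_THRESHOLD,
    }


# Example: 20 plays in 8 minutes of possession, 50-yard stretch, one ST error worth 12% WP.
flags = execution_flags(ExecutionSnapshot(20, 8.0, 50.0, 0.12))
print(flags)
```

Keeping the thresholds as named constants makes it easy to see which numbers are taken from the text and which inputs are assumptions about how they would be measured.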

[MCAI FORESIGHT — BRANCH RESOLUTION]

Branch 1 (Expansion-dominant): SEA high survivability.

Branch 2 (Compression-dominant): NE high survivability.

Branch 3 (Deviation-forced): SEA high survivability.

SYNTHESIS: Branch asymmetry (2:1 SEA) determines outcome more than baseline strength.

The six Vision Functions converge on a single structural finding: Seattle survives in two of three branch types; New England survives in one. That 2:1 asymmetry does not guarantee the outcome. It specifies where the game must go for each team to win. New England’s path requires compression to hold through halftime and into the fourth quarter without a single tempo break. Seattle can operate in compression, but its high-survivability paths run through expansion or forced deviation. The simulation does not favor Seattle because Seattle is better. It favors Seattle because Seattle has more ways to win. Optionality, not dominance, is the structural edge.
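The 2:1 asymmetry is a count of surviving branch types, and a minimal sketch makes that explicit. The branch labels and survivability calls are copied from the simulation record above; the function name is hypothetical, and the count is deliberately unweighted because the text does not assign a probability to each branch.

```python
from collections import Counter

# Branch labels and survivability calls as recorded in the simulation output.
BRANCH_SURVIVOR = {
    "expansion-dominant": "SEA",
    "compression-dominant": "NE",
    "deviation-forced": "SEA",
}


def branch_asymmetry(branches: dict) -> Counter:
    """Count how many branch types each team survives (unweighted)."""
    return Counter(branches.values())


counts = branch_asymmetry(BRANCH_SURVIVOR)
print(f"SEA:NE = {counts['SEA']}:{counts['NE']}")
```

Because the branches are not weighted, the ratio describes how many routes each team has, not how likely each team is to win.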

The framework establishes preference. It does not establish portability. Seattle’s expansion philosophy appears to be a strategic choice—a product of personnel optimization and Darnold’s cognitive evolution. New England’s compression philosophy may be something else: an institutional constraint inherited from roster construction, coaching incentives, and decades of variance-averse culture. If Seattle faces an early deficit, the evidence suggests it can expand further without system breakdown. If New England faces an early deficit, it is unclear whether the Patriots can expand at all. That asymmetry is what the time gates test.

2025-2026 Validation of MindCast AI NFL Foresight Simulation Methodology

MindCast AI evaluates accuracy through structural convergence, not score prediction. Validation occurs when games resolve along the declared control regimes, inflection windows, and falsification triggers specified before kickoff—without post-hoc adjustment.

Across the 2025–26 season, Seahawks foresight simulations consistently identified (i) the dominant control geometry, (ii) the timing window where that geometry would fail, and (iii) the observable conditions under which the model would be falsified. The following cases illustrate that record.

NFC Championship — Seahawks vs. Rams (2026)

The simulation identified early compression followed by late deviation as the decisive pattern, with Seattle advantaged if expansion emerged without quarterback legibility collapse. The game resolved through that declared path: first-half compression, late second-quarter inflection, and third-quarter expansion driven by fatigue and spacing. The outcome matched the foresight branch, not merely the winner.

(See: MCAI NFL Vision: Seahawks vs. Rams, 2026 NFC Conference Championship)

NFC Divisional Round — Seahawks vs. 49ers (2026)

The foresight specified two conditional worlds: San Francisco dominance under sustained compression, or Seattle advantage if deviation forced regime shift. The game resolved inside one of those declared branches, validating the model’s conditional structure rather than a single-point forecast.

(See: MCAI NFL Vision: Seahawks vs. 49ers, 2026 NFC Divisional Round)

Week 18 — Seahawks vs. 49ers (2025)

The simulation treated the matchup as a high-variance, incentive-distorted environment and predicted that late-phase clarity, not explosive dominance, would be the deciding factor. The game resolved through late-game decision integrity despite surface volatility, consistent with the foresight framework.

(See: MCAI NFL Vision: Seahawks vs. 49ers Week 18, 2025)

Late-Season Validation — Panthers, Colts, Rams (Weeks 15–17, 2025)

Across multiple late-season matchups, foresight simulations correctly flagged fatigue propagation, tempo elasticity, and quarterback cognitive load as decisive variables—outperforming static matchup or talent-based narratives.

(See linked prior MCAI NFL Vision publications below)

These cases establish a validated baseline: MindCast AI reliably identifies how Seahawks games break under pressure and when outcomes become structurally determined. The Super Bowl simulation that follows is therefore not speculative narrative—it is a forward test built on an observed track record.

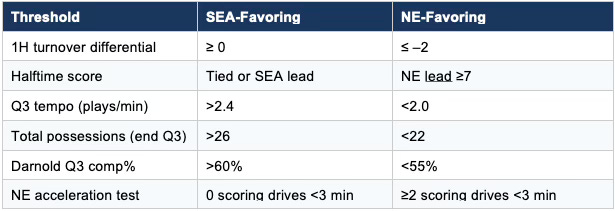

VI. ⏱️ The Game’s Irreversible Moments

The simulation resolves through three time gates. Each gate represents a structural threshold where one system’s advantage either compounds or collapses.

Gate 1: Opening 12 Minutes 🧱

Structural question: Does New England establish compression?

New England’s path requires early compression. If the Patriots hold Seattle to fewer than 10 first-quarter plays and maintain a positive or neutral turnover differential, the game enters their preferred regime. Seattle’s explosive potential is contained by possession scarcity, not defensive dominance.

SEA-favoring: ≥12 offensive plays in Q1, turnover differential ≥0

NE-favoring: <10 SEA plays, turnover, or ST error
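The Gate 1 signals can be read as a three-way classifier. This is a sketch under stated assumptions, not the firm's method: the function and parameter names are hypothetical, turnover differential is taken from Seattle's perspective, the "turnover" signal is read as a negative differential, and any single NE-favoring signal is treated as sufficient.

```python
def gate1_signal(sea_q1_plays: int, sea_turnover_diff: int, sea_st_error: bool) -> str:
    """Classify the first quarter as 'SEA', 'NE', or 'neutral'.

    Assumptions (not stated in the text): turnover differential is from
    Seattle's perspective, and any one NE-favoring signal is enough.
    """
    if sea_q1_plays < 10 or sea_turnover_diff < 0 or sea_st_error:
        return "NE"
    # Turnover differential is already known to be >= 0 at this point.
    if sea_q1_plays >= 12:
        return "SEA"
    return "neutral"  # 10 or 11 plays with no turnover deficit or ST error


print(gate1_signal(13, 0, False))
print(gate1_signal(11, 0, False))
```

The explicit "neutral" result covers the 10 to 11 play range that the two stated signals leave open.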

Gate 2: The Middle Eight ⏱️

Timing question: Does Seattle force tempo before halftime?

The four minutes before and after halftime determine whether the game stays compressed or opens. If Seattle trails by 7 or more entering halftime and has not forced a tempo shift, New England’s variance-suppression system has likely locked in. If Seattle is tied or leading, the game is escaping New England’s preferred geometry.

SEA-favoring: Tied or leading at halftime, any style

NE-favoring: NE lead ≥7 with <22 combined possessions
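A minimal sketch of the Gate 2 signals follows. The names are hypothetical, and the sketch assumes the possession count is the combined total at halftime; a New England lead that misses either NE-favoring condition is returned as "neutral" because the text does not classify it.

```python
def gate2_signal(ne_halftime_lead: int, combined_possessions: int) -> str:
    """Classify the halftime state as 'SEA', 'NE', or 'neutral'.

    ne_halftime_lead is NE points minus SEA points (zero or negative means
    Seattle is tied or leading). Parameter names are hypothetical.
    """
    if ne_halftime_lead <= 0:
        return "SEA"  # tied or leading, any style
    if ne_halftime_lead >= 7 and combined_possessions < 22:
        return "NE"   # compressed lead
    return "neutral"


print(gate2_signal(0, 20))
print(gate2_signal(7, 18))
```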

Gate 3: Early Fourth Quarter ⚙️

Cognitive question: Is Darnold still processing within structure?

Late-game expansion works only if the quarterback remains psychologically permissive—willing to throw the checkdown on 3rd-and-7 and the intermediate dig on 2nd-and-12 without chasing heroics. The Rams game showed Darnold could do that with a season on the line. The Super Bowl tests whether that grammar holds with history watching.

If Seattle enters the fourth quarter within one score and Darnold is still processing within structure, the game resolves through expansion. If Darnold has reverted to hero ball—forcing throws into coverage, abandoning checkdowns—New England’s compression thesis survives.

SEA-favoring: Darnold completion rate >60% in Q3, zero interceptions, checkdown rate maintained

NE-favoring: Darnold completion rate <55%, turnover, or hero-ball reversion (>3 forced throws into coverage)
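The Gate 3 signals can be sketched the same way. Several inputs here are assumptions: "checkdown rate maintained" is reduced to a boolean the charting analyst supplies, "turnover" is read as interceptions plus lost fumbles, and counting "forced throws into coverage" is left to whoever charts the game. All names are hypothetical.

```python
def gate3_signal(
    completions: int,
    attempts: int,
    interceptions: int,
    fumbles_lost: int,
    forced_throws: int,
    checkdown_rate_maintained: bool,
) -> str:
    """Classify Darnold's Q3 processing as 'SEA', 'NE', or 'neutral'."""
    if attempts <= 0:
        raise ValueError("need at least one Q3 attempt to compute a completion rate")
    rate = completions / attempts
    turnovers = interceptions + fumbles_lost
    # Any one NE-favoring signal is treated as sufficient (an assumption).
    if rate < 0.55 or turnovers > 0 or forced_throws > 3:
        return "NE"
    # Zero interceptions is guaranteed here because turnovers == 0.
    if rate > 0.60 and checkdown_rate_maintained:
        return "SEA"
    return "neutral"  # e.g. a completion rate between 55% and 60%


print(gate3_signal(7, 10, 0, 0, 1, True))
```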

The three gates are not sequential predictions. They are structural checkpoints. If Seattle clears Gate 1 and Gate 2, the simulation shifts from conditional to directional. If New England holds both, the game remains in the one regime where compression survives. By the end of the third quarter, the outcome is no longer uncertain—it is structurally determined.
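The checkpoint logic in this paragraph can be summarized in a few lines. The sketch assumes each gate has already been classified as "SEA", "NE", or "neutral" by some upstream step; the state labels and function name are hypothetical.

```python
def model_state(gate1: str, gate2: str) -> str:
    """Map the first two gate results to the simulation's stated posture."""
    if gate1 == "SEA" and gate2 == "SEA":
        return "directional"       # Seattle cleared both gates
    if gate1 == "NE" and gate2 == "NE":
        return "compression-held"  # New England held both gates
    return "conditional"           # split or neutral gates


print(model_state("SEA", "SEA"))
print(model_state("NE", "SEA"))
```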

VII. 🎯 Decision Thresholds 📈 📉 🔍

Foresight does not resolve through aggregate advantage. It resolves through threshold crossings—discrete moments where the game's structural trajectory locks in and cannot be reversed by will or effort alone. The simulation identifies three such gates, each corresponding to a phase where one system's control either compounds or collapses. These are not predictions of what will happen; they are specifications of when the game's outcome becomes structurally determined. Watch the gates, not just the score.

Threshold logic: If Seattle clears Gates 1 and 2, New England’s compression window closes rapidly after halftime.

VIII. ⚠️ New England’s Upset Window 🔄

New England gains advantage only if compression holds through the first half and a halftime lead is secured. The upset window is narrow and front-loaded.

NE wins if:

• Halftime lead ≥7 with <22 combined possessions

• Seattle turnover differential ≤ –1

• Special-teams error produces >10% WP swing

If none of these conditions materialize by halftime, New England’s path narrows to single-digit probability. Compression requires sustained constraint. One tempo break and the game escapes.
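The upset window reads as an any-of check at halftime. The sketch below lists which of the three stated conditions have materialized; names are hypothetical, turnover differential is from Seattle's perspective, and the win-probability swing is assumed to come from an external win-probability model that the text does not describe.

```python
def upset_conditions_met(
    ne_halftime_lead: int,
    combined_possessions: int,
    sea_turnover_diff: int,
    largest_st_wp_swing: float,
) -> list:
    """Return the upset-window conditions that hold at halftime."""
    met = []
    if ne_halftime_lead >= 7 and combined_possessions < 22:
        met.append("compressed halftime lead")
    if sea_turnover_diff <= -1:
        met.append("turnover differential")
    if largest_st_wp_swing > 0.10:
        met.append("special-teams swing")
    return met


# An empty list corresponds to "none of these conditions materialize by halftime".
print(upset_conditions_met(3, 20, 0, 0.04))
print(upset_conditions_met(10, 18, -1, 0.0))
```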

IX. ❌ Falsification Contract

The foresight fails if:

• Seattle loses QB legibility symmetrically (Darnold <50% completion, ≥2 INT)

• New England demonstrates acceleration grammar (≥2 scoring drives under 3 minutes)

• Multiple early turnovers force Seattle to abandon spacing (turnover differential ≤ –2 by halftime)

Halftime falsification check: Model weakens if NE leads ≥10 and SEA has ≤3 pressured dropbacks and NE has produced ≥1 scoring drive under 3 minutes.

Absent these outcomes, the foresight holds.
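A falsification contract is only useful if it can be checked mechanically after the game, so here is a minimal sketch of one way to encode it. All field and function names are hypothetical. It assumes the two parenthetical Darnold conditions must both hold, and it takes "pressured dropbacks" as a charted count without resolving which side of the ball the text intends.

```python
from dataclasses import dataclass


@dataclass
class GameLog:
    # All field names are hypothetical; thresholds below come from the text.
    darnold_completions: int
    darnold_attempts: int
    darnold_interceptions: int
    ne_scoring_drives_under_3_min: int
    sea_halftime_turnover_diff: int  # from Seattle's perspective


def falsification_triggers(log: GameLog) -> list:
    """Return the names of any falsification conditions the game log meets."""
    triggers = []
    # Assumption: both parenthetical conditions (<50% and >=2 INT) must hold.
    if (
        log.darnold_attempts > 0
        and log.darnold_completions / log.darnold_attempts < 0.50
        and log.darnold_interceptions >= 2
    ):
        triggers.append("qb_legibility_lost")
    if log.ne_scoring_drives_under_3_min >= 2:
        triggers.append("ne_acceleration_grammar")
    if log.sea_halftime_turnover_diff <= -2:
        triggers.append("early_turnovers")
    return triggers


def halftime_check_weakens_model(
    ne_lead: int, sea_pressured_dropbacks: int, ne_scoring_drives_under_3_min: int
) -> bool:
    """All three halftime conditions must hold; the text joins them with 'and'."""
    return (
        ne_lead >= 10
        and sea_pressured_dropbacks <= 3
        and ne_scoring_drives_under_3_min >= 1
    )


log = GameLog(18, 30, 1, 2, -1)
print(falsification_triggers(log))
print(halftime_check_weakens_model(10, 3, 1))
```

An empty trigger list corresponds to the case where the foresight holds.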

Structure matters. A system that never updates cannot be accurate. A system that updates without admitting falsification is not predictive. MindCast AI treats adaptation under falsification as evidence of integrity, not weakness. Super Bowl LX therefore functions not as a victory lap, but as a forward-facing test of whether multi-regime survivability remains decisive under maximum institutional pressure.

X. 🔮 Foresight Prediction

The system does not output a score. It outputs resolution conditions.

If Seattle avoids an early turnover differential worse than –1, the game converges toward late separation. The first half compresses under New England’s preferred regime. An inflection point emerges late in the second quarter or early in the third as Seattle forces tempo and spacing. The fourth quarter resolves through separation driven by defensive fatigue and quarterback legibility.

Predicted Outcome: Seattle Seahawks win by late separation.

The prediction resolves through adaptability under deviation. Seattle’s edge derives from multi-regime survivability, not narrative weight. New England’s path requires compression to hold longer than evidence suggests is sustainable under Super Bowl constraints.

XI. 🏁 Final Framing

Vegas prices the median outcome—who is slightly more likely to win across all scenarios. Current lines (SEA –1.5, total 46.5) reflect that median expectation: a coin-flip game decided by late variance. MindCast AI forecasts which system captures the game once stress, noise, and variance are maximized—and identifies the specific threshold crossings where that answer flips.

That conditional resolution is the foresight prediction.

Score band (secondary output): Seattle by 4–10 points. One-score game entering Q4, followed by late separation.

Previous MCAI NFL Vision Publications:

MCAI NFL Vision: Seahawks vs. Rams, 2026 NFC Conference Championship

MCAI NFL Vision: Seahawks vs. 49ers, 2026 NFC Divisional Round

MCAI NFL Vision: Seahawks vs. 49ers Week 18, 2025

MCAI NFL Vision: Seahawks vs. Panthers Week 17, 2025

MCAI NFL Vision: Seahawks vs. Rams, Week 16, 2025

MCAI NFL Vision: Seahawks vs. Colts, Week 15 2025

MCAI Football Vision: Betting AI vs. Foresight AI, MindCast AI Comparative Analysis With NFL Models (Sep 2025)

Comparison of AI football simulations

After the Super Bowl, MindCast AI will publish a review of how the three simulation models covered the game.

🎮 Madden NFL 26 — Re-enactment Engine

Madden is best understood as a simulation of football as entertainment. Player ratings, physics, playbooks, and randomness generate a broadcast-style narrative. That makes it great for visualization and fan engagement, but weak as a predictive tool. Momentum exists as spectacle, not memory; coaching pressure doesn’t accumulate; decision errors aren’t structural. Re-run it and you often get a different story, with no explanation for why.

📊 Sportsbook Review AI — Statistical Forecast

SBR sits in the middle. It’s a probability-driven forecasting model, not a game engine. It aggregates historical performance and produces a coherent, linear game flow with a tight score. That’s genuinely useful for market context and expectation-setting. But time is mostly memoryless. Early stress doesn’t reshape late decisions, and coaching behavior is inferred through stats rather than modeled directly. It answers “what’s likely,” not “why it breaks this way.”

🧠 MindCast AI — Decision-System Simulation

MindCast is doing something categorically different. It models teams as decision-making systems under pressure. The unit of analysis isn’t the player or even the play—it’s how organizations adapt (or fail) when forced off script. Pressure compounds, coordination degrades, and errors propagate. The score is secondary to identifying the failure mode: where the game becomes asymmetric and why one side can’t recover. That makes it less flashy, but far more falsifiable.

🔍 Why similar scores don’t mean similar intelligence

When people say “all the AIs picked Seattle,” that misses the point. Two of these are estimating outcomes. One is explaining causality. Agreement on the scoreboard doesn’t imply agreement on why.

🧾 Bottom line

🎮 Madden shows you a game

📊 SBR estimates a result

🧠 MindCast predicts the mechanism that produces the result

That’s why they shouldn’t be judged on the same scale.

Madden NFL 26 Simulation Comparison

EA Sports released its annual Super Bowl simulation yesterday, predicting Seattle 23, New England 20. That three-point Seattle margin lands one point below the MindCast score band of Seattle by 4–10.

The convergence is interesting but masks a fundamental methodological divergence:

Madden simulates football. Player ratings, physics engines, and stochastic variance generate possession-by-possession outcomes. The engine answers: What happens if we replay this game 10,000 times?

MindCast simulates decision-making under irreversibility. Cognitive Digital Twins model how coaching systems absorb volatility after momentum shocks. The framework answers: Where does the game break, and why?

One treats pressure as noise. The other treats pressure as the governing variable.

Madden’s prediction includes a Walker goal-line rushing touchdown as time expires—narrative redemption for Super Bowl XLIX. Emotionally satisfying, but the engine cannot explain why that play became available. MindCast doesn’t care who scored. The signal is whether New England’s compression geometry had already collapsed by Q3, making the specific outcome downstream noise.

The distinction matters for validation. If the final score lands at 23-20 but Seattle wins through early blowout rather than late separation after a compressed first half, Madden gets credit for outcome accuracy while MindCast’s causal architecture fails. Watch the time gates, not just the scoreboard.
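The difference between outcome accuracy and mechanism accuracy can be made concrete by grading them separately. This is a sketch only: the path labels are hypothetical shorthand for the game flow described above, and exact matching of labels is a simplification of what a real review would need to judge.

```python
def grade_forecast(
    predicted_winner: str,
    predicted_path: tuple,
    actual_winner: str,
    actual_path: tuple,
) -> dict:
    """Grade the winner call and the declared game path independently."""
    return {
        "outcome": predicted_winner == actual_winner,
        "mechanism": tuple(predicted_path) == tuple(actual_path),
    }


# Hypothetical labels: the declared path is a compressed first half, then late separation.
declared = ("compressed first half", "late separation")
blowout = ("early blowout",)

# Seattle wins, but through an early blowout: outcome hit, mechanism miss.
result = grade_forecast("SEA", declared, "SEA", blowout)
print(result)
```

A forecast that names only a winner and a score can be graded on the first key alone, which is why the two kinds of model are not directly comparable.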

Madden’s track record: 5-4 over the past 10 Super Bowls, including the exact score of Super Bowl XLIX. Respectable entertainment-grade forecasting.

MindCast’s track record: Structural convergence across the 2025-26 Seahawks season, validated through declared control regimes and falsification triggers—not post-hoc narrative.

Different categories. Different questions. Same predicted winner, nearly the same margin.