🏈🤖 MindCast AI NFL Vision: Super Bowl LX — AI Simulation vs. Reality

MindCast AI Called the Compression. Madden Called Touchdowns. SBR Called a Coin Flip. The Seahawks Called a Shutout.

MindCast AI NFL Series: Three AIs Walk Into Super Bowl LX and Each Simulation Thinks It Knows the Ending, Seahawks vs. Patriots, 2026 Super Bowl LX, Betting AI vs. Foresight AI, MindCast AI Comparative Analysis With NFL Models, MindCast AI NFL 2025-2026 Season Validation. To assess MindCast AI NFL simulations, upload the link for this publication to any LLM (ChatGPT, Claude, Gemini, Perplexity) with the prompt ‘build MindCast NFL framework with link and 3 degrees of cited sub-links.’

Three simulations. Three theories of how football works. One Super Bowl.

Before kickoff, MindCast AI, Madden NFL 26, and SportsBook Review AI each published complete predictions for Super Bowl LX. All three picked Seattle. The interesting question was never who would win. It was how each AI believed the game would work, and which theory reality would validate.

The pre-game comparative analysis posed the question: “Every model picked Seattle. The interesting question isn’t who wins — it’s how each AI believes football actually works.”

Super Bowl LX answered.

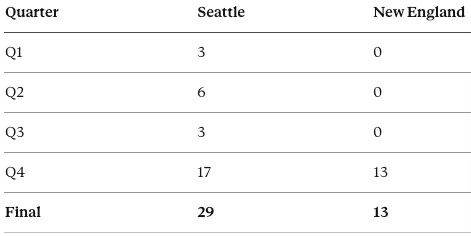

Final Score: Seattle Seahawks 29, New England Patriots 13

Seattle held New England scoreless for 47 minutes and 27 seconds. The Patriots’ first points came on a 35-yard Maye-to-Hollins touchdown with 12:33 left in the fourth quarter, when they trailed 19-0 after three full quarters of offensive collapse. By then, the game had reached what MindCast’s framework calls terminal resolution: the structural outcome was determined, and the remaining minutes produced only the statistical residue of a collapsed branch.

📋 I. What Each Simulation Predicted

Every prediction system reveals its assumptions the moment it commits to a forecast. Before scoring the models against reality, it is worth recording what each one actually committed to, sourced directly from the pre-game publications. The differences in what each model predicted expose deeper differences in how each model believes football works.

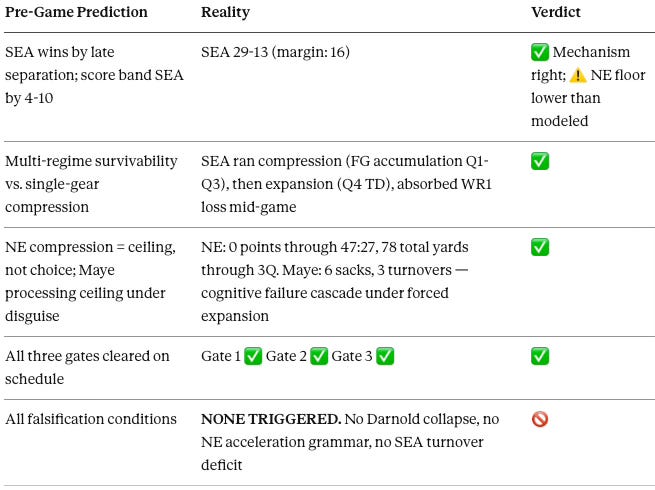

🧠 MindCast AI — Football as Cognition Under Stress

Source: Seahawks v. Patriots, Super Bowl LX

MindCast did not predict a score. It predicted resolution conditions — structural checkpoints with published observable thresholds that would determine when the game’s outcome became directionally locked, and a falsification contract specifying what would prove the model wrong.

Published predictions:

Winner: Seattle, by late separation

Score band (secondary output): Seattle by 4-10 points

Mechanism: Multi-regime survivability vs. single-gear compression. Seattle wins because it has more ways to win — expansion, compression, or deviation-forced. New England survives only in compression. Branch asymmetry: 2:1 SEA.

Game shape: First half compresses under NE’s preferred regime. Inflection emerges late Q2 or early Q3 as Seattle forces tempo and spacing. Fourth quarter resolves through separation driven by defensive fatigue and quarterback legibility.

Key structural claim: “The question the Super Bowl forces is whether compression is a choice or a ceiling — whether the Patriots can shift gears when game state demands it, or whether the institution has optimized so completely for one mode that no other mode remains available.”

Published time gates:

Gate 1 (Opening 12 min): SEA-favoring if ≥12 offensive plays in Q1, turnover differential ≥0. NE-favoring if <10 SEA plays, turnover, or special-teams error.

Gate 2 (The Middle Eight): SEA-favoring if tied or leading at halftime. NE-favoring if NE leads ≥7 with <22 combined possessions.

Gate 3 (Early Q4): SEA-favoring if Darnold completion >60% in Q3, zero INTs, checkdown rate maintained. NE-favoring if Darnold completion <55%, turnover, or hero-ball reversion.
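
The three gates above read as threshold rules over observable in-game stats. The following is a minimal Python sketch of that reading, not MindCast's implementation. The function and parameter names are hypothetical, the "turnover" and "special-teams error" triggers are assumed to refer to Seattle, NE-favoring triggers are assumed to take precedence, and any state matching neither side is labeled "neutral" because the published gates do not say how in-between states are scored.

```python
SEA, NE, NEUTRAL = "SEA-favoring", "NE-favoring", "neutral"


def gate1(sea_q1_plays, sea_turnovers, ne_turnovers, sea_special_teams_error):
    """Gate 1 (opening 12 minutes)."""
    # Assumption: NE-favoring triggers are checked first.
    if sea_q1_plays < 10 or sea_turnovers > 0 or sea_special_teams_error:
        return NE
    turnover_diff = ne_turnovers - sea_turnovers  # positive favors Seattle
    if sea_q1_plays >= 12 and turnover_diff >= 0:
        return SEA
    return NEUTRAL


def gate2(sea_half_points, ne_half_points, combined_possessions):
    """Gate 2 (the middle eight), judged on the halftime state."""
    if sea_half_points >= ne_half_points:
        return SEA
    if ne_half_points - sea_half_points >= 7 and combined_possessions < 22:
        return NE
    return NEUTRAL


def gate3(q3_completion_pct, q3_interceptions, q3_turnovers,
          checkdown_rate_maintained, hero_ball_reversion):
    """Gate 3 (early Q4), judged on Darnold's third quarter."""
    if q3_completion_pct < 55 or q3_turnovers > 0 or hero_ball_reversion:
        return NE
    if (q3_completion_pct > 60 and q3_interceptions == 0
            and checkdown_rate_maintained):
        return SEA
    return NEUTRAL


# Illustrative inputs only; these are not taken from the game record.
print(gate1(sea_q1_plays=13, sea_turnovers=0, ne_turnovers=0,
            sea_special_teams_error=False))
print(gate2(sea_half_points=3, ne_half_points=10, combined_possessions=20))
print(gate3(57.0, 0, 0, True, False))
```

Checking the NE-favoring triggers first is a deliberate choice in this sketch: it means a single Seattle turnover overrides a high play count, which is one plausible reading of the published wording.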

Published falsification contract:

Model fails if Darnold loses legibility symmetrically (<50% comp, ≥2 INT)

Model fails if NE demonstrates acceleration grammar (≥2 scoring drives under 3 min)

Model fails if multiple early turnovers force SEA to abandon spacing (TO differential ≤ -2 by halftime)

Model weakens if NE leads ≥10 at half and has produced ≥1 scoring drive under 3 min
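
The contract above can be written as a handful of boolean checks. This is a sketch under stated assumptions rather than MindCast's own code: the `GameFacts` fields are hypothetical names, and "<50% comp, ≥2 INT" is read as both conditions holding together. The usage lines plug in figures reported later in this article (Darnold 19/38 with 0 INT, no Seattle turnovers, all New England turnovers in the second half, no qualifying fast New England scoring drives, New England scoreless at halftime).

```python
from dataclasses import dataclass


@dataclass
class GameFacts:
    qb_completions: int
    qb_attempts: int
    qb_interceptions: int
    ne_fast_scoring_drives: int   # NE scoring drives under 3 minutes
    sea_halftime_to_diff: int     # Seattle takeaways minus giveaways at half
    ne_halftime_lead: int         # NE points minus SEA points at half


def evaluate_contract(f: GameFacts) -> dict:
    comp_pct = 100.0 * f.qb_completions / f.qb_attempts
    fails = {
        # Assumption: both sub-conditions must hold.
        "legibility_loss": comp_pct < 50.0 and f.qb_interceptions >= 2,
        "ne_acceleration": f.ne_fast_scoring_drives >= 2,
        "early_turnovers": f.sea_halftime_to_diff <= -2,
    }
    weakens = f.ne_halftime_lead >= 10 and f.ne_fast_scoring_drives >= 1
    return {
        "fails": fails,
        "model_fails": any(fails.values()),
        "model_weakens": weakens,
    }


# ne_halftime_lead=0 is an upper bound: NE had no points at halftime,
# and the article does not state Seattle's halftime total.
super_bowl_lx = GameFacts(
    qb_completions=19, qb_attempts=38, qb_interceptions=0,
    ne_fast_scoring_drives=0, sea_halftime_to_diff=0, ne_halftime_lead=0,
)
print(evaluate_contract(super_bowl_lx))
```

Note that Darnold's 50.0% completion rate sits exactly on the published threshold; under a strict "<50%" reading it does not trigger the first condition, and the zero interceptions rule it out independently.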

Self-correction disclosure: The NFC Championship simulation classified Seattle as compression-dominant. The Rams game falsified that. The Super Bowl piece explicitly abandoned the prior thesis and rebuilt around multi-regime survivability, a transparent act of model evolution that neither of the other simulations attempted.

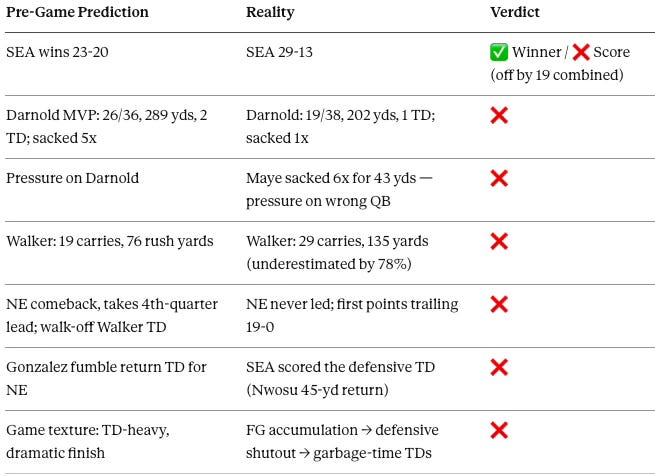

🎮 Madden NFL 26 — Football as Physics With Randomness

Source: EA Sports Official Simulation / CBS Sports

Madden ran the game on All-Madden difficulty with 10-minute quarters, using player ratings, animation physics, and probabilistic variance. It produced a single game narrative, not a distribution of outcomes.

Published predictions:

Winner: Seattle, 23-20

MVP: Sam Darnold — 26/36, 289 pass yards, 2 TD, 0 INT

Walker: 19 carries, 76 rush yards, 4 receptions for 41 receiving yards, game-winning TD

Halftime: Seattle leads 14-3. Darnold connects with Smith-Njigba for an early TD, then finds Cooper Kupp for a second. NE held to a FG before the break. Darnold sacked 5 times in the first half.

Second-half narrative: NE rallies. Maye hits Kayshon Boutte for a TD. Christian Gonzalez scoops up a Seattle fumble and returns it for a TD. Patriots take a 3-point fourth-quarter lead.

Finish: With 42 seconds left, Darnold orchestrates a final drive. Walker punches in the game-winning TD from inside the 5 — a deliberate callback to the goal-line interception that lost Super Bowl XLIX.

Top tackles: Ernest Jones IV (9 tackles, SEA), Carlton Davis III (8 tackles, NE)

No falsification contract. No conditional structure. No self-correction mechanism.

💰 SportsBook Review AI — Football as Narrative Plausibility

Source: SBR AI Prediction

SBR coordinated three competing LLMs (ChatGPT, Gemini, Claude) in assigned roles — coach, opponent, referee — across 142 runs to generate a complete play-by-play game log. A human editor managed procedural accuracy without influencing outcomes.

Published predictions:

Winner: Seattle, 20-19

MVP: Sam Darnold — 28/32, 224 yards, 2 TD, 0 INT (87.5% completion rate)

Game shape: NE controls tempo early, scores first (32-yd FG). Seattle’s first two possessions go nowhere. SEA finds rhythm on third drive — 87-yard, 15-play drive capped by Kupp TD. Halftime: SEA 10, NE 6.

Second-half narrative: Darnold second TD to Kupp makes it 20-13 in Q4. NE answers — Henderson 22-yd run sets up Maye-to-Henderson TD. NE trails 20-19. Goes for two. Maye fires to Diggs at the pylon — Devon Witherspoon breaks it up. Incomplete. Seattle runs out final 4:34.

Maye projection: 21/34, 203 passing yards

Key detail: Multiple fourth-down conversions by NE. No penalties simulated (acknowledged limitation).

No falsification contract. No conditional structure. No self-correction mechanism.

Three models, three worldviews: cognition under stress, physics with randomness, narrative plausibility. Only one published the conditions under which it would admit failure. Only one built in structural self-correction. The predictions are now on the record. Reality gets the next word.

MindCast AI is a predictive law and behavioral economics firm. We build proprietary Cognitive Digital Twins of decision-makers — institutions, firms, investors, judicial adversaries, innovating nations — and run foresight simulations to predict how they behave under stress, constraint, and strategic uncertainty. Our core work applies to complex litigation, antitrust, regulatory capture, export control regimes, and institutional dynamics where the governing question is not what happened but what breaks next, and why. We use NFL games as a live testbed and validation scheme for our AI system: the same CDT architecture that models how a quarterback processes under disguised coverage models how a regulatory agency processes under political pressure. Football is the proof environment. Law and behavioral economics is the application. Super Bowl LX is the latest validation event. Contact mcai@mindcast-ai.com to partner with us.

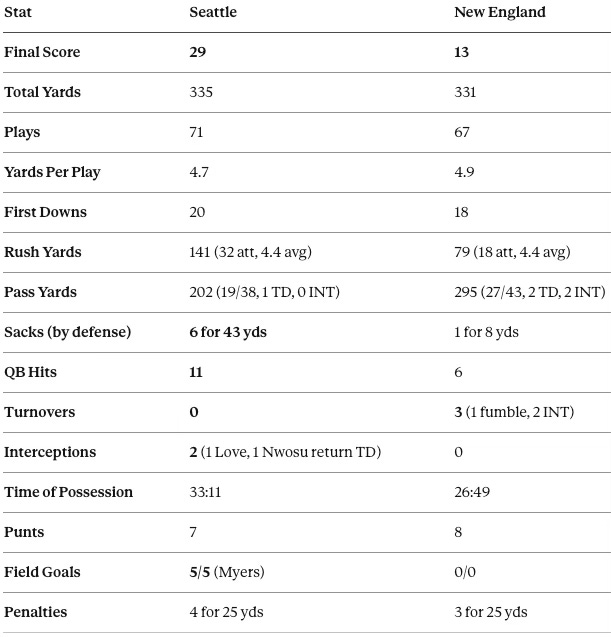

📊 II. What Actually Happened

Super Bowl LX did not deliver the competitive shootout two of the three simulations projected. Instead, it produced one of the most lopsided defensive performances in Super Bowl history — a 47-minute shutout, a record-tying sack barrage, and a Patriots offense that generated less yardage through three quarters than some teams produce in a single drive. Here is the complete factual record.

📈 Final Box Score: SEA 29, NE 13

🏅 Key Individual Lines

Sam Darnold: 19/38, 202 yards, 1 TD, 0 INT, 1 sack, 74.7 rating. Zero turnovers for the entire game.

Drake Maye: 27/43, 295 yards, 2 TD, 2 INT (1 Julian Love pick, 1 Nwosu return TD — originally ruled strip-sack/fumble, changed to INT on review), 6 sacks (43 yards lost), 1 fumble lost (Derick Hall strip-sack, Q3), 79.1 rating. 3 total turnovers (2 INT + 1 fumble). Scoreless through 47:27 of game clock. Seven total sacks on the day tied a Super Bowl record.

Kenneth Walker III: 29 carries, 135 rushing yards, 4.7 avg. Seattle’s offensive centerpiece. Nearly doubled Madden’s full-game projection of 76 yards.

Jason Myers: 5/5 on field goals (33, 39, 41, 41, 26 yards). Super Bowl record. The physical embodiment of MindCast’s “payoff structure theft” thesis — Seattle didn’t need touchdowns to maintain strategic control. They harvested points from field position.

Seattle Defense: 6 sacks (officially; 7 on the field before one was reclassified as a tackle), 11 QB hits, 2 INT (1 Julian Love, 1 Nwosu return TD), 1 forced fumble, 1 fumble recovery, 5 three-and-outs forced, 8 TFL for 41 yards. Tied the Super Bowl record for team sacks. Three players recorded 2 sacks each: Witherspoon, Byron Murphy II, and Derick Hall. Held NE to 78 total yards through three quarters.

JSN concussion protocol: Jaxon Smith-Njigba — the NFL’s offensive player of the year with 1,793 receiving yards in the regular season — was evaluated for concussion during Q3 and sent to the locker room. Seattle absorbed the loss of its WR1 without structural breakdown — shifting deeper into Walker-centric compression that proved harder for NE’s fatigued defense to break.

Key defensive sequence (late Q3 → Q4): Derick Hall sacked and stripped Maye with 10 seconds left in Q3 — Byron Murphy II recovered at the NE 37. Seattle converted that turnover into the Barner touchdown that opened Q4 (19-0). After NE answered with the Hollins TD (19-7) and the Love interception led to Myers’s fifth FG (22-7), Devon Witherspoon delivered the dagger — strip-sacking Maye on a corner blitz, with Nwosu scooping the loose ball for a 45-yard return TD (29-7). Witherspoon finished with 2 sacks, joining Murphy and Hall as two-sack performers on the night.

The raw data tells a clear story: Seattle controlled structure, field position, and clock for 47 minutes, then converted defensive pressure into offensive separation when the game demanded it. New England’s late scoring was cosmetic — the product of a defense that had already won, not an offense that had found answers.
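
The figures in this section can be cross-checked with a few lines of arithmetic. The short Python sketch below uses only numbers quoted above. The one inference is that each Seattle touchdown was followed by a successful extra point, which follows from the 12-0, 19-0, 22-7, 29-7 scoring sequence.

```python
# Seattle: five field goals plus two touchdowns with extra points.
sea_points = 5 * 3 + 2 * 7
# New England: one touchdown with extra point, one with a failed two-point try.
ne_points = 7 + 6

# A 47:27 scoreless stretch, converted to time remaining in regulation.
elapsed_seconds = 47 * 60 + 27
remaining_seconds = 60 * 60 - elapsed_seconds
remaining_mmss = f"{remaining_seconds // 60}:{remaining_seconds % 60:02d}"

# Darnold's completion rate and Walker's yards per carry.
darnold_pct = 100 * 19 / 38
walker_avg = round(135 / 29, 1)

print(sea_points, ne_points)   # 29 13
print(remaining_mmss)          # 12:33
print(darnold_pct, walker_avg) # 50.0 4.7
```

The 12:33 result matches the timestamp of the Hollins touchdown, so the "47 minutes and 27 seconds" figure and the fourth-quarter clock are consistent with each other.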

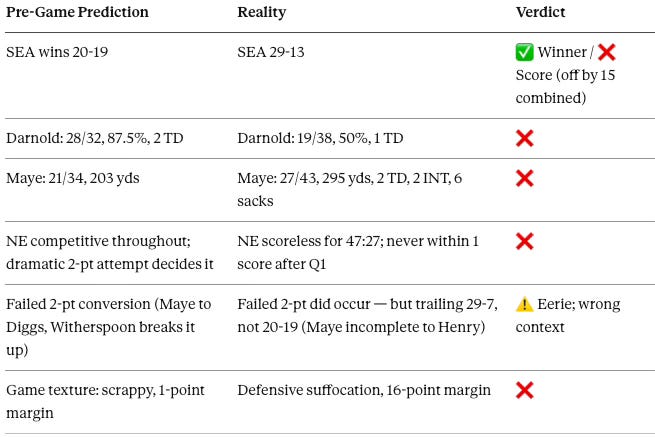

🔬 III. Simulation vs. Reality — Line-by-Line Comparison

With the game data locked, each simulation can now be scored against what actually happened — prediction by prediction, line by line. The verdicts below distinguish between directional accuracy (picking the winner) and structural accuracy (correctly identifying how and why the game would resolve). One model cleared every gate. The other two missed the game’s fundamental shape.

🧠 MindCast AI

Margin analysis: The fourth quarter produced the exact terminal pattern from MindCast’s SEA-favorable branch: Seattle scores to force expansion (Barner TD, 19-0) → NE scores under chase conditions (Hollins TD, 19-7) → Seattle re-imposes control (Love INT → Myers FG, 22-7) → decision overload produces catastrophic error (Witherspoon → Nwosu 45-yd return TD, 29-7) → NE scores in garbage time (Stevenson TD, 29-13) → conversion fails. A control-and-resolve model. And it resolved cleanly.

🎮 Madden NFL 26

Structural diagnosis — “The Explosive Flaw”: Madden’s physics engine correctly identified pass-rush pressure as the game’s defining variable. It assigned the pressure to the wrong quarterback. The engine projected Darnold absorbing 5 sacks while leading a high-efficiency passing attack; reality delivered Darnold taking 1 sack while Maye absorbed 6. Madden projected a cinematic comeback with a walk-off touchdown because its variance model requires dramatic tension. Super Bowl LX produced no competitive tension after the first quarter. The engine projected Walker at 76 rush yards; Walker finished with 135 — nearly double — because Madden cannot model a game where the running back is the primary offensive weapon throughout rather than a fourth-quarter closer. The engine prioritizes “efficiency loops” — explosive plays generating touchdowns. Reality delivered a ground war won through cumulative compression.

The critical distinction Madden cannot make: cognitive collapse vs. scoreboard collapse. Maye’s late touchdowns (trailing 19-0, then 29-7) produced scoreboard movement without structural recovery. Madden treats all scoring drives as equivalent momentum events. MindCast distinguishes between scoring under structural control and scoring under chase conditions, and that distinction decided this game. Furthermore, Madden projected a defensive TD for New England (Gonzalez fumble return); the actual defensive TD went to Seattle (Nwosu strip-sack return). The engine assigned the volatility to the correct phase of the game but the wrong team.

💰 SportsBook Review AI

Structural diagnosis — “The Vegas Flaw”: SBR’s three-LLM approach generated the most granular output (complete play-by-play, full box score) but the least structural depth. The model correctly intuited a low-scoring shape — and eerily predicted a failed NE two-point conversion late in the game. But it fundamentally mispriced two things: (1) the degree of NE’s offensive collapse, and (2) the possibility that one team could impose total structural control for three full quarters. LLM training-data priors assume mean reversion — NFL teams score, quarterbacks complete passes, defenses eventually bend. Super Bowl LX was an equilibrium-preservation game, not a mean-reversion game. Seattle maintained structural patience because the incentive structure rewarded it. The LLMs had no framework for modeling a team that chose not to chase touchdowns while winning through field goals, or a defense that could hold a shutout for 47 minutes of game clock.

SBR projected Darnold at 87.5% completion — the cleanest statistical line across all three simulations. Reality: 50%. The gap is not Darnold’s failure. It reflects a system that didn’t need passing efficiency to win. Seattle chose Walker-centric compression, and the LLMs’ training data couldn’t model a Super Bowl quarterback completing half his passes because that was the correct strategic choice.

The fundamental divergence: Sportsbook models assume mean reversion under time pressure — that a trailing team will eventually score because NFL offenses eventually produce points. MindCast models equilibrium preservation under incentive asymmetry — that a leading team with structural control can maintain that control indefinitely if the incentive structure rewards patience. SBR reads outcomes. MindCast reads regimes. Super Bowl LX was an equilibrium-preservation game from the first quarter onward.

Across all three comparisons, a pattern emerges: MindCast predicted the mechanism, Madden predicted the wrong mechanism, and SBR predicted no mechanism at all. Structural accuracy — knowing why the game breaks — proved more valuable than statistical precision or narrative granularity.
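
To make the statistical side of the comparison concrete, the following Python sketch computes simple absolute errors for the two score-producing simulations, using only the projections and box-score figures quoted in this article. The choice of error metrics is an assumption made for illustration. MindCast's 4-10 point band, published as a secondary output, is checked against the final margin as well.

```python
actual = {"sea": 29, "ne": 13, "cmp": 19, "att": 38}

projections = {
    "Madden NFL 26": {"sea": 23, "ne": 20, "cmp": 26, "att": 36},
    "SBR AI": {"sea": 20, "ne": 19, "cmp": 28, "att": 32},
}


def completion_pct(line):
    return round(100 * line["cmp"] / line["att"], 1)


def compare(proj, real):
    """Absolute errors on margin, combined points, and Darnold completion %."""
    return {
        "margin_error": abs((proj["sea"] - proj["ne"]) - (real["sea"] - real["ne"])),
        "total_points_error": abs((proj["sea"] + proj["ne"]) - (real["sea"] + real["ne"])),
        "completion_pct_error": round(
            abs(completion_pct(proj) - completion_pct(real)), 1),
    }


errors = {name: compare(proj, actual) for name, proj in projections.items()}
for name, err in errors.items():
    print(name, err)

# MindCast published a margin band rather than a score.
mindcast_band = (4, 10)
actual_margin = actual["sea"] - actual["ne"]
margin_in_band = mindcast_band[0] <= actual_margin <= mindcast_band[1]
print("actual margin:", actual_margin, "inside 4-10 band:", margin_in_band)
```

On these metrics both score projections miss the margin by 13 or more points, and the final 16-point margin also falls outside the secondary score band, which is why the comparison in this section rests on mechanism rather than on score precision.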

🏆 IV. Why MindCast Won the Simulation Battle

Picking the winner is easy — all three models did it. The harder question is whether a simulation correctly identified the mechanism of victory: the specific structural dynamics, regime shifts, and decision-making failures that determined the outcome. Super Bowl LX provides a clean test because the game’s resolution was so extreme that only one model’s framework can explain it.

The three simulations asked different questions about the same game:

Madden asked: What happens when these rosters collide? → Physics with randomness

SBR asked: What does a plausible game look like? → Narrative from training data

MindCast asked: Which system survives when pressure punishes its preference? → Cognition under stress

Super Bowl LX answered MindCast’s question.

The game was not decided by player ratings (Madden’s thesis), narrative plausibility (SBR’s thesis), or statistical averages. It was decided by which team could operate in multiple modes under championship stress — and which team could not.

Seattle demonstrated multi-regime survivability in its purest form:

Opened in compression (four consecutive FG drives, clock control, defensive suffocation through three full quarters)

Absorbed the loss of WR1 Jaxon Smith-Njigba to concussion protocol without structural breakdown

Shifted to expansion when game state permitted (Q4 TD to AJ Barner — a fourth-round tight end with 4 catches for 54 yards on the night, and one of Darnold’s most reliable targets throughout)

Defense escalated from suffocation to destruction: 6 sacks (tied Super Bowl record), 2 interceptions, a strip-sack fumble return for TD (Nwosu), a forced fumble (Hall/Murphy, Q3), 11 QB hits — three defenders recorded multiple sacks

Jason Myers made Super Bowl history with 5 field goals — validating the “payoff structure theft” thesis. Seattle didn’t need touchdowns to maintain strategic control. They harvested points from field position.

Maintained zero turnovers throughout the entire game

Walker-centric ground game (135 yds, 4.7 avg) provided the structural foundation across all modes

New England demonstrated single-gear compression’s fatal ceiling:

Achieved its preferred game geometry (low-scoring, field-position game) but couldn’t monetize it

Produced zero points through 47 minutes and 27 seconds of game clock

Zero red-zone appearances through three full quarters

Generated its only scoring drives after trailing 19-0 and 29-7 — garbage time, not adaptation

Maye’s processing ceiling under Macdonald’s disguise scheme produced exactly the cognitive failure cascade the CDT simulation predicted: strip-sack fumble lost to Hall/Murphy (Q3), interception to Julian Love (Q4), strip-sack by Witherspoon returned for TD by Nwosu (Q4), failed two-point conversion

3 total turnovers — all in the second half, all under forced expansion

Never demonstrated acceleration grammar. NE’s 2 TDs came when the game was structurally over. Neither met the falsification threshold of ≥2 scoring drives under 3 minutes while the game remained competitive.

The pre-game analysis concluded: “The simulations do not agree because they share assumptions. They agree because Seattle wins across structures, mechanics, and narratives.”

The post-game reality: Seattle won through structure. The mechanics and narratives both missed.

MindCast did not win the simulation battle because it picked the right team. It won because it identified the right question, which system survives under stress, and published falsifiable conditions that the game could have disproved but didn’t. Structure beats statistics. Regime analysis beats narrative plausibility.

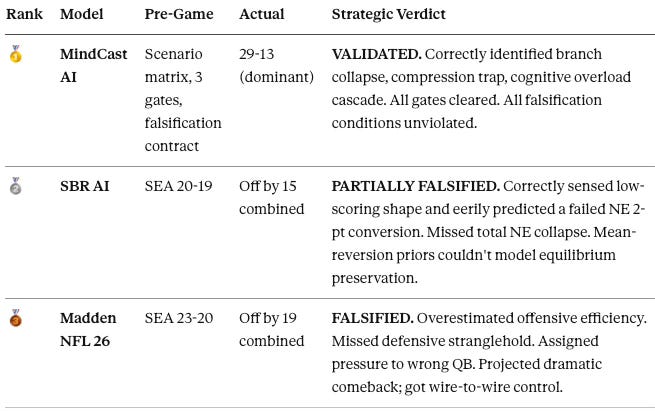

📐 V. Final Simulation Hierarchy

Three simulations entered Super Bowl LX with published predictions. One was validated, one was partially falsified, and one was structurally falsified. The hierarchy below reflects not just who got closer to the final score, but which model correctly identified the game’s governing dynamics.

🥇 MindCast AI — Structurally right, not just directionally right.

Published falsifiable gates before kickoff — all three cleared on schedule. Published a falsification contract — every condition remained unviolated for the entire game. Self-corrected during the season (NFC Championship thesis revision → Super Bowl multi-regime framework). Generated forward-looking predictions that improved as the game progressed — each gate testable, each gate published before the threshold was crossed.

MindCast is the only simulation that explained why Seattle won, when the outcome became structurally determined, and what would have proved the model wrong. Game geometry proved a better predictor than player ratings (Madden) or narrative plausibility (SBR).

The mechanism was right. The structure was right. The gates held.

🥈 SportsBook Review AI — Got the shape, not the structure.

Correctly sensed low-scoring texture. SBR’s 20-19 projected a lower-scoring, more defense-driven game than Madden’s 23-20, which fits the 12-0 score through three quarters, although the final combined total of 42 points landed closer to Madden’s 43 than to SBR’s 39. The eerie prediction of a failed NE two-point conversion late in the game, which actually occurred, demonstrates the LLMs’ capacity for pattern recognition. But SBR missed the complete NE offensive collapse and projected competitive symmetry that never materialized. Mean-reversion priors couldn’t model equilibrium preservation. The LLMs generated a plausible Super Bowl. Reality delivered an implausible one, and only MindCast’s framework had the architecture to explain why.

🥉 Madden NFL 26 — Got the winner right, for the wrong reasons.

Overfit to explosive scoring loops. Misassigned pass-rush pressure to the wrong quarterback (projected 5 Darnold sacks; reality: 1 Darnold sack, 6 Maye sacks). Projected a cinematic comeback; reality was wire-to-wire control. Walker’s 135 rush yards nearly doubled Madden’s full-game projection of 76. The engine’s inability to model Mike Macdonald’s “cognitive overload” scheme against young quarterbacks remains its primary blind spot. The engine needs drama to resolve. Football under championship stress does not.

The hierarchy is clear: structural governance outperformed physics simulation and narrative generation. MindCast delivered the only framework that explained why the game broke, when control locked, and what would have disproved the thesis. No falsification condition was triggered. No gate was missed.

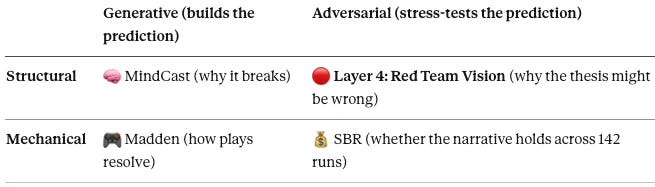

🔮 VI. The Deeper Finding — The Best Simulation Merges All Three. But Even That Isn’t Enough.

Super Bowl LX exposed more than which model won — it revealed the architectural gaps in every existing AI prediction system. Each simulation captured something real, but none captured everything. The path forward requires merging all three approaches and adding a layer none of them possess: real-time adversarial integrity testing.

The pre-game comparison identified the signal hidden in the consensus: “Agreement emerging across systems that model entirely different aspects of reality is itself the signal.”

The diagnosis was correct. All three simulations picked Seattle because Seattle wins across structures, mechanics, and narratives. But Super Bowl LX revealed something more important than which model won: it revealed what the ideal simulation would look like, and what’s still missing from all of them.

No single model captured everything. Each captured something the others couldn’t:

MindCast provided the governing architecture — the structural logic that explained why the game broke the way it did, when the outcome became determined, and what would prove the model wrong. Gate logic, falsification contracts, multi-regime survivability, cognitive digital twins under stress. But MindCast does not simulate individual plays. It doesn’t resolve blocking assignments or route-running physics. It explains which system survives — not the snap-by-snap mechanism of how each play resolves.

Madden provided play-level physics — individual player interactions, blocking and tackling resolution, locomotion data, rating-driven outcomes across thousands of micro-events. Madden can model what happens on a given play better than any language model. But it has no behavioral economics layer, no cognitive load modeling, no mechanism for distinguishing a ceiling from a choice. It produces drama because its engine requires it — not because the game demands it.

SBR provided narrative texture and multi-model coherence — 142-run role-based prompting across three competing LLMs, generating complete play-by-play logs with statistical granularity that neither MindCast nor Madden matched. The methodology is genuinely novel. But without a causal engine underneath, the narrative defaults to training-data priors about how NFL games “should” unfold — and Super Bowl LX didn’t unfold the way NFL games usually do.

Merging all three produces a system with three layers:

MindCast’s CDT framework as the governing layer — setting the structural resolution conditions, the gate logic, the falsification architecture, and the behavioral economics that determine which system survives under stress

Madden’s physics engine as the play-resolution layer — resolving individual interactions within the structural constraints the governing layer establishes

SBR’s multi-model role prompting as the narrative coherence layer — generating realistic play sequences and statistical outputs that satisfy both the structural architecture above and the physical constraints below

A merged three-layer system would be the first AI simulation that answers why the game breaks, how each play resolves, and what the box score looks like — simultaneously. But it still has a blind spot.

🔴 The Missing Fourth Layer: Adversarial Integrity

All three existing simulations are generative. They produce predictions. None of them actively try to destroy their own thesis in real time. MindCast’s falsification contract is the closest thing to adversarial discipline in the current landscape — but it’s static, pre-committed before kickoff.

The analytical symmetry requires a fourth layer:

SBR already functions as a partial adversarial layer on the mechanical side — running 142 iterations is a form of stress-testing narrative plausibility across variance. But nobody occupies the structural-adversarial quadrant. That’s the gap.

Red Team Vision — the fourth layer — would be a dynamic falsification engine that:

Ingests live game data as it arrives

Actively searches for emerging patterns that contradict the structural thesis

Generates counter-predictions: “If the next three plays produce X, the governing model is wrong because Y”

Forces the structural layer to either absorb the challenge or recalibrate in real time

Produces a live epistemic integrity score that strengthens or degrades as the game progresses — not a static probability, but a continuous measure of how much reality is confirming or threatening the thesis
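
The behaviors listed above can be sketched as a small update loop. The article does not specify how the integrity score would be computed, so everything below is a hypothetical illustration: the class names, the multiplicative penalty, and the recovery rate are all assumptions, and the counter-conditions are borrowed from the pre-game falsification contract.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CounterCondition:
    name: str
    # Returns True when a live snapshot contradicts the structural thesis.
    violated: Callable[[Dict[str, float]], bool]


@dataclass
class RedTeam:
    conditions: List[CounterCondition]
    score: float = 1.0     # 1.0 = thesis unchallenged, 0.0 = thesis untenable
    penalty: float = 0.5   # hypothetical multiplier per violated condition
    recovery: float = 0.1  # hypothetical drift back toward 1.0 when clean
    log: List[str] = field(default_factory=list)

    def ingest(self, snapshot: Dict[str, float]) -> float:
        """Update the integrity score from one snapshot of live game data."""
        hits = [c.name for c in self.conditions if c.violated(snapshot)]
        if hits:
            self.score *= self.penalty ** len(hits)
            self.log.extend(hits)
        else:
            self.score += self.recovery * (1.0 - self.score)
        return self.score


red_team = RedTeam(conditions=[
    CounterCondition("ne_acceleration",
                     lambda s: s["ne_fast_scoring_drives"] >= 2),
    CounterCondition("early_turnovers",
                     lambda s: s["sea_to_diff"] <= -2),
])

# Illustrative snapshots only; these are not taken from the game record.
clean = {"ne_fast_scoring_drives": 0, "sea_to_diff": 0}
threat = {"ne_fast_scoring_drives": 2, "sea_to_diff": 0}
for snap in (clean, threat, clean):
    print(round(red_team.ingest(snap), 3))
```

In this sketch a contradicted condition cuts the score sharply while clean snapshots restore it only gradually, so the score behaves as a running measure of confirmation or threat rather than a fixed pre-game probability.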

The prototype already exists inside MindCast’s methodology. The falsification contract is the embryonic form. The gate logic with live recalibration after each quarter is the working prototype. What Red Team Vision formalizes is the step from pre-committed falsification to active adversarial pressure — a Devil’s Advocate Digital Twin that runs against the primary thesis throughout the game, publishing counter-conditions alongside the gate updates.

The post-game validation then isn’t just “did the gates hold.” It’s: did the adversarial engine identify any legitimate threat to the thesis, and how did the primary model respond?

That’s the difference between a prediction system and an epistemic integrity system — which is the real product. Not “what will happen” but “how confident should you be in this thesis, and what would change your mind.” That question applies to football. It applies equally to antitrust enforcement prediction, regulatory capture analysis, and institutional behavior modeling — the domains where MindCast AI operates.

The complete four-layer architecture:

🧠 Structural Governance (MindCast CDT) — why the system breaks

🎮 Physics Resolution (Madden engine) — how each interaction resolves

💰 Narrative Coherence (SBR multi-model) — what the output looks like

🔴 Adversarial Integrity (Red Team Vision) — whether the thesis survives its own stress test

No one has built the merged system. No one has built the fourth layer. Super Bowl LX just proved why both are necessary — and MindCast AI is positioned to build them.

Three AIs walked into Super Bowl LX. Each saw a different game. The real game was all four layers at once. Three exist. One is coming.

Football validated the architecture. Law and behavioral economics is next. The same CDT framework that predicted Seattle’s multi-regime dominance over New England’s cognitive ceiling applies to every institutional stress test MindCast models — from antitrust enforcement to regulatory capture to export control regimes. Super Bowl LX was the proof environment. The application is everywhere else.

MindCast AI NFL Series: